AI And The Illusion Of Learning: Promoting Ethical And Responsible AI


The Limitations of Current AI: Understanding the "Illusion"

Current AI systems, most of them based on machine learning, excel at statistical pattern recognition: they identify patterns in vast datasets and make predictions based on those patterns. This is very different from learning in the fuller sense, which involves understanding, reasoning, and adapting to novel situations. That gap is what this article calls the "illusion" of learning, and the limitations that follow from it have far-reaching ethical consequences.

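- Overfitting and Bias in Datasets: A model learns whatever regularities its training data contains, including its skews, and an overfitted model goes further by memorizing quirks of the training set instead of patterns that hold in general. Models trained on biased datasets therefore tend to perpetuate, and can amplify, those biases. A facial recognition system trained primarily on images of light-skinned individuals is likely to perform worse on darker-skinned individuals, which is why diverse and representative datasets are critical in AI development.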

- Inability to Generalize Beyond Training Data: AI models often struggle to transfer what they learned in one context to another, especially when the data they encounter in deployment differs from their training data (distribution shift). A self-driving car trained to navigate one city may perform poorly in a different environment with unfamiliar road layouts, signage, or weather. In safety-critical systems, this limitation poses significant risks.

- Lack of Contextual Understanding and Common-Sense Reasoning: AI systems often lack the contextual understanding and common-sense reasoning that humans bring to everyday situations. A chatbot might produce grammatically correct but nonsensical responses because it has no genuine comprehension of what it is saying. This highlights the limitations of current natural language processing (NLP) techniques.

For example, a chatbot trained on a massive dataset of online text can generate seemingly coherent responses that nonetheless reflect no genuine understanding. Such a chatbot can also be manipulated with carefully crafted prompts into producing inappropriate or harmful output, which exposes how shallow its apparent comprehension is. Research on Explainable AI (XAI) aims to make failures like these easier to diagnose by making AI decision-making processes more transparent and understandable.
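A toy illustration of the generalization problem described in the list above, under deliberately artificial assumptions: the "real world" is the made-up relationship y = x², and the "model" is a straight line fitted by least squares on inputs between 0 and 1. The fit looks accurate on that range, but its error grows sharply on inputs between 2 and 3 that it never saw. Real models and real distribution shifts are far more complex; this sketch only shows the mechanism.

```python
def fit_line(xs, ys):
    """Ordinary least-squares fit of y = a*x + b (closed form)."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var = sum((x - mean_x) ** 2 for x in xs)
    a = cov / var
    b = mean_y - a * mean_x
    return a, b

def mean_abs_error(a, b, xs, true_fn):
    """Average absolute gap between the line's predictions and the true values."""
    return sum(abs((a * x + b) - true_fn(x)) for x in xs) / len(xs)

def true_fn(x):
    # Hypothetical "real world" relationship; the model only sees samples of it.
    return x * x

train_xs = [i / 20 for i in range(21)]        # training inputs: 0.0 .. 1.0
shifted_xs = [2 + i / 20 for i in range(21)]  # deployment inputs: 2.0 .. 3.0

a, b = fit_line(train_xs, [true_fn(x) for x in train_xs])
in_dist = mean_abs_error(a, b, train_xs, true_fn)
out_dist = mean_abs_error(a, b, shifted_xs, true_fn)
print(f"error on training range: {in_dist:.3f}")
print(f"error after shift:       {out_dist:.3f}")
```

Nothing in the training data warns the model that the relationship bends away from a straight line outside the range it was shown, which is the same blind spot a system faces in an unfamiliar environment.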

Ethical Concerns in AI Development and Deployment

The limitations of current AI directly translate into significant ethical concerns. The illusion of intelligence masks the potential for harm and injustice.

- AI Bias and Algorithmic Fairness: Biased algorithms can produce unfair and discriminatory outcomes in high-stakes applications, from loan approvals to criminal justice. Training data often reflects existing societal inequalities, and models trained on it can magnify them, putting certain groups at an unfair disadvantage. For instance, facial recognition systems have shown markedly higher error rates for people of color, underscoring the urgent need for AI bias mitigation strategies.

- Accountability and Transparency in AI Systems: The complexity of many AI models makes it hard to understand how they arrive at their decisions (the "black box" problem). This opacity hinders accountability and makes errors or biases harder to identify and correct. Developing and applying Explainable AI (XAI) techniques is crucial to addressing this issue.

- Potential for Misuse of AI: Misuse is a growing concern. Autonomous weapons systems raise serious ethical questions about accountability and unintended consequences. Deepfakes can be used to spread misinformation and damage reputations, and poorly secured AI systems can expose personal data and violate privacy.
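Fairness problems like the lending example in the list above can at least be measured. A minimal sketch, assuming binary decisions (1 = approved) and a known group label per applicant, both invented here for illustration: compute each group's selection rate, then the ratio of the lowest rate to the highest. A ratio below 0.8 corresponds to the "four-fifths" rule of thumb from US employment-selection guidelines. This is only one of several fairness metrics, and no single number certifies a system as fair.

```python
from collections import defaultdict

def selection_rates(decisions, groups):
    """Fraction of positive decisions (e.g. loan approved = 1) for each group."""
    totals = defaultdict(int)
    positives = defaultdict(int)
    for decision, group in zip(decisions, groups):
        totals[group] += 1
        positives[group] += decision
    return {g: positives[g] / totals[g] for g in totals}

def disparate_impact_ratio(rates):
    """Lowest group selection rate divided by the highest (1.0 means parity)."""
    return min(rates.values()) / max(rates.values())

# Hypothetical loan decisions (1 = approved) and applicant group labels.
decisions = [1, 1, 1, 0, 1, 1, 0, 0, 1, 0]
groups = ["A", "A", "A", "A", "A", "B", "B", "B", "B", "B"]

rates = selection_rates(decisions, groups)
ratio = disparate_impact_ratio(rates)
print(rates)                   # {'A': 0.8, 'B': 0.4}
print(f"ratio = {ratio:.2f}")  # ratio = 0.50
if ratio < 0.8:  # "four-fifths" rule of thumb
    print("warning: possible adverse impact; investigate before deployment")
```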

Addressing these ethical concerns requires a multi-faceted approach, including rigorous testing, robust AI safety measures, and clear ethical guidelines.
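The "black box" problem described above can be probed even when a model's internals are inaccessible. One simple model-agnostic XAI technique is permutation importance: shuffle one input feature, and if accuracy drops, the model was relying on that feature. Libraries such as scikit-learn provide this as `sklearn.inspection.permutation_importance`; the version below is a from-scratch sketch, and the `black_box` model and its data are hypothetical.

```python
import random

def accuracy(model, rows, labels):
    return sum(model(r) == y for r, y in zip(rows, labels)) / len(labels)

def permutation_importance(model, rows, labels, n_features, n_repeats=10, seed=0):
    """Average drop in accuracy when one feature's column is randomly shuffled."""
    rng = random.Random(seed)
    baseline = accuracy(model, rows, labels)
    importances = []
    for j in range(n_features):
        drops = []
        for _ in range(n_repeats):
            column = [r[j] for r in rows]
            rng.shuffle(column)
            # Rebuild each row with only feature j replaced by a shuffled value.
            shuffled = [r[:j] + [v] + r[j + 1:] for r, v in zip(rows, column)]
            drops.append(baseline - accuracy(model, shuffled, labels))
        importances.append(sum(drops) / n_repeats)
    return importances

# Hypothetical "black box": approves when feature 0 (say, income) exceeds 50
# and silently ignores feature 1.
def black_box(row):
    return int(row[0] > 50)

data_rng = random.Random(42)
rows = [[data_rng.uniform(0, 100), data_rng.uniform(0, 100)] for _ in range(200)]
labels = [black_box(r) for r in rows]

scores = permutation_importance(black_box, rows, labels, n_features=2)
print(scores)  # feature 0 scores roughly 0.5; feature 1 scores exactly 0.0
```

Permutation importance reveals which inputs a model depends on, not why; if a sensitive attribute or a proxy for one scored highly, that would be a signal to investigate further.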

Promoting Responsible AI Development: Best Practices and Guidelines

Mitigating the risks associated with AI requires a proactive and responsible approach to its development and deployment.

- Diverse and Representative Datasets: Using diverse and representative datasets is crucial for mitigating bias in AI systems. This requires careful data collection, curation, and preprocessing so that models do not inherit and perpetuate existing societal biases.

- Rigorous Testing and Validation: AI systems should undergo rigorous testing and validation before deployment to uncover biases, errors, and vulnerabilities. This requires robust testing methodologies and evaluation metrics reported separately for each affected group, not only as a single overall score.

- Human Oversight and Intervention: Human oversight of AI decision-making is essential for responsible and ethical outcomes. In practice, this can mean human-in-the-loop systems, in which people review the model's output and retain final authority over critical decisions.

- Ethical Guidelines and Regulations: The development of clear ethical guidelines and regulations for AI is crucial to ensure responsible innovation and prevent harm. This requires collaboration between governments, researchers, industry leaders, and ethicists.

- Interdisciplinary Collaboration: Addressing the ethical challenges of AI requires interdisciplinary collaboration between computer scientists, ethicists, social scientists, policymakers, and other stakeholders.
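The human-oversight practice listed above can be built into a system's control flow. A minimal sketch of one common pattern, confidence-based deferral: the model's answer is accepted only for non-critical cases where its confidence meets a threshold, and everything else is routed to a person. The `route` and `reviewer` functions, the labels, and the 0.9 threshold are all hypothetical, and the pattern assumes the model's confidence scores are reasonably calibrated, which should itself be tested.

```python
from dataclasses import dataclass
from typing import Callable

@dataclass
class Decision:
    label: str
    confidence: float
    decided_by: str  # "model" or "human"

def route(label: str, confidence: float, threshold: float,
          human_review: Callable[[str, float], str],
          critical: bool = False) -> Decision:
    """Accept the model's label only for non-critical cases whose confidence meets
    the threshold; all other cases go to a human, who makes the final call."""
    if not critical and confidence >= threshold:
        return Decision(label, confidence, "model")
    return Decision(human_review(label, confidence), confidence, "human")

# Hypothetical reviewer; a real system would open a case in a review queue
# and wait for a person's decision.
def reviewer(suggested_label: str, confidence: float) -> str:
    return "needs_more_documents"

print(route("approve", 0.97, threshold=0.9, human_review=reviewer))
print(route("deny", 0.62, threshold=0.9, human_review=reviewer))
print(route("approve", 0.99, threshold=0.9, human_review=reviewer, critical=True))
```

The `critical` flag reflects the idea that some decisions should reach a human no matter how confident the model is.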

Several organizations, including the Partnership on AI and the AI Now Institute, actively promote ethical AI. Their initiatives provide valuable resources and guidelines for responsible AI development.

The Future of Ethical AI: Moving Beyond the Illusion

The future of AI hinges on moving beyond the current "illusion of learning" and developing systems that are truly intelligent, transparent, and ethical.

- Explainable AI (XAI) and Reinforcement Learning from Human Feedback: Research into explainable AI (XAI) aims to make AI decision-making processes more transparent and understandable. Reinforcement learning from human feedback (RLHF) trains a model against a reward signal learned from human judgments of which outputs are better, which can help align its behavior with human preferences and values.

- AI Safety Research: Continued research and development in AI safety are critical to ensuring that AI systems are robust, reliable, and aligned with human values. This includes research on AI alignment, robustness, and security.

- AI Education and Public Awareness: Public engagement and education on AI issues are crucial to fostering a society that understands and can responsibly shape the development and use of AI. This involves promoting AI literacy and critical thinking about the implications of AI technologies.
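To make the RLHF item above more concrete: a common first step is to train a reward model on pairs of responses where a human labeler preferred one over the other, using a loss derived from the Bradley-Terry model of pairwise preferences. The sketch below shows only that loss for a single comparison, with invented reward scores; the later step, in which the language model itself is optimized with reinforcement learning against the trained reward model, is not shown. A production implementation would also use a numerically stable log-sigmoid.

```python
import math

def preference_probability(reward_chosen, reward_rejected):
    """Bradley-Terry model: probability that the labeler prefers the 'chosen'
    response, given the reward model's scalar scores for both responses."""
    return 1.0 / (1.0 + math.exp(-(reward_chosen - reward_rejected)))

def pairwise_loss(reward_chosen, reward_rejected):
    """Negative log-likelihood of the observed human preference.
    Training the reward model drives this toward 0."""
    return -math.log(preference_probability(reward_chosen, reward_rejected))

# Hypothetical reward-model scores for two answers to the same prompt,
# where a human labeler preferred the first answer.
print(pairwise_loss(2.0, 0.5))  # small loss: the reward model agrees with the labeler
print(pairwise_loss(0.5, 2.0))  # large loss: the reward model disagrees
```

The reward model only learns what labelers preferred in the comparisons it was shown, so the quality and diversity of that human feedback matter as much as the diversity of any other training data.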

By addressing the limitations and ethical concerns discussed in this article, we can pave the way for an AI future that is beneficial for all.

Conclusion: A Call for Ethical AI

The "illusion of learning" in current AI systems poses significant ethical challenges, including bias, lack of transparency, and the potential for misuse. Addressing these challenges requires a concerted effort to promote responsible AI development and deployment. This includes using diverse datasets, rigorous testing, human oversight, and ethical guidelines. Let's move beyond the illusion of learning and build an AI future that is ethical, fair, and beneficial for all. Learn more about promoting ethical and responsible AI and join the conversation!

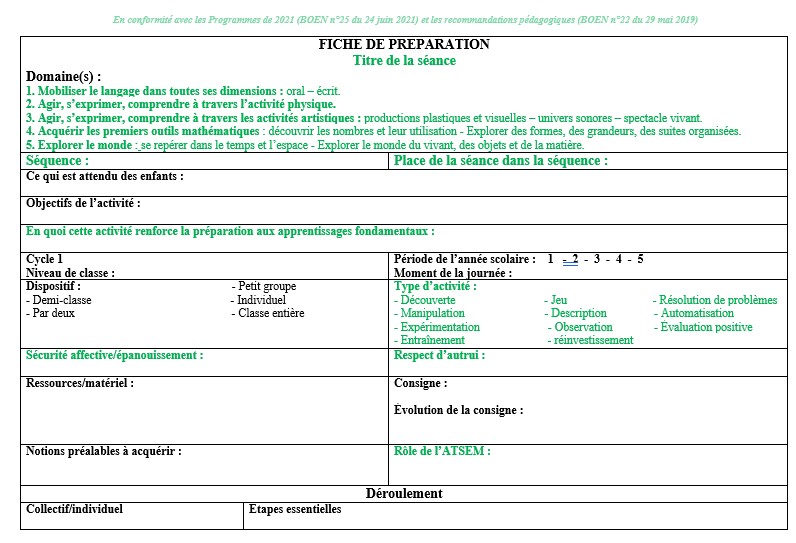
