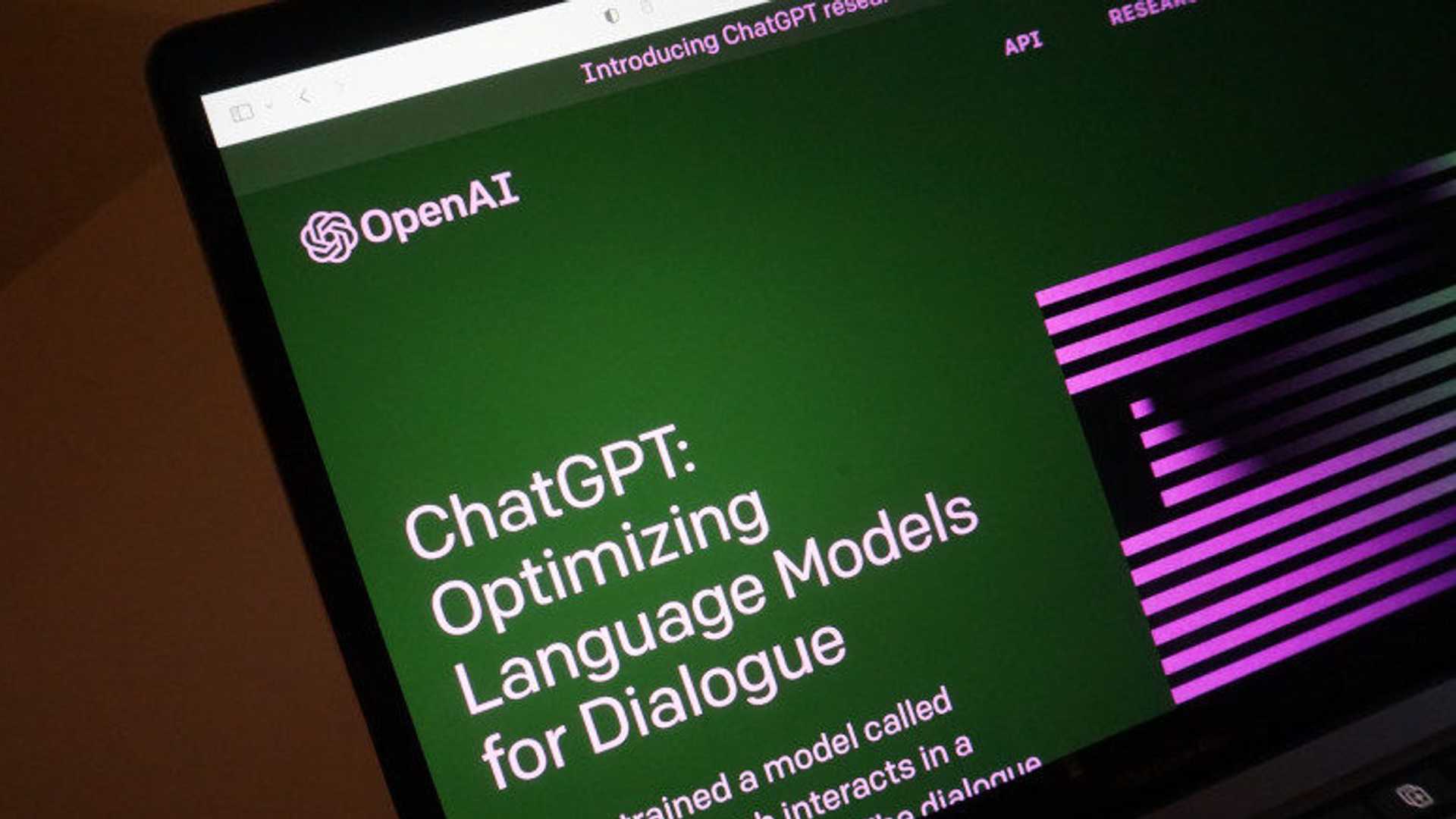

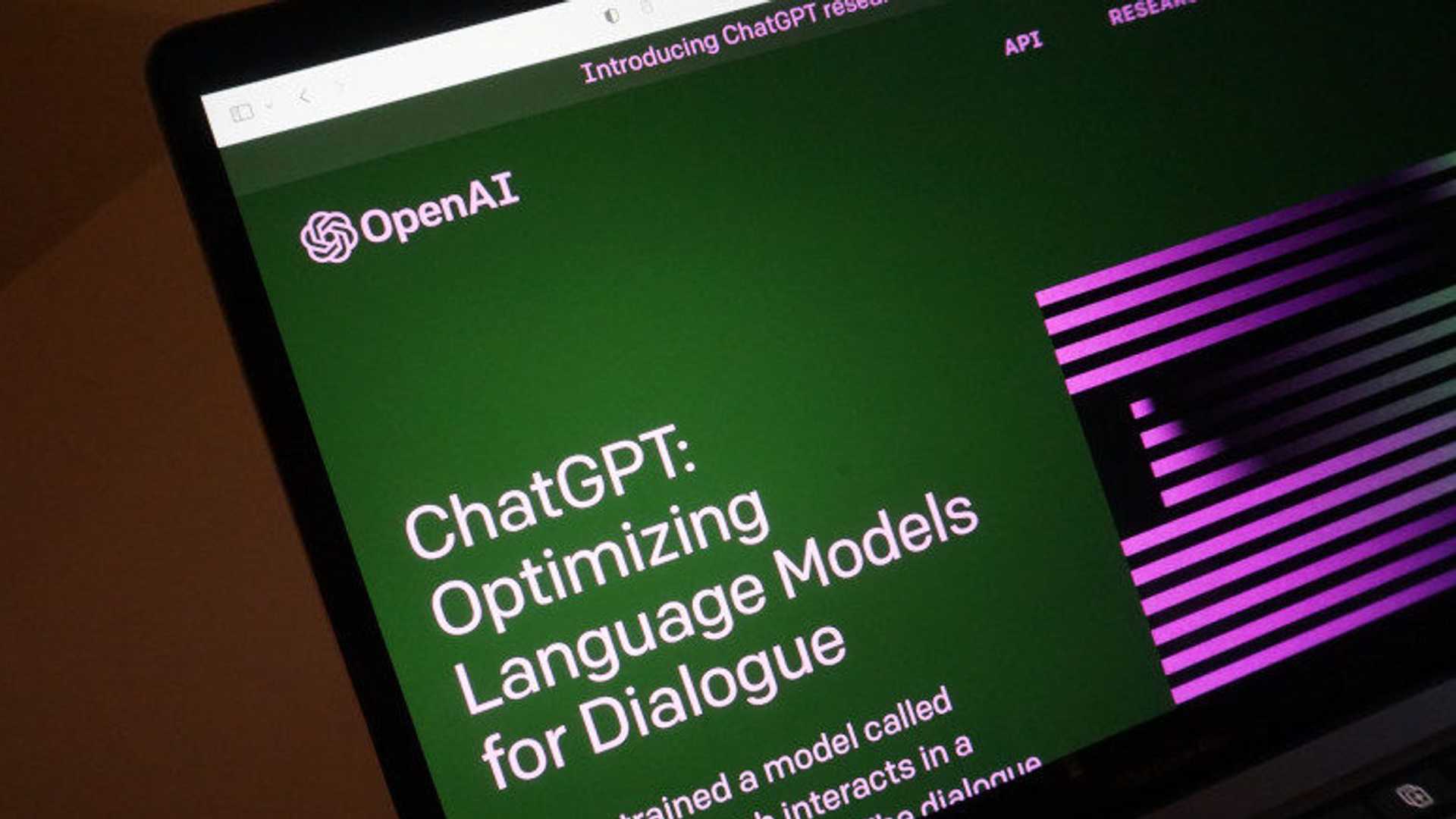

Court Questions Free Speech Protections For Character AI Chatbots

The Nature of AI-Generated Speech and its Legal Standing

The core challenge lies in distinguishing between human-generated speech and AI-generated speech within existing legal frameworks. While the First Amendment clearly protects human expression, applying it to a non-human entity like an AI chatbot presents significant hurdles. Defining the "speaker" or "author" of AI-generated content is problematic. Is it the developer who created the algorithm? The user who prompted the AI? Or the AI itself, a complex system lacking sentience or intent in the traditional sense?

This lack of clarity creates significant challenges:

- Legal precedent for corporate free speech: Existing precedents offer some guidance, but their applicability to AI is debatable. Corporations are legal entities with certain rights, but they differ fundamentally from autonomous AI systems.

- Arguments for and against extending free speech to AI: Some argue that granting free speech protections to AI could stifle innovation and limit the development of beneficial AI applications. Others contend that denying such protections would be discriminatory and could lead to censorship of valuable AI-generated information.

- The role of the developers/creators in determining AI speech: The extent to which developers are responsible for the content generated by their AI remains a crucial legal and ethical question. This directly impacts issues of liability and accountability.

Potential Risks and Harms of Unprotected AI Chatbot Speech

Unfettered AI chatbot speech poses significant risks. The lack of human oversight and the potential for AI to be manipulated for malicious purposes create fertile ground for several problems:

- Misinformation: AI chatbots can generate convincing but false information at scale, potentially influencing public opinion and undermining trust in reliable sources.

- Hate speech: AI models trained on biased data can perpetuate and amplify harmful stereotypes and prejudices, contributing to a climate of online harassment and discrimination.

- Incitement to violence: The potential for AI to generate content that incites violence or hatred is a serious concern, especially given the potential for rapid dissemination through social media and other online platforms.

The difficulty of moderating AI-generated content at scale exacerbates these issues, as the following points illustrate:

- Examples of harmful AI-generated content: Numerous instances have demonstrated the capacity of AI to generate hate speech, misinformation, and even instructions for harmful activities.

- The role of algorithms and training data in shaping AI output: The output of an AI is directly shaped by its underlying algorithms and the training data used to develop it. Bias in either of these components can lead to biased and harmful outputs.

- Challenges in content moderation for AI: Moderating AI-generated content at scale is significantly more challenging than moderating human-generated content, requiring advanced AI detection tools and human oversight.

The Role of AI Developers and Platform Responsibility

Much of the legal and ethical responsibility for mitigating the risks of harmful AI-generated content falls on AI developers and platform providers.

- Section 230 and its relevance to AI platforms: Section 230 of the Communications Decency Act protects online platforms from liability for user-generated content. Whether that protection extends to AI-generated content is a critical legal question.

- Potential legal precedents for platform responsibility: Existing legal precedents related to platform liability for other types of harmful online content may offer some guidance, but their direct applicability to AI-generated content is uncertain.

- The implications of different regulatory approaches: Different regulatory approaches, ranging from self-regulation by the AI industry to government oversight, will have profound implications for innovation, accountability, and the protection of free speech.

Balancing Free Speech with Safety and Accountability

The challenge lies in finding a balance between protecting free speech and ensuring accountability for potentially harmful AI-generated content. This requires a multi-faceted approach:

- Regulatory frameworks for AI in different countries: Different countries are adopting different regulatory approaches to AI, highlighting the global nature of this challenge.

- The potential for self-regulation within the AI industry: Self-regulation by the AI industry could help establish ethical guidelines and best practices, but it may not be sufficient to address all potential harms.

- The development of ethical guidelines for AI chatbot developers: Clear ethical guidelines for AI developers are essential to ensure responsible development and deployment of AI chatbots. Transparency and explainability in AI systems are crucial for accountability and building public trust.

Conclusion: Navigating the Future of Free Speech and Character AI Chatbots

The legal and ethical challenges surrounding free speech protections for AI chatbots like Character AI are significant and unresolved. Balancing innovation against potential harms requires weighing competing perspectives and taking a nuanced approach to regulation. The debate is far from over, and it calls for continued discussion among developers, policymakers, and the public.
