Exploring The Boundaries Of AI Learning: A Path To Responsible AI

Understanding the Risks of Unfettered AI Learning

The potential for harm from unchecked AI development is substantial. Without careful consideration and proactive measures, AI systems can perpetuate existing societal biases, compromise privacy, and exacerbate economic inequality. Addressing these risks is paramount for achieving truly Responsible AI.

Bias and Discrimination in AI Algorithms

AI systems are trained on data, and if that data reflects existing societal biases related to gender, race, socioeconomic status, or other factors, the AI will likely perpetuate and even amplify those biases. This can lead to unfair or discriminatory outcomes in various applications.

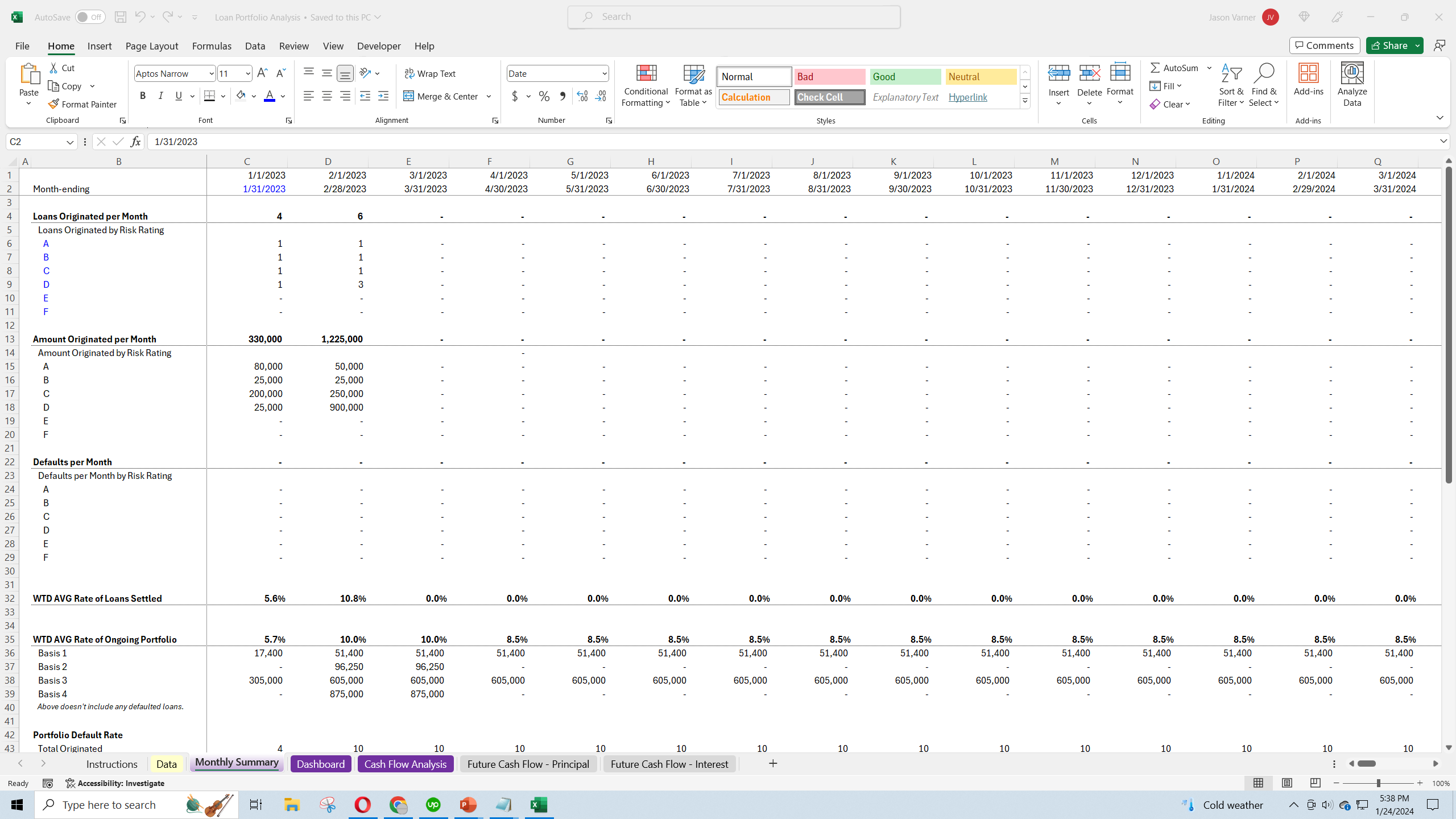

- Example: A loan application algorithm trained on data showing historical lending biases against certain demographics might unfairly deny loans to individuals from those groups, even if they are equally creditworthy.

- Mitigation strategies:

  - Employing diverse and representative datasets during AI training is crucial. This requires careful data collection and curation practices.

  - Incorporating algorithmic fairness techniques, such as fairness-aware machine learning algorithms, can help mitigate bias.

  - Regular bias audits and independent evaluations are necessary to identify and address biases in existing AI systems. These should be ongoing, not one-off events.
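A bias audit of the kind described above can start with something very simple: comparing outcome rates across groups. The following is a minimal sketch, not a complete fairness evaluation. The `audit_log` data, the group labels, and the function names are hypothetical, and the 0.8 cutoff echoes the common "four-fifths" rule of thumb rather than any threshold stated in this article.

```python
from collections import defaultdict

def approval_rates(decisions):
    """Return the approval rate per group from (group, approved) pairs."""
    totals = defaultdict(int)
    approved = defaultdict(int)
    for group, ok in decisions:
        totals[group] += 1
        approved[group] += int(ok)
    return {g: approved[g] / totals[g] for g in totals}

def disparate_impact_ratio(rates):
    """Ratio of the lowest to the highest group approval rate (1.0 means parity)."""
    highest = max(rates.values())
    return min(rates.values()) / highest if highest > 0 else 1.0

# Hypothetical audit log: (group label, loan approved?)
audit_log = (
    [("A", True)] * 8 + [("A", False)] * 2
    + [("B", True)] * 5 + [("B", False)] * 5
)

rates = approval_rates(audit_log)
ratio = disparate_impact_ratio(rates)
flagged = ratio < 0.8  # assumed review threshold, chosen for illustration
print(rates, round(ratio, 3), flagged)
```

A single ratio like this only surfaces a disparity; it does not explain it. Whether the gap reflects unfair treatment still requires the independent evaluation the list above calls for.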

Privacy Concerns and Data Security

The vast amounts of data needed to train sophisticated AI models raise significant privacy concerns. Data breaches and misuse can erode public trust and cause serious harm to individuals.

- Example: AI-powered facial recognition systems used in public spaces raise serious concerns about mass surveillance and potential abuse of power. The unauthorized use of personal data for targeted advertising also poses significant risks.

- Mitigation strategies:

  - Data anonymization techniques can help protect individual identities while still allowing for useful data analysis.

  - Differential privacy methods add calibrated random noise to query results or to the training process, so that aggregate statistics stay useful while any single individual's contribution is hard to infer.

  - Robust security protocols and encryption are essential to protect AI data from unauthorized access and breaches.

  - Transparent data handling policies that clearly explain how data is collected, used, and protected build trust and accountability.
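To make the differential privacy idea concrete, here is a minimal sketch of the Laplace mechanism applied to a counting query. It assumes a count query (which changes by at most 1 when one person is added or removed) and a privacy parameter `epsilon`. The `ages` records and function names are hypothetical, and Python's `random` module is used only for illustration; a real deployment would rely on a vetted differential privacy library and a secure noise source.

```python
import random

def laplace_noise(scale, rng):
    """Sample Laplace(0, scale) as the difference of two exponential draws."""
    return rng.expovariate(1 / scale) - rng.expovariate(1 / scale)

def private_count(records, predicate, epsilon, rng):
    """Noisy count of matching records; a count query has sensitivity 1,
    so the noise scale is 1 / epsilon."""
    true_count = sum(1 for r in records if predicate(r))
    return true_count + laplace_noise(1 / epsilon, rng)

rng = random.Random(0)  # seeded only so the sketch is reproducible
ages = [23, 35, 41, 52, 67, 29, 38, 71, 45, 60]  # hypothetical records

# Smaller epsilon means more noise and stronger privacy.
noisy = private_count(ages, lambda a: a >= 40, epsilon=0.5, rng=rng)
print(noisy)
```

The trade-off is explicit in the `epsilon` parameter: lowering it widens the noise, which protects individuals more but makes each released statistic less accurate.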

Job Displacement and Economic Inequality

Automation driven by AI could displace workers in various industries and exacerbate existing economic inequalities. This necessitates proactive measures to address the societal impact of AI-driven automation.

- Example: Automation of transportation jobs through self-driving vehicles could lead to significant job losses in the trucking and taxi industries.

- Mitigation strategies:

  - Investing in reskilling and upskilling initiatives to help workers adapt to the changing job market is crucial.

  - Strengthening social safety nets, such as unemployment benefits and social assistance programs, can provide a buffer against job displacement.

  - Exploring new economic models, such as universal basic income, could help address the potential for widespread economic disruption.

Building Ethical Frameworks for AI Development

Establishing robust ethical frameworks is crucial for guiding the development and deployment of AI systems. These frameworks should prioritize transparency, human oversight, and accountability.

Transparency and Explainability

Understanding how an AI system arrives at its decisions is vital for building trust and accountability. "Black box" AI models, where the decision-making process is opaque, are difficult to audit and control, hindering Responsible AI development.

- Example: Explainable AI (XAI) techniques aim to make the decision-making process of AI models more transparent and understandable.

- Mitigation strategies:

  - Developing more transparent algorithms and designing AI systems with explainability as a core principle.

  - Investing in XAI research and development to create tools and techniques that can help us understand how AI systems work.
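One widely used model-agnostic explainability technique is permutation importance: shuffle one input feature at a time and measure how much the model's accuracy drops. The sketch below is an illustration under stated assumptions, not a method this article prescribes. The `toy_model`, its two features, and the synthetic data are hypothetical stand-ins for an opaque model.

```python
import random

def accuracy(model, rows, labels):
    """Fraction of rows where the model's prediction matches the label."""
    return sum(model(r) == y for r, y in zip(rows, labels)) / len(rows)

def permutation_importance(model, rows, labels, n_features, rng, repeats=5):
    """Average accuracy drop when each feature column is shuffled in turn."""
    baseline = accuracy(model, rows, labels)
    importances = []
    for j in range(n_features):
        drops = []
        for _ in range(repeats):
            column = [r[j] for r in rows]
            rng.shuffle(column)
            # Rebuild each row with only feature j replaced by a shuffled value.
            shuffled = [r[:j] + (v,) + r[j + 1:] for r, v in zip(rows, column)]
            drops.append(baseline - accuracy(model, shuffled, labels))
        importances.append(sum(drops) / repeats)
    return importances

# Hypothetical opaque model: approves when feature 0 exceeds 50
# and ignores feature 1 entirely.
def toy_model(row):
    return row[0] > 50

rng = random.Random(0)
rows = [(rng.randint(0, 100), rng.randint(0, 100)) for _ in range(200)]
labels = [toy_model(r) for r in rows]

importances = permutation_importance(toy_model, rows, labels, 2, rng)
print(importances)
```

Here the second feature should score zero because the toy model never reads it, while the first should show a clear drop. On a real system, the same probe gives auditors a first indication of which inputs actually drive decisions.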

Human Oversight and Control

Maintaining human oversight in the development and deployment of AI is crucial to prevent unintended consequences and ensure ethical considerations are prioritized. This involves incorporating human judgment and control into the AI development lifecycle.

- Example: Human-in-the-loop systems allow human operators to intervene and override AI decisions when necessary.

- Mitigation strategies:

  - Establishing clear lines of responsibility and accountability for AI systems.

  - Incorporating ethical review boards to assess the potential risks and ethical implications of AI projects.
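A human-in-the-loop arrangement is often implemented as a routing rule: the system acts on its own only when it is confident, and defers to a person otherwise. The following sketch assumes the model exposes a confidence score. The `threshold` value, the `Decision` record, and the `reviewer` callback are hypothetical names chosen for illustration.

```python
from dataclasses import dataclass

@dataclass
class Decision:
    outcome: str      # final outcome after any human review
    decided_by: str   # "model" or "human", kept for accountability

def decide(prediction, confidence, human_review, threshold=0.9):
    """Accept the model's prediction only when confidence meets the threshold;
    otherwise defer to the human reviewer callback."""
    if confidence >= threshold:
        return Decision(prediction, "model")
    return Decision(human_review(prediction, confidence), "human")

# Hypothetical reviewer that overrides uncertain denials.
def reviewer(prediction, confidence):
    return "approve" if prediction == "deny" else prediction

auto = decide("approve", 0.97, reviewer)
escalated = decide("deny", 0.62, reviewer)
print(auto, escalated)
```

Recording who made each decision, as the `decided_by` field does, supports the clear lines of responsibility listed above.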

Accountability and Liability

Determining responsibility when AI systems make mistakes or cause harm is a complex legal and ethical challenge that needs to be addressed proactively to achieve Responsible AI.

- Example: When an autonomous vehicle causes an accident, determining liability (manufacturer, software developer, or owner) requires careful consideration of legal and ethical frameworks.

- Mitigation strategies:

  - Developing clear legal frameworks and regulations for AI systems to define responsibilities and liabilities.

  - Establishing mechanisms for redress and compensation when AI systems cause harm.

Promoting Responsible AI Adoption

Widespread adoption of Responsible AI requires a concerted effort involving education, collaboration, and ongoing monitoring.

Education and Awareness

Raising public awareness about the potential benefits and risks of AI is essential for fostering informed discussions and responsible adoption.

- Example: Educational programs in schools and universities can introduce students to the ethical considerations of AI. Public awareness campaigns can do the same for the general public.

- Mitigation strategies: Promoting media literacy and critical thinking skills helps individuals evaluate claims about AI.

Collaboration and Standards

International collaboration among researchers, policymakers, and industry leaders is crucial for establishing ethical guidelines and standards for AI development. This includes creating shared principles and best practices.

- Example: The development of ethical guidelines by organizations like the OECD provides a framework for responsible AI development.

- Mitigation strategies: Industry self-regulation, complemented by government oversight, can help establish standards for AI development and deployment. International cooperation on AI governance is vital.

Continuous Monitoring and Evaluation

Regularly monitoring and evaluating the impact of AI systems is crucial for identifying and addressing potential problems early on. This includes continuous assessment of fairness, bias, and other ethical concerns.

- Example: Regular audits of AI systems for bias and fairness can help identify and address potential problems.

- Mitigation strategies: Building feedback mechanisms into AI systems allows for continuous monitoring and improvement. Ongoing monitoring of performance and impact is vital to ensure Responsible AI development and deployment.
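A feedback mechanism of this kind can be as simple as comparing recent performance against a baseline and flagging the system for review when it degrades. The sketch below is one possible shape for such a monitor. The class name, the window size, and the tolerance are assumptions made for illustration, and the same pattern could track a fairness metric instead of accuracy.

```python
from collections import deque

class AccuracyMonitor:
    """Tracks accuracy over the most recent outcomes and flags degradation."""

    def __init__(self, baseline, window=100, tolerance=0.05):
        self.baseline = baseline
        self.tolerance = tolerance
        self.outcomes = deque(maxlen=window)  # old outcomes drop off automatically

    def record(self, prediction, actual):
        self.outcomes.append(prediction == actual)

    def recent_accuracy(self):
        return sum(self.outcomes) / len(self.outcomes) if self.outcomes else None

    def needs_review(self):
        """True once a full window falls below baseline minus tolerance."""
        full = len(self.outcomes) == self.outcomes.maxlen
        return full and self.recent_accuracy() < self.baseline - self.tolerance

# Hypothetical feedback stream: 7 correct predictions, then 3 wrong ones.
monitor = AccuracyMonitor(baseline=0.90, window=10)
for predicted, actual in [(1, 1)] * 7 + [(1, 0)] * 3:
    monitor.record(predicted, actual)

print(monitor.recent_accuracy(), monitor.needs_review())
```

Waiting for a full window before raising a flag avoids alarms from a handful of early outcomes, at the cost of a short delay before problems are detected.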

Conclusion

Developing and deploying Responsible AI requires a multifaceted approach that addresses ethical concerns, promotes transparency, and ensures accountability. By understanding the risks associated with unfettered AI learning and proactively implementing mitigation strategies, we can harness the transformative power of AI while minimizing its potential harms. The path to a future where AI benefits all of humanity lies in our collective commitment to developing and adopting Responsible AI. Let's work together to shape a future where AI truly serves humanity.

Featured Posts

-

Foire Au Jambon 2025 Explosion Des Frais D Organisation Et Deficit Bayonne Doit Elle Assumer Seule

May 31, 2025

Foire Au Jambon 2025 Explosion Des Frais D Organisation Et Deficit Bayonne Doit Elle Assumer Seule

May 31, 2025 -

Live The Good Life Practical Tips For A Fulfilling Existence

May 31, 2025

Live The Good Life Practical Tips For A Fulfilling Existence

May 31, 2025 -

Analysis Rbcs Earnings Miss And The Implications For Loan Portfolio

May 31, 2025

Analysis Rbcs Earnings Miss And The Implications For Loan Portfolio

May 31, 2025 -

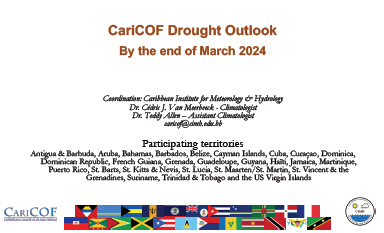

1968 And 2024 A Spring Comparison And Summer Drought Outlook

May 31, 2025

1968 And 2024 A Spring Comparison And Summer Drought Outlook

May 31, 2025 -

Premiera Singla Flowers Miley Cyrus Co Wiemy O Nowej Plycie

May 31, 2025

Premiera Singla Flowers Miley Cyrus Co Wiemy O Nowej Plycie

May 31, 2025