Exploring The Boundaries Of AI Learning: Towards Responsible AI Development And Deployment

Artificial intelligence (AI) is rapidly transforming our world, affecting everything from healthcare and finance to transportation and entertainment. That rapid progress calls for a critical examination of its ethical and societal implications, and the need for responsible AI development and deployment has never been more urgent. This article explores the boundaries of AI learning and the considerations needed to ensure that AI technologies are developed and used responsibly, ethically, and safely. We examine the challenges posed by AI bias, the "black box" problem, and data privacy concerns, and outline strategies for promoting responsible AI development practices.

Understanding the Challenges of AI Learning:

The immense potential of AI learning is tempered by significant challenges that demand careful consideration. These challenges are intricately linked and necessitate a multifaceted approach to responsible AI development.

Bias in AI Datasets and Algorithms:

AI systems are trained on data, and if that data reflects existing societal biases, the resulting AI will likely perpetuate and even amplify those biases. This algorithmic bias can lead to unfair or discriminatory outcomes in various applications.

- Examples: Facial recognition systems exhibiting higher error rates for people of color; loan applications unfairly rejected based on biased algorithms; recruitment tools showing gender or racial bias.

- Consequences: Erosion of trust in AI systems; perpetuation of inequality; legal and ethical ramifications.

- Mitigation Strategies:

  - Careful data curation and preprocessing to identify and mitigate biases.

  - Employing data augmentation techniques to balance datasets.

  - Utilizing algorithmic fairness techniques to design algorithms that minimize discriminatory outcomes.

  - Implementing bias detection tools to monitor and identify biases in AI systems.
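As a rough illustration of what a bias detection check can look like, the sketch below computes per-group selection rates and the demographic parity difference, one common fairness metric among several. The function names and the toy data are hypothetical and chosen only for illustration; a real audit would use the system's actual predictions and would likely consider more than one metric.

```python
from collections import defaultdict


def selection_rates(predictions, groups):
    """Return the fraction of positive (1) predictions for each group."""
    totals = defaultdict(int)
    positives = defaultdict(int)
    for pred, group in zip(predictions, groups):
        totals[group] += 1
        if pred == 1:
            positives[group] += 1
    return {g: positives[g] / totals[g] for g in totals}


def demographic_parity_difference(predictions, groups):
    """Gap between the highest and lowest group selection rates.

    0.0 means every group receives positive outcomes at the same rate;
    larger values indicate a larger disparity.
    """
    rates = selection_rates(predictions, groups)
    return max(rates.values()) - min(rates.values())


# Hypothetical toy data: 1 = approved, 0 = rejected.
preds = [1, 1, 1, 0, 1, 0, 0, 0]
groups = ["a", "a", "a", "a", "b", "b", "b", "b"]

gap = demographic_parity_difference(preds, groups)
print(selection_rates(preds, groups))  # group "a": 0.75, group "b": 0.25
print(gap)                             # 0.5 on this toy data
```

A large gap does not by itself prove discrimination, but it is a signal that the data and the model deserve closer review.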

The Black Box Problem and Explainability:

Many advanced AI models, particularly deep learning systems, are often referred to as "black boxes" due to their complexity and opacity. Understanding how these models arrive at their decisions is a significant challenge. This lack of explainability undermines trust and accountability.

- Challenges: Difficulty in debugging AI systems; inability to identify and correct errors; lack of transparency hindering public acceptance.

- Importance of XAI: Explainable AI (XAI) aims to develop more transparent and interpretable AI models, enabling us to understand their decision-making processes.

- Techniques for Increasing Model Interpretability:

  - LIME (Local Interpretable Model-agnostic Explanations): Approximates the model's behavior locally.

  - SHAP (SHapley Additive exPlanations): Provides explanations based on game theory.
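To make the game-theoretic idea behind SHAP more concrete, here is a minimal sketch that computes exact Shapley values for a tiny model by enumerating every feature subset. It is not the SHAP library's API: the helper `shapley_values`, the `toy_model`, and the all-zero baseline are assumptions made for illustration, and "missing" features are simply replaced by baseline values. Exact enumeration is exponential in the number of features, which is why practical tools rely on approximations.

```python
from itertools import combinations
from math import factorial


def shapley_values(model, x, baseline):
    """Exact Shapley attribution of model(x) - model(baseline) to each feature.

    Features outside a coalition take their value from `baseline`.
    """
    n = len(x)

    def value(coalition):
        z = [x[i] if i in coalition else baseline[i] for i in range(n)]
        return model(z)

    phi = [0.0] * n
    for i in range(n):
        others = [j for j in range(n) if j != i]
        for k in range(n):  # coalition sizes 0 .. n-1
            weight = factorial(k) * factorial(n - k - 1) / factorial(n)
            for subset in combinations(others, k):
                s = set(subset)
                # Marginal contribution of feature i to this coalition.
                phi[i] += weight * (value(s | {i}) - value(s))
    return phi


def toy_model(z):
    # Hypothetical model with one interaction term (z[0] * z[2]).
    return 2.0 * z[0] + 1.0 * z[1] + z[0] * z[2]


x = [1.0, 2.0, 3.0]
baseline = [0.0, 0.0, 0.0]
phi = shapley_values(toy_model, x, baseline)
print(phi)
```

On this toy model the attributions come out to approximately 3.5, 2.0, and 1.5: the interaction term is split evenly between the two features involved, and the values sum to the difference between the prediction and the baseline output.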

Data Privacy and Security Concerns:

Training sophisticated AI models often requires vast amounts of data, including personal information. This raises significant data privacy and security concerns.

- Ethical and Legal Implications: Violation of individual privacy rights; potential for misuse of sensitive data.

- Data Anonymization and Privacy-Preserving Techniques: Techniques like differential privacy and federated learning help protect individual privacy while still enabling AI development.

- Risks of Data Breaches: Compromised data can lead to identity theft, financial losses, and reputational damage. Robust cybersecurity measures are crucial. Compliance with regulations like GDPR and CCPA is essential.
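One of the privacy-preserving techniques named above, differential privacy, can be sketched with the Laplace mechanism applied to a counting query. This is a minimal illustration under stated assumptions: the function names, the `records` data, and the choice of epsilon are hypothetical, and a production system would also need to track the privacy budget across queries and use a vetted library rather than hand-rolled noise.

```python
import random


def laplace_noise(scale, rng):
    """Sample Laplace(0, scale) as the difference of two exponential draws."""
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)


def private_count(records, predicate, epsilon, rng):
    """Return a noisy count of the records matching `predicate`.

    Adding or removing one record changes a count by at most 1, so the
    sensitivity is 1 and the Laplace noise scale is 1 / epsilon.
    """
    if epsilon <= 0:
        raise ValueError("epsilon must be positive")
    true_count = sum(1 for record in records if predicate(record))
    sensitivity = 1.0
    return true_count + laplace_noise(sensitivity / epsilon, rng)


def is_senior(record):
    return record["age"] >= 65


# Hypothetical dataset: one record per person, ages 0 through 99.
records = [{"age": age} for age in range(100)]

rng = random.Random(0)  # seeded only to make the sketch reproducible
print(private_count(records, is_senior, epsilon=1.0, rng=rng))
```

Smaller values of epsilon add more noise and give stronger privacy; larger values give more accurate answers with weaker guarantees.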

Promoting Responsible AI Development Practices:

Addressing the challenges of AI learning requires a proactive and multifaceted approach focused on responsible AI development practices.

Establishing Ethical Guidelines and Frameworks:

The development and deployment of AI must be guided by strong ethical principles. This necessitates the establishment of clear ethical guidelines and frameworks.

- Importance of Ethical Guidelines: They help ensure that AI systems are developed and used in ways that align with societal values and human rights.

- Existing Frameworks: Asilomar AI Principles, OECD Principles on AI, and various national and regional initiatives provide valuable guidance.

- Ethical Review Boards: Independent review boards can assess the ethical implications of AI projects before deployment.

- Responsible Innovation Processes: Incorporating ethical considerations throughout the entire AI lifecycle, from design to deployment.

Building Robust and Secure AI Systems:

Responsible AI development also requires building robust and secure AI systems that are resilient to attacks and errors.

- Techniques for Building Robust AI Systems: Adversarial training, model validation, and error detection mechanisms.

- Rigorous Testing and Validation: Thorough testing is crucial to identify and mitigate potential biases, vulnerabilities, and errors.

- Cybersecurity Measures: Protecting AI systems from malicious attacks and data breaches is paramount.
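Adversarial training, mentioned above, depends on generating inputs that a model handles badly. The sketch below shows one simple way to do that, the fast gradient sign method (FGSM), on a hand-written logistic regression. The weights, the input, and the step size `eps` are hypothetical values chosen for illustration; real systems would compute gradients with a deep learning framework.

```python
import math


def predict(w, b, x):
    """Probability of the positive class under a logistic regression model."""
    logit = sum(wi * xi for wi, xi in zip(w, x)) + b
    return 1.0 / (1.0 + math.exp(-logit))


def log_loss(w, b, x, y):
    """Cross-entropy loss for a single example with label y in {0, 1}."""
    p = predict(w, b, x)
    return -(y * math.log(p) + (1 - y) * math.log(1 - p))


def fgsm(w, b, x, y, eps):
    """Shift each feature by eps in the direction that increases the loss.

    For logistic regression, the gradient of the loss with respect to the
    input is (p - y) * w.
    """
    p = predict(w, b, x)
    grad = [(p - y) * wi for wi in w]
    signs = [(g > 0) - (g < 0) for g in grad]
    return [xi + eps * s for xi, s in zip(x, signs)]


# Hypothetical model parameters and a correctly classified example.
w, b = [2.0, -1.0], 0.0
x, y = [1.0, 0.5], 1

x_adv = fgsm(w, b, x, y, eps=0.5)
print(log_loss(w, b, x, y), log_loss(w, b, x_adv, y))
```

Adversarial training would then add perturbed examples such as `x_adv`, with their original labels, back into the training set so the model learns to resist them.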

Fostering Collaboration and Transparency:

Responsible AI development and deployment requires collaboration and transparency among researchers, developers, policymakers, and the public.

- Collaboration: Sharing best practices, datasets, and tools to advance responsible AI development.

- Open-Source AI: Promoting the use of open-source tools and datasets to foster transparency and accountability.

- Public Engagement: Involving the public in discussions about the ethical and societal implications of AI.

Conclusion:

The journey towards responsible AI development and deployment is a continuous process requiring vigilance and collaboration. We have explored crucial challenges, including AI bias, the black box problem, and data privacy concerns. By embracing ethical guidelines, building robust and secure systems, and fostering transparency and collaboration, we can mitigate the potential risks and unlock the transformative benefits of AI. Learn more about ethical AI frameworks and best practices to contribute to a future where AI benefits all of humanity.

Featured Posts

-

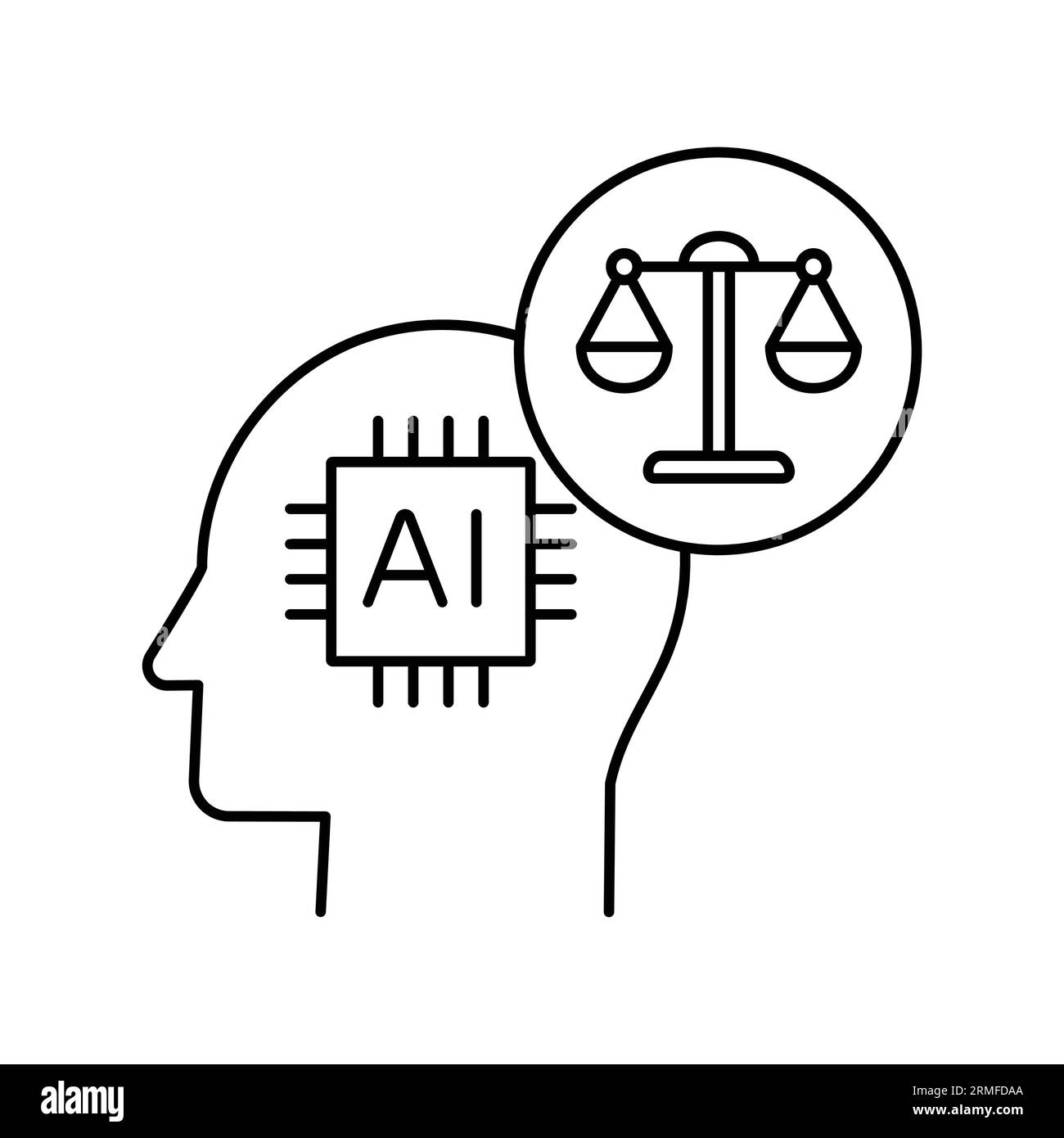

Saturday May 3rd Nyt Mini Crossword Solutions

May 31, 2025

Saturday May 3rd Nyt Mini Crossword Solutions

May 31, 2025 -

Padel Court Development Update Bannatyne Health Club Ingleby Barwick

May 31, 2025

Padel Court Development Update Bannatyne Health Club Ingleby Barwick

May 31, 2025 -

Nowy Singiel Miley Cyrus Flowers Data Premiery I Szczegoly Albumu

May 31, 2025

Nowy Singiel Miley Cyrus Flowers Data Premiery I Szczegoly Albumu

May 31, 2025 -

Rosemary And Thyme A Culinary Guide To Herb Gardening

May 31, 2025

Rosemary And Thyme A Culinary Guide To Herb Gardening

May 31, 2025 -

Cd Projekt Reds Cyberpunk 2 Development Updates And Future Plans

May 31, 2025

Cd Projekt Reds Cyberpunk 2 Development Updates And Future Plans

May 31, 2025