Holding Tech Companies Accountable For Algorithmically Fueled Mass Shootings


The Role of Algorithms in Spreading Extremist Ideology

Recommendation algorithms, the unseen systems that shape our online experiences, are designed to keep us engaged. In practice, optimizing for engagement can create echo chambers and radicalization pipelines. Because these data-driven systems reward whatever holds attention, they can inadvertently amplify extremist viewpoints, connect like-minded individuals, and push them further down the rabbit hole of radicalization. "Filter bubbles," in which users see mostly information that confirms their existing biases, compound the problem.

- Examples of algorithms: Facebook's News Feed algorithm, YouTube's recommendation engine, and Twitter's trending topics all play a role in shaping what users see and interact with, potentially leading to exposure to extremist content.

- Statistics on reach: Studies suggest that these algorithms can substantially extend the reach of extremist content, particularly among vulnerable individuals who may be more susceptible to radicalization. (Specific statistics should be inserted here, referencing reputable sources.)

- Case studies: Analyzing the online activities of individuals who committed mass shootings reveals patterns of exposure to extremist ideologies through algorithmic amplification. (Again, cite specific, credible sources here.)

Legal and Ethical Responsibilities of Tech Companies

Existing legal frameworks, such as Section 230 of the Communications Decency Act in the US, largely shield tech companies from liability for content their users post. This makes it legally difficult to hold these companies accountable for algorithmically fueled mass shootings. The ethical questions are further complicated by the tension between upholding free speech principles and preventing the spread of content that incites violence.

- Existing Laws and Limitations: Section 230 protects online platforms from liability for content posted by users, which makes it difficult to sue them directly for enabling the spread of extremist views. The protection has limits: it does not cover content a platform itself creates or materially helps develop, and it carves out areas such as federal criminal law and intellectual property. Whether it also shields a platform's own algorithmic recommendations remains legally unsettled.

- Arguments for and against legal responsibility: Proponents of holding tech companies accountable argue that their algorithms directly contribute to the spread of violence and that they have a moral and ethical obligation to prevent it. Opponents contend that holding them liable would stifle free speech and create an impossible burden of censorship.

- Potential for New Legislation: The debate is fueling calls for new laws and regulations that would hold tech companies more directly accountable for content moderation and the design of their algorithms. This could include increased transparency requirements, stricter content moderation policies, and potential liability for failing to prevent the spread of extremist content leading to real-world violence.

Mitigating the Risk: Strategies for Prevention

Preventing algorithmically fueled mass shootings requires a multi-pronged approach combining technological solutions, human oversight, and broader societal changes.

- Technological Solutions: More effective AI-powered detection systems are crucial for identifying and removing extremist content at scale.

- Human Oversight: While AI is essential, human review and intervention remain vital to ensure accuracy and contextual understanding in content moderation. This requires significantly increased investment in human moderators.

- Transparency and Accountability: Tech companies must be more transparent about their algorithms and content moderation practices, allowing for independent audits and public scrutiny.

- Media Literacy Education: Equipping individuals with the critical thinking skills to distinguish credible information from disinformation is paramount in preventing online radicalization. Comprehensive media literacy programs in schools and communities are essential.

The Call for Systemic Change: Beyond Individual Accountability

Addressing algorithmically fueled mass shootings requires looking beyond individual accountability and addressing broader societal issues.

- Mental Healthcare: Increased access to affordable and effective mental healthcare is critical in addressing the underlying mental health issues that can contribute to violence.

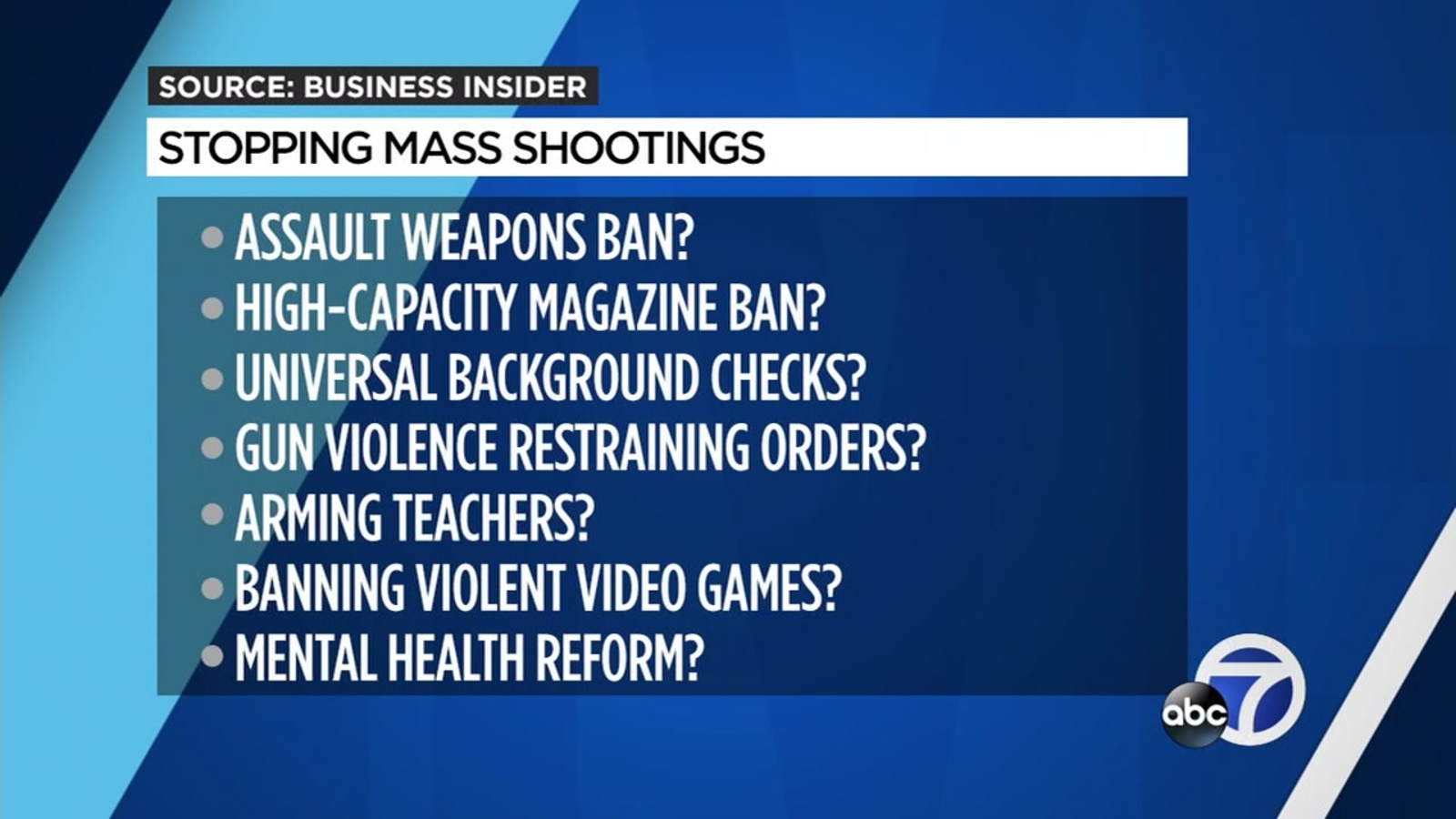

- Gun Control: Stricter gun control measures are essential in limiting access to firearms for individuals prone to violence.

- Social Justice Initiatives: Addressing social inequalities, such as poverty, discrimination, and lack of opportunity, can help reduce the factors that contribute to extremist ideologies and violence.

Holding Tech Companies Accountable for Algorithmically Fueled Mass Shootings – A Path Forward

The evidence strongly suggests a link between algorithms that amplify extremist views and the tragic reality of mass shootings. Tech companies have a moral, ethical, and potentially legal responsibility to mitigate this risk. Doing so requires a combination of technological solutions, better content moderation, greater transparency, and robust media literacy programs, alongside efforts to address the broader societal factors that contribute to violence. Demand greater accountability from tech companies, support legislation aimed at preventing online radicalization, and promote media literacy. Only through collective action can we effectively combat this growing threat and prevent future tragedies fueled by algorithmically amplified extremism. The fight against algorithmically fueled mass shootings is a fight for a safer future; let's fight it together.

Featured Posts

-

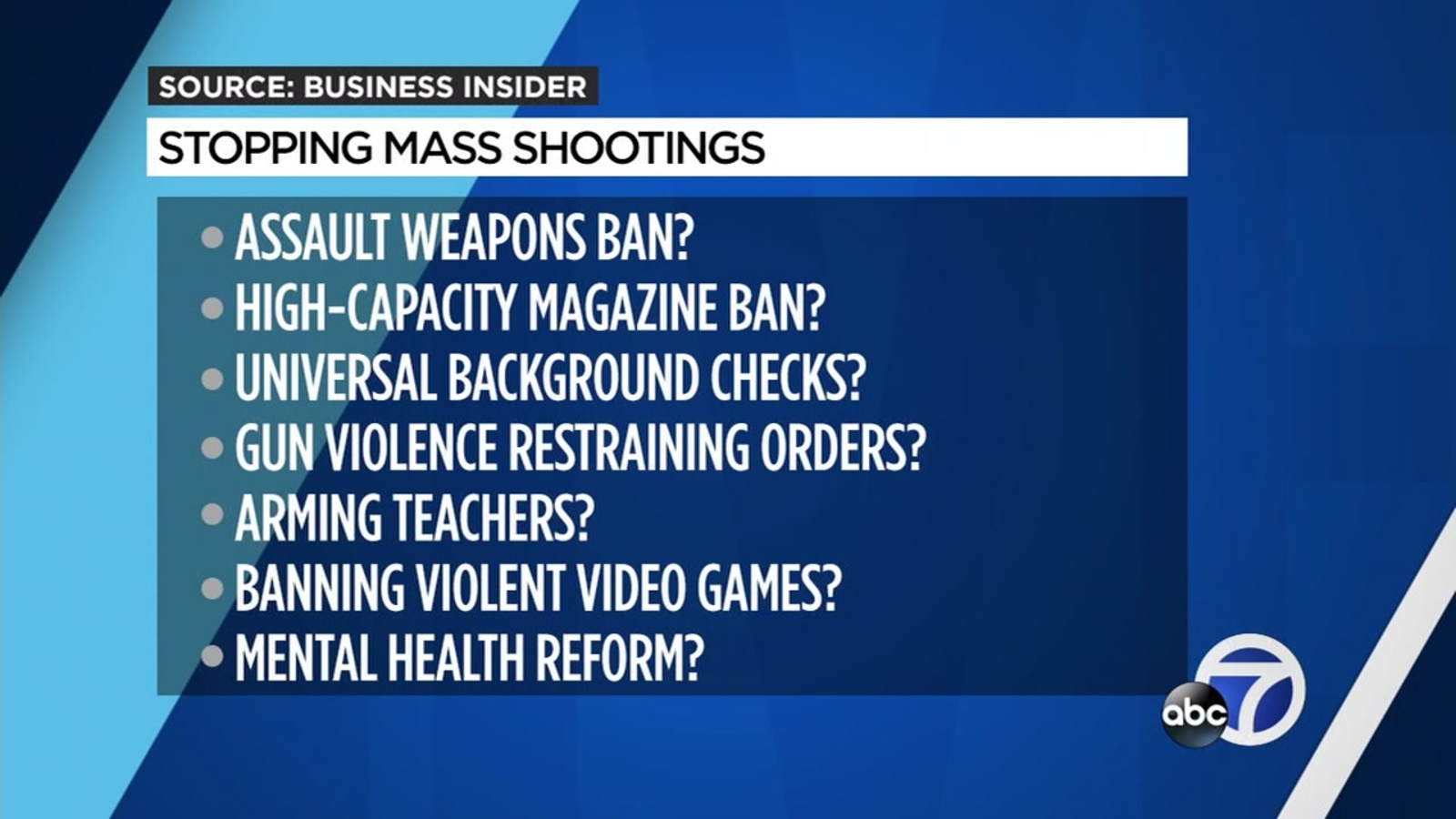

The Potential For Energy Price Increases Under The New Us Policy

May 30, 2025

The Potential For Energy Price Increases Under The New Us Policy

May 30, 2025 -

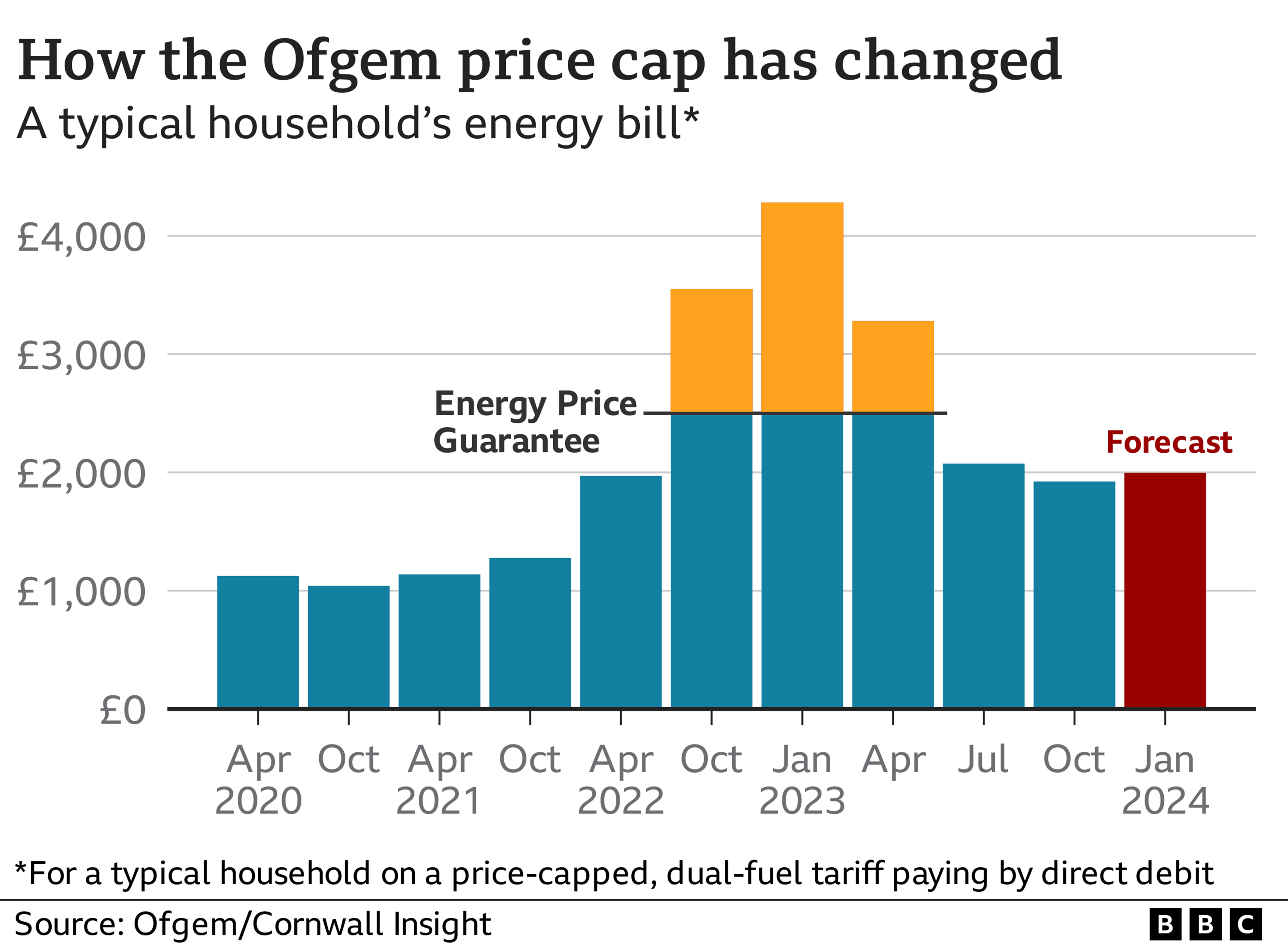

Friday Forecast Continued Drop For Live Music Stocks

May 30, 2025

Friday Forecast Continued Drop For Live Music Stocks

May 30, 2025 -

The Day A Punch Changed Academia Understanding Trumps University Policies

May 30, 2025

The Day A Punch Changed Academia Understanding Trumps University Policies

May 30, 2025 -

8 Ways Trumps Trade War Is Harming Canadas Economy

May 30, 2025

8 Ways Trumps Trade War Is Harming Canadas Economy

May 30, 2025 -

Firmenlauf Augsburg Ergebnisse Und Fotos Vom M Net Lauf

May 30, 2025

Firmenlauf Augsburg Ergebnisse Und Fotos Vom M Net Lauf

May 30, 2025

Latest Posts

-

Hudbay Minerals Staff Evacuated Amidst Flin Flon Wildfires

May 31, 2025

Hudbay Minerals Staff Evacuated Amidst Flin Flon Wildfires

May 31, 2025 -

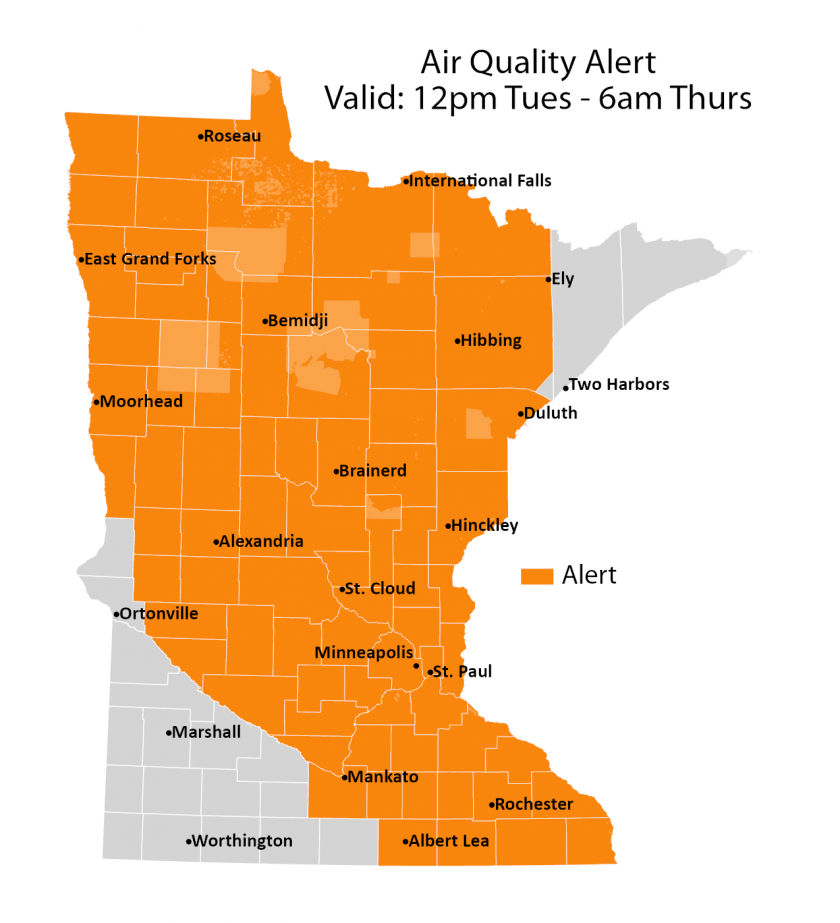

Minnesotas Air Quality Deteriorates Due To Canadian Wildfires

May 31, 2025

Minnesotas Air Quality Deteriorates Due To Canadian Wildfires

May 31, 2025 -

Hotter Temperatures Increase Wildfire Threat In Saskatchewan

May 31, 2025

Hotter Temperatures Increase Wildfire Threat In Saskatchewan

May 31, 2025 -

Air Quality Alert Minnesota Suffers From Canadian Wildfire Smoke

May 31, 2025

Air Quality Alert Minnesota Suffers From Canadian Wildfire Smoke

May 31, 2025 -

Saskatchewan Wildfires Preparing For A More Intense Season

May 31, 2025

Saskatchewan Wildfires Preparing For A More Intense Season

May 31, 2025