Nonprofit Control Ensured: OpenAI's Commitment To Responsible AI

OpenAI's Nonprofit Structure and its Significance

OpenAI began as a nonprofit research company, a crucial element of its initial commitment to responsible AI. This structure was deliberately chosen to prioritize the long-term societal benefit of AI over short-term profit maximization. The nonprofit model offered several key advantages:

- Focus on Societal Benefit: Without the pressure to deliver immediate financial returns, OpenAI could focus its resources on research that addresses fundamental AI safety challenges and potential societal impacts.

- Long-Term Ethical Considerations: The nonprofit structure fostered a culture that prioritized ethical considerations over rapid advancement, allowing for careful consideration of the potential risks associated with increasingly powerful AI systems.

- Increased Transparency and Accountability: A nonprofit organization generally operates under higher levels of transparency and scrutiny, enhancing public accountability and trust.

However, OpenAI later transitioned to a "capped-profit" structure. This shift raised concerns about whether the organization could maintain its initial commitment to responsible AI, but it is accompanied by safeguards designed to keep ethical considerations paramount. Chief among them is a limit on investor returns, intended to ensure that profit does not override the organization's dedication to ethical AI development. The capped-profit model aims to balance responsible innovation with the financial resources necessary for continued research.

OpenAI's Safety Research and Development

OpenAI has made substantial investments in AI safety research, recognizing the potential risks associated with advanced AI systems. This commitment manifests in various key initiatives:

- Alignment Research: This crucial area focuses on ensuring that AI systems' goals align with human values and intentions, preventing unintended consequences arising from misaligned objectives.

- Robustness Research: OpenAI invests heavily in making AI systems more resilient to adversarial attacks, manipulation, and unforeseen circumstances, bolstering their safety and reliability.

- Interpretability Research: Understanding how AI systems arrive at their decisions is essential for building trust and identifying potential biases or flaws. OpenAI's work in this area aims to make AI systems more transparent and accountable.

These ongoing research efforts underscore OpenAI's dedication to developing AI safely and responsibly, mitigating potential risks before they materialize. AI safety, alignment, robustness, and interpretability are central to its mission.

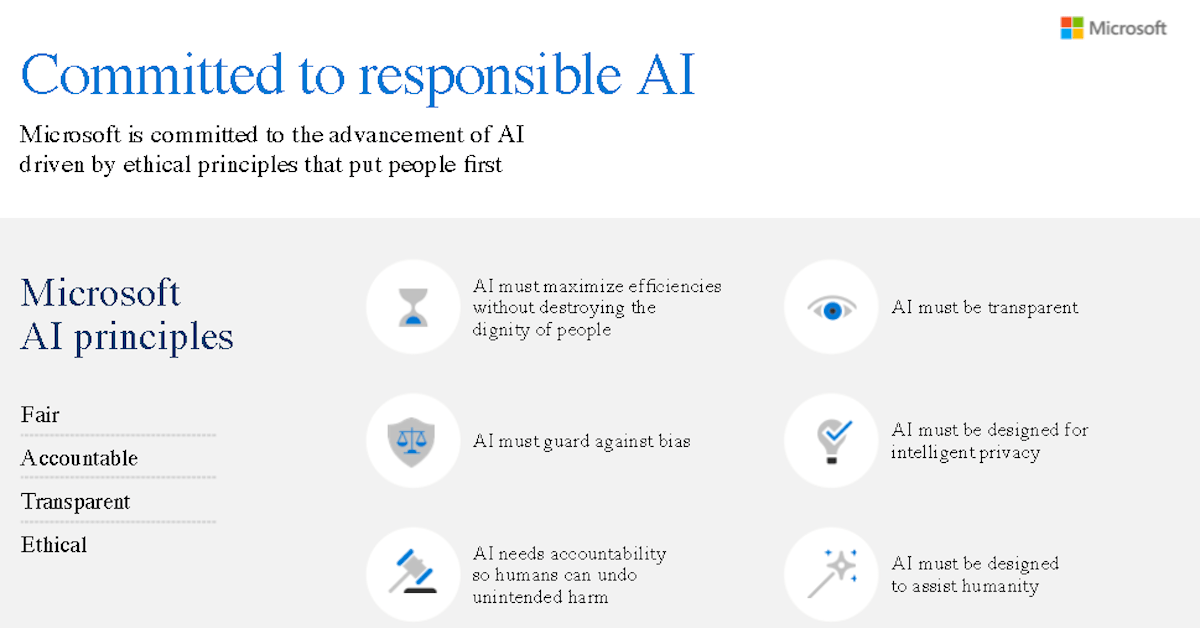

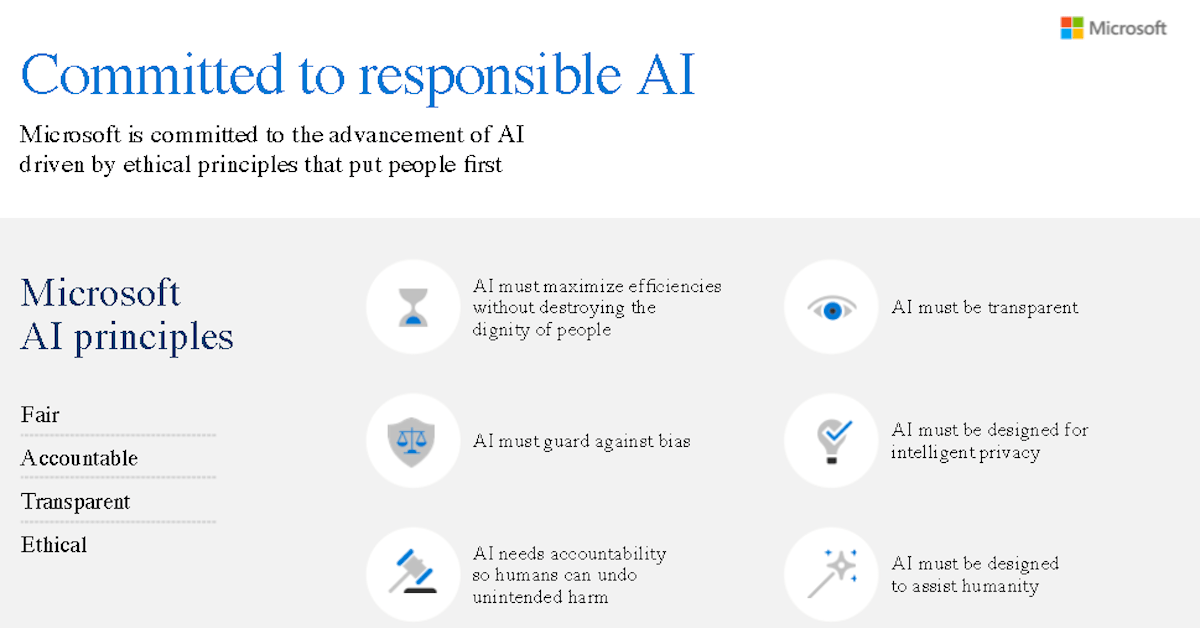

OpenAI's Commitment to Transparency and Collaboration

Transparency is a cornerstone of responsible AI development. OpenAI actively shares its research findings with the broader AI community through publications, open-source tools, and collaborative projects. This approach fosters trust, enables peer review, and promotes collective progress towards responsible AI.

- Open-Source Initiatives: By releasing certain tools and datasets openly, OpenAI facilitates broader participation in AI safety research and enables independent verification of its findings.

- Collaboration with Stakeholders: OpenAI actively engages with other researchers, organizations, and policymakers to discuss the ethical implications of AI and to shape responsible AI policies. This engagement reflects the view that the challenges presented by advanced AI require a multi-stakeholder response.

This commitment to transparency, open-source practices, and broad collaboration is integral to building a future where AI benefits all of humanity.

Addressing Criticisms and Future Challenges

Despite its strong commitment to responsible AI, OpenAI faces ongoing challenges and criticisms. Concerns about the transition to a capped-profit model, the potential for misuse of its technology, and the limitations of current safety research are valid and require continuous attention. The rapidly evolving nature of AI technology also presents new challenges that demand constant adaptation and innovation in AI ethics and AI governance.

OpenAI's future work will likely focus on refining existing safety techniques, exploring new approaches to AI alignment and interpretability, and actively participating in broader conversations on AI regulation and governance. The future of AI calls for a proactive and adaptable approach to ensuring the responsible development and deployment of AI technologies.

Securing the Future with Nonprofit Control and Responsible AI

OpenAI's journey, from its initial nonprofit structure to its current capped-profit model, exemplifies the ongoing effort to balance innovation with ethical considerations in AI development. Its substantial investment in AI safety research, commitment to transparency and collaboration, and proactive engagement with criticism are crucial steps in building trust and ensuring responsible AI. While the transition to a capped-profit structure raises questions, the safeguards OpenAI has implemented underscore its continued dedication to responsible AI. The ongoing commitment to nonprofit control, in spirit if not in strict legal structure, remains vital for ensuring that AI development prioritizes societal benefit over profit.

Learn more about OpenAI's commitment to responsible AI, and join the conversation on responsible AI development. Explore the future of nonprofit control in AI and its crucial role in shaping a safer and more equitable future with AI.
