OpenAI Facing FTC Investigation: The Future Of AI Regulation

The FTC's Concerns Regarding OpenAI

The FTC's investigation into OpenAI centers on several areas of concern, each of which points to the need for stronger AI regulation and ethical guidelines.

Data Privacy Violations

OpenAI's models are trained on vast datasets, raising significant concerns about potential violations of consumer privacy laws. The sheer volume of data used, coupled with the potential for the inclusion of personally identifiable information (PII), necessitates a thorough examination of OpenAI's data handling practices. Specific concerns include:

- Unlawful collection of personal data: The FTC is likely investigating whether OpenAI obtained consent for the personal data used to train its models, and whether this collection complies with legislation such as the General Data Protection Regulation (GDPR) in Europe and the California Consumer Privacy Act (CCPA) in the US.

- Insufficient user consent: Even if data was legally obtained, the FTC might investigate whether users were adequately informed about how their data would be used in training AI models and whether they provided truly informed consent.

- Lack of data security measures: The investigation will likely scrutinize the security measures employed by OpenAI to protect the massive datasets used to train its AI, looking for vulnerabilities that could lead to data breaches and misuse.

These data privacy issues are not unique to OpenAI; they represent a broader challenge for the entire AI industry, underscoring the need for robust data protection regulations.
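As a rough illustration of what one data handling safeguard could look like, the sketch below redacts two common kinds of PII from text before it is stored or used for training. It is a minimal example under stated assumptions, not a description of OpenAI's actual pipeline: the function name `redact_pii`, the two patterns, and the placeholder tokens are all hypothetical, and simple regular expressions like these miss many real-world forms of PII.

```python
import re

# Hypothetical patterns for illustration only; real PII detection needs far
# broader coverage (names, addresses, IDs, international phone formats).
EMAIL = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
PHONE = re.compile(r"\b\d{3}[-.\s]\d{3}[-.\s]\d{4}\b")  # e.g. 555-123-4567


def redact_pii(text: str) -> str:
    """Replace email addresses and US-style phone numbers with placeholders."""
    text = EMAIL.sub("[EMAIL]", text)
    return PHONE.sub("[PHONE]", text)


redacted = redact_pii("Contact jane.doe@example.com or 555-123-4567.")
print(redacted)  # Contact [EMAIL] or [PHONE].
```

Pattern-based redaction of this kind is only a first filter. It does not address consent or lawful collection, which are the questions the investigation is described as raising.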

Algorithmic Bias and Discrimination

Another key area of the FTC's investigation is the potential for algorithmic bias embedded within OpenAI's models. AI models learn from the data they are trained on, and if that data reflects existing societal biases, the resulting AI system may perpetuate and even amplify those biases. This can lead to discriminatory outcomes in various applications, such as:

- Loan applications: Biased AI could unfairly deny loans to specific demographics.

- Hiring processes: AI-powered recruitment tools might discriminate against certain candidates based on factors like gender or race.

- Criminal justice: Biased algorithms could lead to unfair sentencing or profiling.

The potential societal impact of biased AI is profound, highlighting the importance of fairness and accountability in AI development. The FTC's investigation will likely assess OpenAI's efforts to mitigate bias in its models and determine whether sufficient steps have been taken to ensure fair and equitable outcomes.
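One simple way to make "fair and equitable outcomes" measurable is to compare approval rates across groups. The sketch below is a minimal example with made-up data: the group labels, the sample decisions, and the function names are hypothetical, and the 0.8 threshold is borrowed from the "four-fifths" rule of thumb used in US employment-selection guidance. Nothing in the source says the FTC applies this particular metric.

```python
from collections import defaultdict


def approval_rates(decisions):
    """Map each group to its share of approved decisions.

    `decisions` is an iterable of (group, approved) pairs.
    """
    totals = defaultdict(int)
    approved = defaultdict(int)
    for group, ok in decisions:
        totals[group] += 1
        if ok:
            approved[group] += 1
    return {group: approved[group] / totals[group] for group in totals}


def disparate_impact_ratio(rates):
    """Lowest group approval rate divided by the highest one."""
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # nobody was approved, so no group is favoured
    return min(rates.values()) / highest


# Made-up loan decisions: group A approved 8 of 10, group B approved 5 of 10.
sample = (
    [("A", True)] * 8 + [("A", False)] * 2
    + [("B", True)] * 5 + [("B", False)] * 5
)

rates = approval_rates(sample)
ratio = disparate_impact_ratio(rates)
flagged = ratio < 0.8  # assumed threshold, see the note above
print(rates, round(ratio, 3), flagged)
```

A ratio well below 1 does not prove discrimination on its own, but it is the kind of signal an audit would follow up on.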

Misleading Marketing and Consumer Protection

The FTC's investigation also extends to OpenAI's marketing claims about the capabilities and safety of its AI models. The question is whether OpenAI has made misleading claims about the accuracy, reliability, and safety of its technology, potentially violating consumer protection laws. Areas of scrutiny include:

- Overstating capabilities: The FTC may investigate claims suggesting the AI is more accurate or reliable than it actually is.

- Understating limitations: Similarly, the investigation might examine whether OpenAI adequately disclosed the limitations and potential risks associated with its AI models.

- Failing to warn about potential harm: The FTC will likely evaluate whether OpenAI adequately warned users of the potential for biased outputs or other harmful consequences.

The FTC’s scrutiny of OpenAI's marketing emphasizes the importance of transparency and responsible communication in the AI industry. Clear and accurate information about AI capabilities and limitations is crucial for informed consumer choice and responsible technology adoption.

The Broader Implications for AI Regulation

The FTC's investigation into OpenAI has significant implications for the future of AI regulation globally.

The Need for Comprehensive AI Legislation

The investigation underscores the urgent need for clear and comprehensive AI regulations worldwide. Current laws are often insufficient to address the unique challenges posed by advanced AI systems. Key areas requiring regulation include:

- Data privacy: Stronger data protection laws are needed to ensure the responsible collection, use, and protection of personal data used in AI development.

- Algorithmic transparency: Regulations promoting transparency in algorithms can help identify and mitigate bias.

- Accountability for AI-driven decisions: Clear mechanisms for accountability are crucial to address harms caused by AI systems.

The development of effective AI legislation requires careful consideration of different regulatory approaches, balancing the need for innovation with the imperative to protect individuals and society.

Balancing Innovation and Safety

Creating effective AI regulation requires a delicate balance between fostering innovation and ensuring responsible AI development and deployment. Over-regulation could stifle innovation, but insufficient regulation could lead to significant societal harms. Strategies for achieving this balance include:

- Ethical guidelines: Industry-wide adoption of ethical guidelines for AI development can help guide responsible practices.

- Independent audits: Regular, independent audits of AI systems can help identify and address potential risks.

- Robust testing procedures: Rigorous testing procedures are crucial for ensuring the safety and reliability of AI systems before deployment.

Both industry self-regulation and government oversight are essential to achieving this balance.

The Future of Generative AI

Generative AI models like ChatGPT and DALL-E pose unique regulatory challenges. The ability of these models to create realistic text, images, and other content raises concerns about:

- Deepfakes and misinformation: Generative AI could be used to create convincing but false content, potentially damaging reputations and undermining trust.

- Copyright infringement: The use of copyrighted material in training generative AI models raises significant legal questions.

- Bias amplification: Generative AI models may amplify existing societal biases in their outputs.

Potential regulatory approaches for generative AI include content moderation, watermarking, and transparency requirements regarding the data used in training models. International cooperation is crucial in addressing the global challenges posed by generative AI.

Conclusion

The FTC's investigation into OpenAI highlights the urgent need for robust and comprehensive AI regulation. Developing and deploying powerful AI technologies calls for a proactive and thoughtful approach to data privacy, algorithmic bias, and consumer protection. Failure to establish effective regulatory frameworks could have significant consequences for individuals, society, and the future of AI itself. The time to act is now. Let's work together to ensure the responsible development and use of AI through stronger oversight of OpenAI and broader AI governance.

Featured Posts

-

Fenerbahce De Talisca Tartismasi Ve Tadic Operasyonu Yoenetim Harekete Gecti

May 20, 2025

Fenerbahce De Talisca Tartismasi Ve Tadic Operasyonu Yoenetim Harekete Gecti

May 20, 2025 -

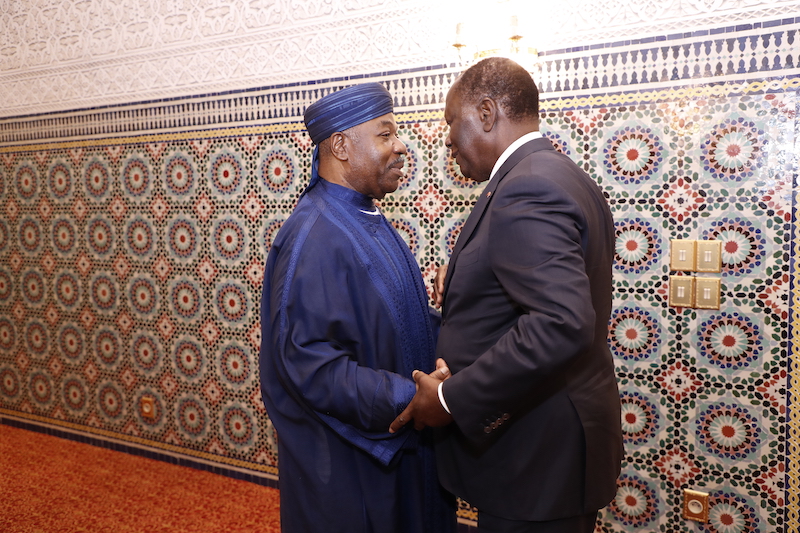

Visite D Amitie Et De Travail Du President Ghaneen Mahama A Abidjan Renforcement Des Liens Diplomatiques

May 20, 2025

Visite D Amitie Et De Travail Du President Ghaneen Mahama A Abidjan Renforcement Des Liens Diplomatiques

May 20, 2025 -

Tyler Bates Wwe Raw Return What To Expect

May 20, 2025

Tyler Bates Wwe Raw Return What To Expect

May 20, 2025 -

Nyt Mini Crossword Answers And Hints For April 20 2025

May 20, 2025

Nyt Mini Crossword Answers And Hints For April 20 2025

May 20, 2025 -

Tyler Bate And Pete Dunne Reunite On Wwe Raw

May 20, 2025

Tyler Bate And Pete Dunne Reunite On Wwe Raw

May 20, 2025