OpenAI's 2024 Event: Easier Voice Assistant Creation For Developers

Streamlined Development Tools and APIs

OpenAI is expected to unveil new and improved APIs and SDKs designed specifically for voice assistant development. The aim is to simplify complex tasks so that developers can build voice assistants regardless of their prior experience with speech SDKs or API integration, opening the field to many more developers.

- Simplified API calls for speech-to-text and text-to-speech: Streamlined calls should reduce the code needed for basic voice assistant functionality, which could cut development time substantially.

- Improved accuracy and speed of speech recognition: If OpenAI's advances deliver faster and more accurate speech recognition, voice assistants will become more responsive and reliable, which is crucial for a positive user experience.

- Pre-built modules for common voice assistant functionalities: Ready-made components for tasks such as intent recognition and dialogue management would let developers integrate core features quickly, spending less time building from scratch and more time on the features that make their assistant unique.

- Enhanced documentation and tutorials for easier integration: Comprehensive documentation and tutorials will make the integration process smoother and more intuitive, even for developers new to OpenAI's tools. This lowers the barrier to entry significantly.

- Support for multiple languages and dialects: Support for a wider range of languages and dialects will broaden the reach of voice assistants, making them accessible to a global audience. This expands market potential.
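To make the idea of pre-built building blocks concrete, here is a minimal Python sketch of a single voice assistant turn: speech in, intent recognition, reply text, speech out. It is not OpenAI's API. The `stt` and `tts` callables are hypothetical stand-ins for whatever speech-to-text and text-to-speech calls a platform provides, and the keyword-matching `recognize_intent` is a deliberately naive placeholder for a real intent model.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from typing import Callable, Optional


@dataclass
class VoiceAssistant:
    # Hypothetical stand-ins for real speech services.
    stt: Callable[[bytes], str]  # speech-to-text: audio bytes -> transcript
    tts: Callable[[str], bytes]  # text-to-speech: reply text -> audio bytes
    # Toy intent table: trigger keyword -> handler that builds the reply text.
    intents: dict[str, Callable[[str], str]] = field(default_factory=dict)
    fallback: str = "Sorry, I didn't catch that."

    def recognize_intent(self, text: str) -> Optional[str]:
        """Naive keyword matcher standing in for a real intent model."""
        words = {w.strip(".,!?") for w in text.lower().split()}
        for keyword in self.intents:
            if keyword in words:
                return keyword
        return None

    def handle_turn(self, audio: bytes) -> bytes:
        """Run one turn: transcribe, pick an intent, reply, synthesize."""
        text = self.stt(audio)
        intent = self.recognize_intent(text)
        reply = self.intents[intent](text) if intent is not None else self.fallback
        return self.tts(reply)


# Usage with fake services that simply decode/encode UTF-8 text.
assistant = VoiceAssistant(
    stt=lambda audio: audio.decode("utf-8"),
    tts=lambda text: text.encode("utf-8"),
    intents={"weather": lambda _text: "It looks sunny today."},
)
print(assistant.handle_turn(b"What's the weather like?").decode("utf-8"))
# prints: It looks sunny today.
```

Keeping the speech services injectable means a simpler official SDK could be swapped in later without touching the intent and reply logic.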

Enhanced Natural Language Processing (NLP) Capabilities

OpenAI’s advancements in NLP are crucial for building intelligent and responsive voice assistants. Expect significant improvements in areas like intent recognition, context understanding, and dialogue management, leading to more natural and engaging conversational AI.

- More accurate intent recognition even with noisy audio input: Improved algorithms will better understand user commands even in challenging acoustic environments, making voice assistants more robust and reliable.

- Improved ability to handle complex conversations and maintain context: The ability to manage complex conversations and remember previous interactions will significantly enhance the user experience, creating more natural-sounding dialogue.

- Better understanding of user nuances and emotions: Advanced NLP models may detect user sentiment and adapt responses accordingly, resulting in more personalized and empathetic interactions.

- Support for personalized and adaptive responses: Voice assistants will become more tailored to individual users, learning preferences and adapting their behavior accordingly.

- Advanced features for handling interruptions and corrections: Seamless handling of interruptions and corrections will make interactions feel more natural and fluid.
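Maintaining context and handling corrections or interruptions come down, at minimum, to managing conversation state. The Python sketch below assumes a chat-style list of `role`/`content` messages and a fixed window of recent turns; the `DialogueContext` class, its method names, and the `max_turns` limit are hypothetical illustrations, not part of any OpenAI SDK.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class DialogueContext:
    """Rolling window of chat-style turns used to interpret new requests."""

    max_turns: int = 10  # hypothetical window size to bound context length
    turns: list[dict[str, str]] = field(default_factory=list)

    def add(self, role: str, content: str) -> None:
        """Append a turn and drop the oldest turns beyond the window."""
        self.turns.append({"role": role, "content": content})
        if len(self.turns) > self.max_turns:
            del self.turns[: len(self.turns) - self.max_turns]

    def correct_last_user_turn(self, content: str) -> None:
        """Replace the latest user turn (e.g. "no, I meant Paris") and
        discard any assistant reply that was based on the old wording."""
        for i in range(len(self.turns) - 1, -1, -1):
            if self.turns[i]["role"] == "user":
                self.turns[i] = {"role": "user", "content": content}
                del self.turns[i + 1 :]
                return
        self.add("user", content)  # no earlier user turn: treat as new input

    def interrupt(self, spoken_chars: int) -> None:
        """On barge-in, keep only the part of the last reply actually played."""
        if self.turns and self.turns[-1]["role"] == "assistant":
            self.turns[-1]["content"] = self.turns[-1]["content"][:spoken_chars]


ctx = DialogueContext(max_turns=4)
ctx.add("user", "Book a table in Rome for two.")
ctx.add("assistant", "Booking a table in Rome for two people.")
ctx.correct_last_user_turn("Sorry, I meant Paris.")
print(ctx.turns)
# prints: [{'role': 'user', 'content': 'Sorry, I meant Paris.'}]
```

Truncating an interrupted reply to what the user actually heard is one simple policy; a production system might instead mark the turn as interrupted.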

Pre-trained Models for Faster Development

OpenAI may release pre-trained models that developers can fine-tune for their specific needs, drastically reducing the time and resources needed to build custom models. This relies on transfer learning: instead of training an entirely new model from scratch, developers start from a model already trained on large amounts of speech and language data and adapt it to their own domain, which can shorten the development cycle significantly.
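Fine-tuning starts with training examples. As a sketch, assuming that the chat-format JSONL accepted by OpenAI's existing fine-tuning endpoint (one JSON object per line, each with a `messages` list) would also apply to any new voice-oriented models, which is not confirmed, transcribed user requests and ideal replies could be prepared as follows. The helper `to_finetune_jsonl` and the sample data are hypothetical.

```python
import json
from typing import Iterable, Tuple


def to_finetune_jsonl(examples: Iterable[Tuple[str, str]], system_prompt: str) -> str:
    """Turn (user_utterance, ideal_reply) pairs into chat-format JSONL,
    one training example per line (assumed format, see lead-in)."""
    lines = []
    for user_text, reply in examples:
        record = {
            "messages": [
                {"role": "system", "content": system_prompt},
                {"role": "user", "content": user_text},
                {"role": "assistant", "content": reply},
            ]
        }
        lines.append(json.dumps(record, ensure_ascii=False))
    return "".join(line + "\n" for line in lines)


# Illustrative pairs, e.g. transcribed voice requests and desired answers.
pairs = [
    ("Turn on the kitchen lights", "Turning on the kitchen lights."),
    ("Set a timer for ten minutes", "Timer set for ten minutes."),
]
jsonl = to_finetune_jsonl(pairs, "You are a concise smart-home voice assistant.")
print(jsonl, end="")
```

Writing short, spoken-style replies in the training data is one way to steer a fine-tuned model toward answers that sound natural when synthesized.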

Improved Speech Synthesis and Recognition

Expect significant enhancements in both speech synthesis (making the voice assistant sound more natural) and speech recognition (improving accuracy and robustness). High-quality audio and natural-sounding voices are key to a positive user experience.

- More natural-sounding voices with improved intonation and emotion: Expect more expressive and human-like voices, enhancing the overall interaction.

- Enhanced ability to handle different accents and dialects: Improved speech recognition will understand a wider range of accents and dialects, making voice assistants more inclusive.

- Reduced latency for real-time interactions: Faster processing will lead to more responsive and fluid conversations.

- Improved noise cancellation and robustness to background sounds: Advanced noise cancellation techniques will ensure reliable performance even in noisy environments.

Conclusion

OpenAI's 2024 event could make voice assistant development easier and faster, putting innovative, engaging voice-powered applications within reach of more developers. If the streamlined tools, enhanced NLP capabilities, and pre-trained models arrive as expected, they would significantly lower the barrier to entry. Watch for the official announcements to see which of these capabilities actually ship and how they could fit into your own voice assistant projects.

Featured Posts

-

Trade Tensions G7 Fails To Address Tariff Concerns

May 26, 2025

Trade Tensions G7 Fails To Address Tariff Concerns

May 26, 2025 -

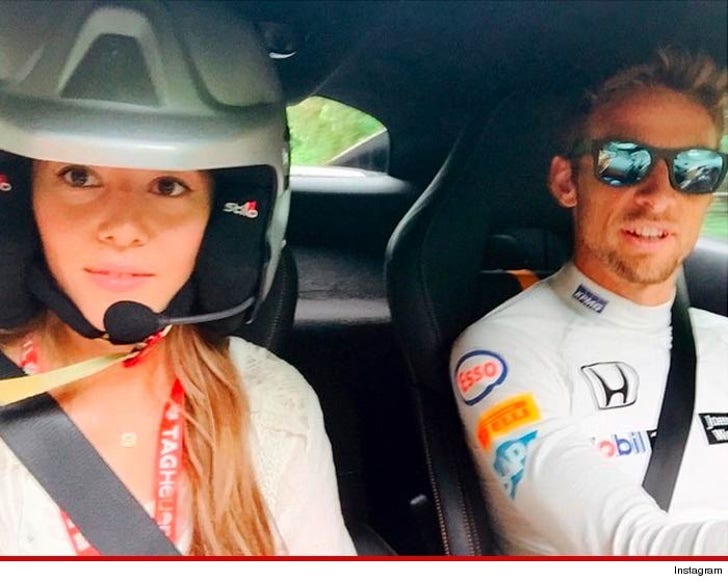

250k London Robbery F1 Star Jenson Button Stays Abroad

May 26, 2025

250k London Robbery F1 Star Jenson Button Stays Abroad

May 26, 2025 -

Zivotni Stil Penzionera Luksuz I Bogatstvo

May 26, 2025

Zivotni Stil Penzionera Luksuz I Bogatstvo

May 26, 2025 -

Naomi Campbells Possible Met Gala 2025 Absence A Rift With Anna Wintour

May 26, 2025

Naomi Campbells Possible Met Gala 2025 Absence A Rift With Anna Wintour

May 26, 2025 -

Voici L Avenir Des Locaux De La Rtbf Au Palais Des Congres De Liege

May 26, 2025

Voici L Avenir Des Locaux De La Rtbf Au Palais Des Congres De Liege

May 26, 2025