OpenAI's ChatGPT Under FTC Scrutiny: Implications For AI Regulation

The FTC's Investigation into ChatGPT: Potential Violations

The FTC's investigation into ChatGPT centers on potential violations related to consumer protection and data privacy. The agency is examining whether OpenAI's practices comply with existing laws and regulations, and its findings could set an important precedent for the broader AI industry.

Unfair or Deceptive Practices

The FTC's concerns regarding unfair or deceptive practices stem from several key areas:

- Misrepresentation of ChatGPT's capabilities: ChatGPT is marketed as a powerful AI, but its limitations are not always clearly communicated. Overstated claims about its accuracy, reliability, and understanding could mislead users. For instance, ChatGPT sometimes generates factually incorrect or nonsensical information, which could have serious consequences depending on the application.

- Lack of transparency about data collection and usage: Concerns exist regarding the extent and nature of data collected by ChatGPT during its operation. Users might not fully understand how their input is used to train the model, potentially raising privacy red flags. The lack of clear and accessible information about data practices could violate consumer protection laws.

- Potential for biased or harmful outputs: ChatGPT's responses are shaped by the data it's trained on, which might contain biases reflecting societal prejudices. This can lead to discriminatory or harmful outputs, posing ethical and legal issues. Examples include generating biased responses based on gender, race, or religion, or producing content that could incite violence or hate speech.

The potential consequences of these violations are significant: OpenAI could face both reputational damage and financial penalties. Precedents from deceptive-advertising cases are relevant here. In particular, the FTC's past actions against companies that made false or unsubstantiated claims about their products' efficacy inform the current investigation.

Data Privacy Concerns

Another critical area of the FTC's investigation is data privacy. Several issues are at stake:

- Unauthorized data collection: The extent to which ChatGPT collects and retains user data, and whether this collection is always authorized and transparent, remains a concern. Collecting personally identifiable information (PII) without informed consent would be a clear violation of data privacy regulations.

- Insufficient data security measures: The robustness of OpenAI's security measures to protect user data from breaches and misuse is being scrutinized. Failure to implement appropriate safeguards could result in unauthorized access to sensitive information, leading to severe consequences for users.

- Potential for misuse of personal information: The possibility of user data being misused or inadvertently revealed through ChatGPT's responses needs to be addressed. This is particularly relevant given the increasing use of AI in sensitive contexts, such as healthcare and finance. Compliance with regulations like GDPR (General Data Protection Regulation) and CCPA (California Consumer Privacy Act) is paramount in mitigating these risks.

The implications of inadequate data handling practices are far-reaching. Data breaches can lead to identity theft, financial loss, and reputational harm for users. Furthermore, non-compliance with GDPR and CCPA can result in substantial fines and legal repercussions for OpenAI.

Broader Implications for AI Regulation

The FTC's investigation into ChatGPT is not an isolated case; it could set a precedent for how AI companies are held accountable. It also highlights the need for a comprehensive regulatory framework to address the unique challenges posed by AI.

The Need for Comprehensive AI Legislation

The current regulatory landscape is ill-equipped to handle the rapid advancements in AI. Several issues demand immediate attention:

- Lack of clear legal frameworks for AI: Existing laws often don't adequately address the specific risks associated with AI systems, leaving a regulatory gap. The complexity of AI technologies makes it challenging to define precise legal boundaries.

- Difficulties in defining and regulating AI systems: The evolving nature of AI makes it difficult to create static regulations that can adapt to ongoing technological changes. Defining what constitutes AI itself and establishing clear regulatory categories present considerable hurdles.

- The need for international cooperation on AI governance: AI transcends national borders, requiring international collaboration to establish consistent and effective regulations. Harmonizing diverse legal standards and approaches will be vital for preventing regulatory arbitrage and ensuring global accountability.

Existing and proposed AI regulations, such as the EU AI Act, provide frameworks, but many challenges remain in translating these broad principles into workable, enforceable regulations specific to generative AI like ChatGPT.

Balancing Innovation and Consumer Protection

The key challenge lies in finding the right balance between fostering AI innovation and ensuring consumer protection. This requires a nuanced approach:

- The importance of fostering AI innovation while mitigating risks: Overly stringent regulations could stifle innovation and hinder the development of beneficial AI technologies. The regulatory framework needs to encourage responsible innovation while addressing potential harms.

- The need for ethical guidelines and responsible AI development practices: Industry self-regulation and ethical guidelines play a crucial role in promoting responsible AI development. These guidelines should address issues like bias, transparency, and accountability.

- The role of industry self-regulation: While government regulation is necessary, industry self-regulation can supplement government oversight, promoting faster adaptation to technological advancements. This requires a high level of commitment and transparency from AI companies.

The potential impact on AI development and market competition is substantial. A heavy regulatory burden could slow down innovation, while inadequate regulation could lead to market failures and consumer harm.

The Future of ChatGPT and AI Development

The FTC scrutiny will likely force significant changes in how OpenAI and other AI companies operate.

Increased Transparency and Accountability

The investigation is expected to drive substantial changes, including:

- Enhanced data privacy measures: OpenAI will likely invest in more robust data security measures and improve data handling practices to ensure compliance with relevant regulations.

- Improved user control over data: Users should have greater control over their data, including the ability to access, correct, and delete their information. Transparency in data usage is also paramount.

- Clearer disclosures about AI limitations and biases: OpenAI and other AI companies will need to provide more transparent information about the limitations and potential biases of their AI systems. This increased transparency will empower users to make informed decisions about how to use these tools.

Increased auditing and compliance costs are likely, which could affect OpenAI's business model and competitiveness.

The Shift Towards Responsible AI Development

The FTC investigation signals a shift towards prioritizing responsible AI development:

- Emphasis on ethical considerations in AI design: Ethical considerations will be central to the design and development of future AI systems. This requires embedding ethical principles into the AI development lifecycle.

- Increased investment in AI safety research: More resources will be dedicated to researching and mitigating the risks associated with AI, including bias, safety, and security.

- Greater collaboration between industry, government, and academia: A collaborative approach involving all stakeholders is essential to address the complex challenges of AI governance and ensure responsible AI development.

This shift will likely lead to changes in industry standards and best practices, affecting the long-term trajectory of AI development and deployment.

Conclusion

The FTC's investigation into OpenAI's ChatGPT is a pivotal moment for the AI industry. It underscores the critical need for robust regulation to balance the incredible potential of AI with the inherent risks to consumers and society. The outcome of this investigation will significantly shape the future of AI development, demanding increased transparency, accountability, and a commitment to responsible AI practices. Moving forward, a proactive and collaborative approach involving regulators, developers, and researchers is essential to navigate the complex challenges of governing AI and ensuring its benefits are widely shared while mitigating potential harms. Further scrutiny of ChatGPT and other AI technologies is crucial to ensure responsible innovation.

Featured Posts

-

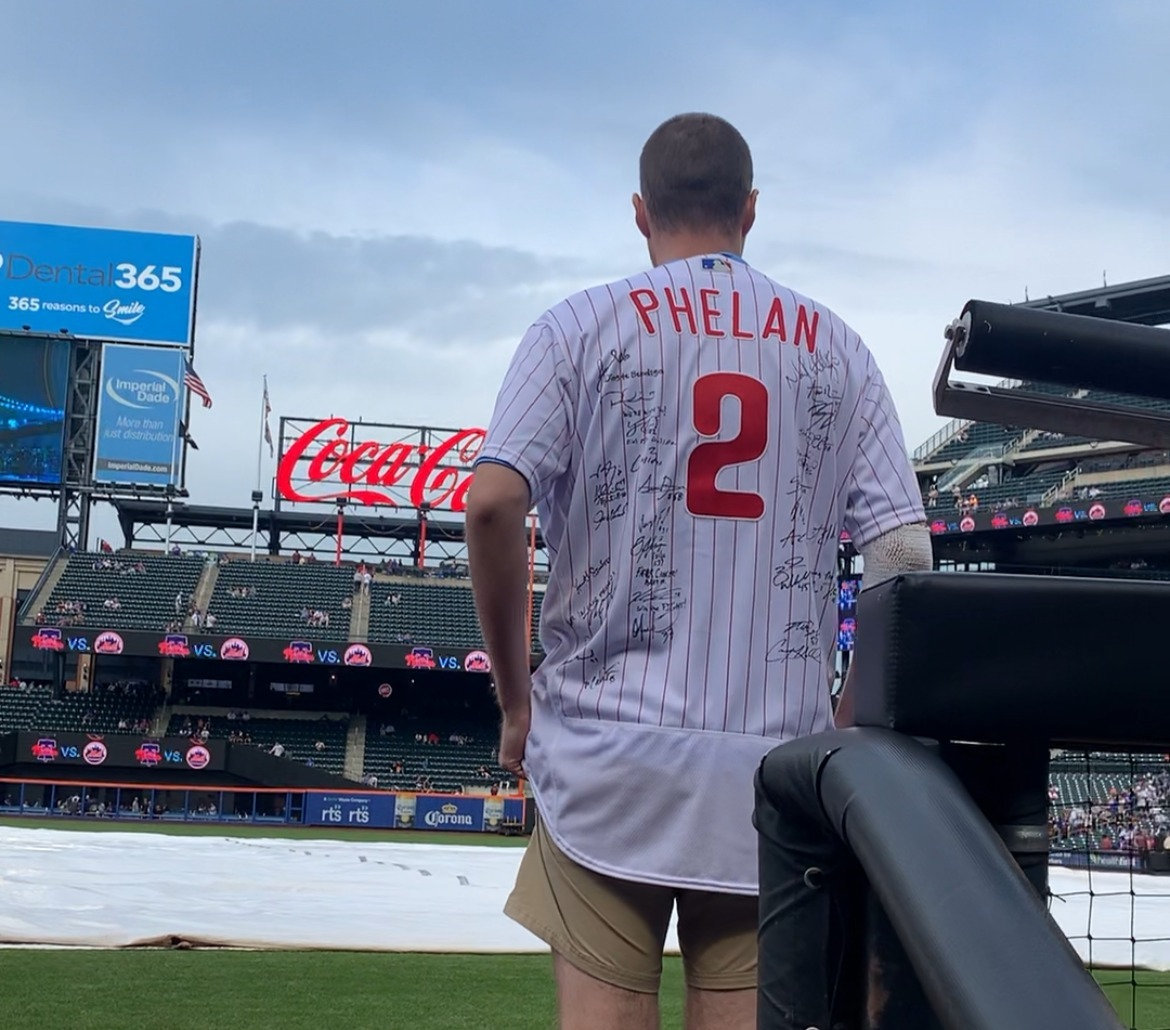

Citi Field Hosts Mets Blue Jays Series

May 19, 2025

Citi Field Hosts Mets Blue Jays Series

May 19, 2025 -

The Fight Over Californias Ev Mandate Exclusive Insights

May 19, 2025

The Fight Over Californias Ev Mandate Exclusive Insights

May 19, 2025 -

Eurovision 2025 Where Will It Be Held And When

May 19, 2025

Eurovision 2025 Where Will It Be Held And When

May 19, 2025 -

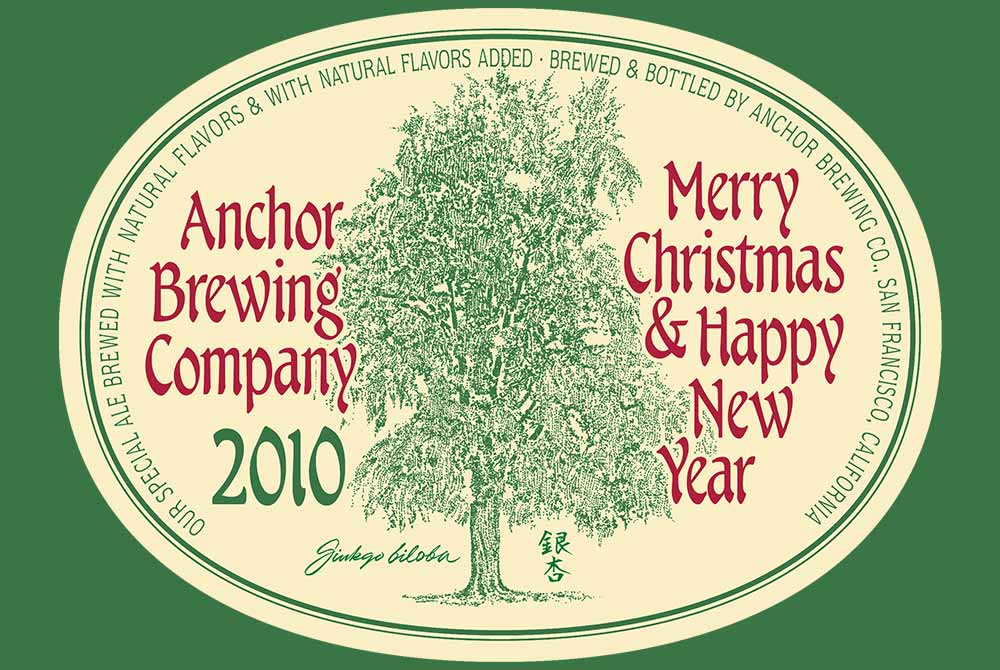

Anchor Brewing Shuttering What Happens Next

May 19, 2025

Anchor Brewing Shuttering What Happens Next

May 19, 2025 -

Cardinal News Roundup Wednesday Afternoon Edition

May 19, 2025

Cardinal News Roundup Wednesday Afternoon Edition

May 19, 2025