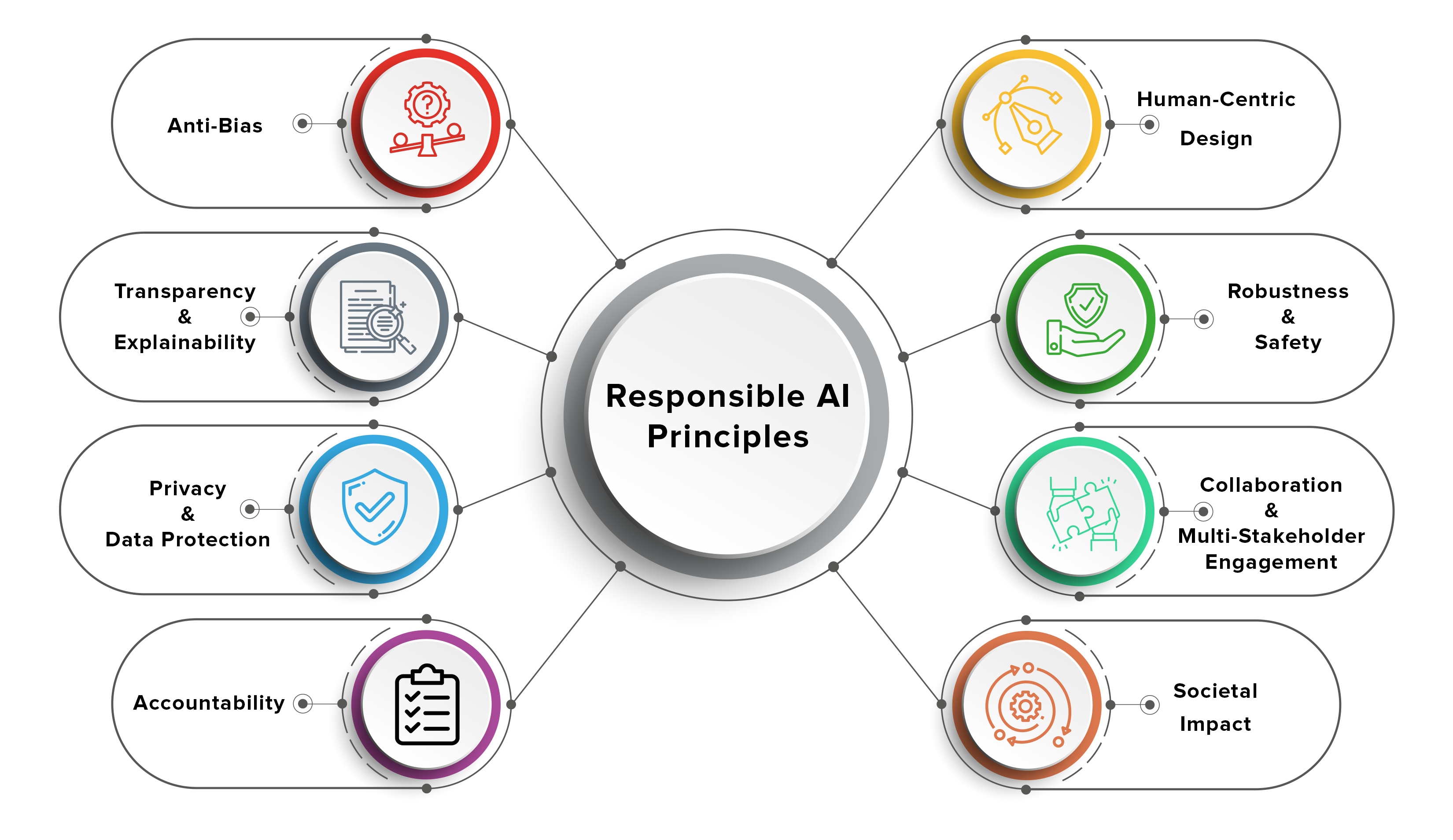

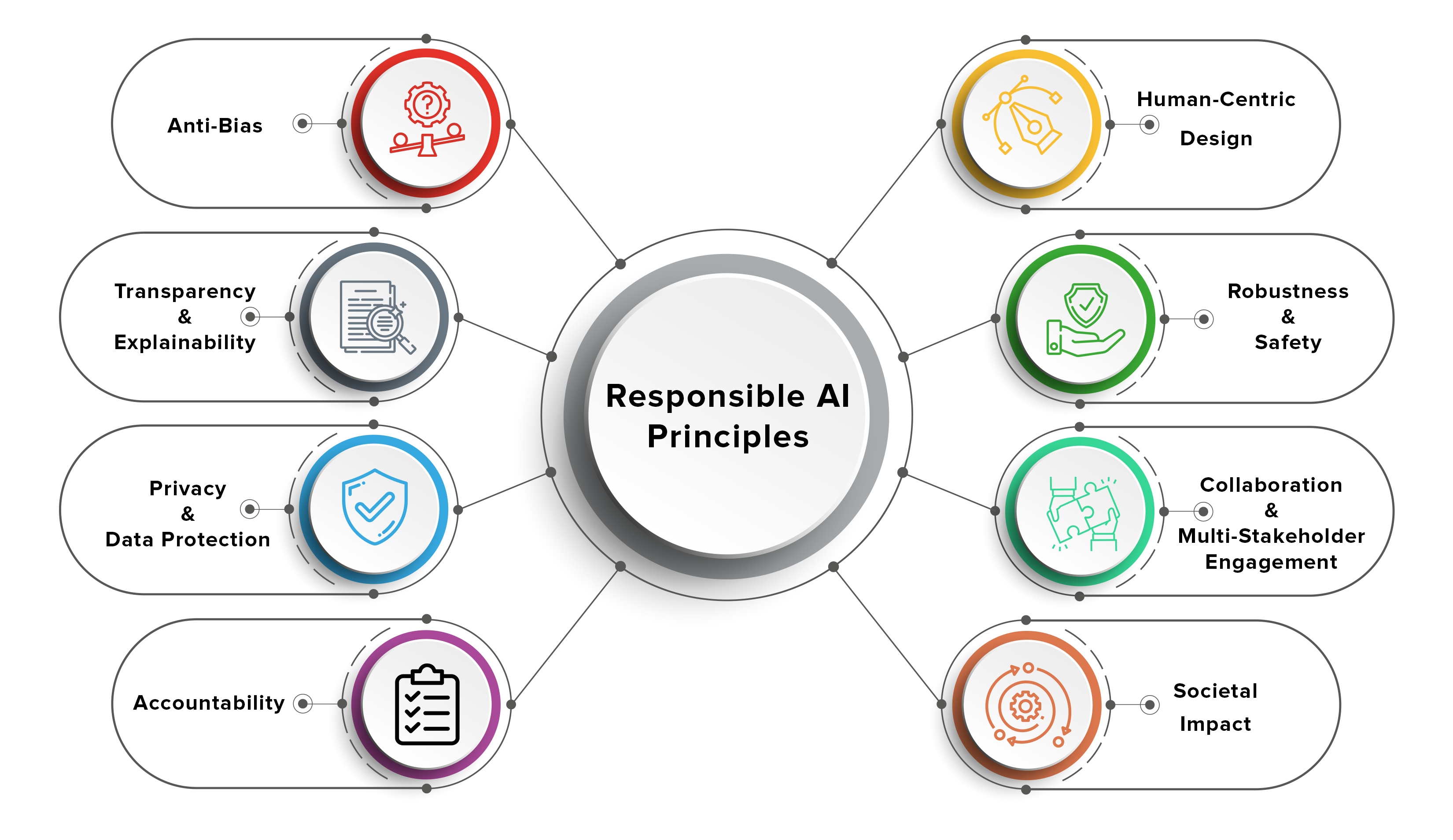

Responsible AI: Acknowledging And Addressing The Limitations Of AI Learning

Data Bias and its Impact on AI Systems

The foundation of any AI system is its data. Unfortunately, the data used to train these systems often reflects existing societal biases, leading to unfair or discriminatory outcomes. This is the core problem of biased AI.

The Problem of Biased Datasets

A biased dataset systematically misrepresents the reality it is meant to model. This misrepresentation can stem from several sources, each producing a different type of bias:

- Sample Bias: Occurs when the sample used to create the dataset is not representative of the population it aims to model. For example, a facial recognition system trained primarily on images of light-skinned individuals may perform poorly on darker-skinned individuals.

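- Measurement Bias: Arises from flaws in how data is collected or measured, including the use of a poor proxy for the quantity of interest. Consider a loan application AI trained on historical approval decisions as a stand-in for creditworthiness. If certain demographics were historically denied loans regardless of creditworthiness, the recorded outcome measures past discrimination rather than ability to repay, and a model trained on it would perpetuate existing inequalities.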

- Confirmation Bias: This bias occurs when data is selected or interpreted to confirm pre-existing beliefs, potentially ignoring contradictory evidence.
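Of the three types above, sample bias is the easiest to check numerically. The following is a minimal sketch, not a complete audit: it compares each group's share of a dataset with its share in a reference population. The function name `representation_gaps`, the group labels, and the 50/50 reference shares are all hypothetical and chosen only for illustration.

```python
from collections import Counter


def representation_gaps(samples, reference_shares):
    """Return dataset share minus reference share for each group.

    samples: iterable of group labels, one per record.
    reference_shares: dict mapping group label -> expected share (0..1).
    A positive gap means the group is over-represented in the dataset.
    """
    counts = Counter(samples)
    total = sum(counts.values())
    return {
        group: counts.get(group, 0) / total - expected
        for group, expected in reference_shares.items()
    }


# Toy data (assumed): 80 records from group "A", 20 from group "B",
# compared against a population assumed to be split evenly.
groups = ["A"] * 80 + ["B"] * 20
gaps = representation_gaps(groups, {"A": 0.5, "B": 0.5})
print(gaps)
```

A large gap for any group is a signal to collect more data for that group or to investigate how the sample was drawn. A check like this says nothing about measurement or confirmation bias, which usually require examining how the data was produced.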

The consequences of biased AI are severe. Biased algorithms can reinforce societal inequalities, lead to inaccurate predictions, and even perpetuate harmful stereotypes. For example, biased algorithms in criminal justice systems can lead to unfair sentencing, while biased hiring tools can perpetuate workplace discrimination.

Mitigating Bias in AI Development

Addressing bias requires proactive strategies throughout the AI development lifecycle:

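- Data Augmentation: Generating synthetic examples for underrepresented groups increases the size and diversity of a dataset and can help reduce sample bias. It does not correct biased labels or flawed measurements, so it works best alongside the other strategies listed here.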

- Algorithmic Fairness Methods: Implementing algorithms designed to minimize bias and ensure fair outcomes is crucial. Techniques like fairness-aware learning and adversarial debiasing are actively being researched and implemented.

- Diverse and Representative Datasets: The data used to train AI systems must reflect the diversity of the population it will impact. This requires careful data collection and curation.

- Rigorous Testing and Evaluation: Thorough testing and evaluation on diverse datasets are essential to identify and address bias before deployment.
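As one illustration of the testing and evaluation step, the sketch below computes per-group selection rates and accuracy for a binary classifier, along with the demographic parity difference (the largest gap in selection rate between groups). This is only one of several fairness metrics and is not prescribed by the strategies above. The function names, labels, and toy predictions are assumptions made for the example.

```python
def group_rates(y_true, y_pred, groups):
    """Per-group selection rate and accuracy for binary (0/1) predictions."""
    stats = {}
    for yt, yp, g in zip(y_true, y_pred, groups):
        s = stats.setdefault(g, {"n": 0, "selected": 0, "correct": 0})
        s["n"] += 1
        s["selected"] += yp            # counts positive predictions
        s["correct"] += int(yt == yp)  # counts correct predictions
    return {
        g: {
            "selection_rate": s["selected"] / s["n"],
            "accuracy": s["correct"] / s["n"],
        }
        for g, s in stats.items()
    }


def demographic_parity_difference(rates):
    """Largest gap in selection rate between any two groups (0 means parity)."""
    selection = [r["selection_rate"] for r in rates.values()]
    return max(selection) - min(selection)


# Toy labels and predictions (assumed) for two groups of four records each.
y_true = [1, 0, 1, 1, 0, 1, 0, 0]
y_pred = [1, 0, 1, 1, 0, 0, 0, 0]
groups = ["A", "A", "A", "A", "B", "B", "B", "B"]

rates = group_rates(y_true, y_pred, groups)
dp_gap = demographic_parity_difference(rates)
print(rates, dp_gap)
```

Reporting metrics per group rather than in aggregate matters because an overall accuracy figure can hide a group for which the model performs much worse.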

The Limits of Generalization and Explainability in AI

Two further limitations concern how well AI models generalize to new data and how well their decisions can be explained.

The Black Box Problem

Many sophisticated AI models, particularly deep learning models, function as "black boxes." Their internal workings are so complex that it's difficult, if not impossible, to understand how they arrive at their conclusions. This lack of transparency presents several challenges:

- Challenges in Interpreting Model Outputs: Understanding why an AI system made a particular decision is crucial for trust and accountability. The inability to interpret outputs limits our ability to identify and correct errors.

- Limitations of Current Explainable AI (XAI) Techniques: While research on explainable AI is rapidly advancing, current techniques still have limitations in explaining the decision-making processes of complex models.

- The Importance of Transparency: Transparency is paramount for building trust in AI systems. Users need to understand how these systems work to ensure they are used responsibly.
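One widely used model-agnostic explanation technique is permutation importance: shuffle one input feature at a time and measure how much the model's accuracy drops. The sketch below is a simplified pure-Python version written for illustration. The `black_box` function is a hypothetical stand-in for an opaque model, and the data is randomly generated.

```python
import random


def permutation_importance(predict, X, y, n_repeats=10, seed=0):
    """Mean drop in accuracy when each feature column is shuffled.

    predict: function mapping one feature row (a list) to a label.
    X: list of feature rows; y: list of true labels.
    """
    rng = random.Random(seed)

    def accuracy(rows):
        return sum(predict(r) == t for r, t in zip(rows, y)) / len(y)

    baseline = accuracy(X)
    importances = []
    for j in range(len(X[0])):
        drops = []
        for _ in range(n_repeats):
            column = [row[j] for row in X]
            rng.shuffle(column)  # break the link between feature j and the label
            shuffled = [row[:j] + [v] + row[j + 1:] for row, v in zip(X, column)]
            drops.append(baseline - accuracy(shuffled))
        importances.append(sum(drops) / n_repeats)
    return importances


# Hypothetical "black box": it relies on feature 0 and ignores feature 1.
def black_box(row):
    return int(row[0] > 0.5)


data_rng = random.Random(1)
X = [[data_rng.random(), data_rng.random()] for _ in range(200)]
y = [black_box(row) for row in X]

importances = permutation_importance(black_box, X, y)
print(importances)
```

Here the ignored feature receives an importance of zero, while the feature the model depends on shows a clear drop in accuracy. The technique reveals which inputs matter, not why, which reflects the limitation of current XAI methods noted above.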

Overfitting and Generalization Issues

AI models are trained on specific datasets. A model that overfits has learned the noise and quirks of its training data along with the underlying pattern, so it performs exceptionally well on that data but poorly on unseen data. This failure to generalize severely limits real-world applicability.

- Techniques to Improve Generalization: Regularization (such as L1 and L2 penalties) discourages overly complex models, while cross-validation estimates performance on held-out data so that overfitting can be detected and settings such as regularization strength can be tuned.

- Importance of Robust Testing on Diverse Datasets: Rigorous testing on datasets that differ from the training data is essential to assess the model's ability to generalize and identify potential weaknesses.
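The two techniques named above can be combined: fit an L2-regularized model and use k-fold cross-validation to choose the regularization strength. The sketch below does this for a one-feature linear model with no intercept, where the L2-penalized least-squares weight has the closed form w = Σxy / (Σx² + λ). The function names, the synthetic data, and the candidate λ values are assumptions made for illustration.

```python
import random


def fit_ridge_1d(xs, ys, lam):
    """Closed-form L2-regularized least squares for y ~ w * x (no intercept)."""
    return sum(x * y for x, y in zip(xs, ys)) / (sum(x * x for x in xs) + lam)


def k_fold_mse(xs, ys, lam, k=5):
    """Average held-out mean squared error over k folds."""
    n = len(xs)
    fold_errors = []
    for fold in range(k):
        test_idx = set(range(fold, n, k))  # every k-th point is held out
        train = [(x, y) for i, (x, y) in enumerate(zip(xs, ys)) if i not in test_idx]
        test = [(x, y) for i, (x, y) in enumerate(zip(xs, ys)) if i in test_idx]
        w = fit_ridge_1d([x for x, _ in train], [y for _, y in train], lam)
        fold_errors.append(sum((y - w * x) ** 2 for x, y in test) / len(test))
    return sum(fold_errors) / k


# Synthetic data (assumed): y = 2x plus a small amount of Gaussian noise.
rng = random.Random(0)
xs = [rng.uniform(-1, 1) for _ in range(50)]
ys = [2.0 * x + rng.gauss(0, 0.1) for x in xs]

# Score each candidate penalty on held-out data and keep the best one.
scores = {lam: k_fold_mse(xs, ys, lam) for lam in (0.0, 0.1, 1.0, 10.0)}
best_lam = min(scores, key=scores.get)
print(scores, best_lam)
```

On this simple, low-noise problem a heavy penalty hurts, because it shrinks the weight well below its true value. The point of the sketch is the mechanics: the penalty is selected by held-out error rather than by training error, which is what guards against overfitting in more flexible models.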

Ethical Considerations and Responsible AI Deployment

The development and deployment of AI raise significant ethical concerns that demand careful consideration.

Accountability and Transparency

Establishing clear lines of responsibility for AI systems and their outputs is crucial. This involves:

- Auditing AI Systems: Regular audits of AI systems can help identify and address biases, errors, and potential ethical issues.

- Establishing Ethical Guidelines for AI Development and Deployment: Clear ethical guidelines are necessary to guide the development and use of AI systems.

- User Rights and Data Privacy: Protecting user rights and data privacy is paramount. AI systems should be designed and used in ways that respect individual privacy and autonomy.

Societal Impact and Mitigation Strategies

AI has the potential to significantly impact society, both positively and negatively. Potential societal impacts include job displacement and privacy concerns. Responsible implementation requires:

- Investing in Retraining Programs: Preparing the workforce for the changes brought about by AI through retraining and upskilling initiatives is crucial to mitigate job displacement.

- Implementing Robust Regulatory Frameworks: Establishing appropriate regulations to govern the development and use of AI is essential to prevent harmful outcomes.

- Promoting Public Awareness and Education: Educating the public about the capabilities and limitations of AI, as well as its ethical implications, is vital to ensure responsible adoption.

Conclusion

The limitations of AI learning (data bias, lack of explainability, generalization issues, and ethical concerns) are significant challenges that must be addressed. Developing Responsible AI requires a multi-faceted approach: rigorous data curation, fairer algorithms, greater transparency, and robust ethical guidelines. The key takeaway is the urgent need for proactive measures to mitigate bias, improve model explainability, and ensure that AI systems are deployed ethically. The future of Responsible AI depends on a collective commitment to building AI technologies that benefit all of society and serve humanity equitably.
