The Limits Of AI Learning: Responsible AI Practices For A Safer Future

Data Bias and its Impact on AI Systems

AI systems are only as good as the data they are trained on. Models trained on biased datasets tend to reproduce those biases in their outputs, perpetuating and sometimes amplifying existing societal inequalities. This problem, often called AI bias, appears across many sectors and can lead to unfair or discriminatory outcomes.

For example, in recruitment, AI systems trained on historical data that reflects gender or racial biases may unfairly discriminate against qualified candidates from underrepresented groups. Similarly, in healthcare, biased algorithms could lead to misdiagnosis or unequal access to care. In the criminal justice system, biased AI could contribute to unfair sentencing or profiling.

Several factors contribute to the problem:

- Lack of diversity in training data: Insufficient representation of diverse populations in datasets leads to algorithms that perform poorly or unfairly for certain groups.

- Reinforcement of existing societal biases: AI systems can inadvertently learn the biases embedded in historical data and amplify them, leading to discriminatory outcomes.

- Difficulty detecting and mitigating bias: Identifying and correcting bias in complex AI models is a significant challenge that requires careful analysis and specialized techniques.
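
A common first step in detecting bias is to compare how often a model produces a favorable outcome for each group. The minimal sketch below assumes binary predictions (1 = favorable) and a single group attribute. It computes per-group selection rates, the largest gap between them (demographic parity difference), and the ratio of the lowest rate to the highest. The function names and the hiring data are hypothetical illustrations, not output from any real system.

```python
from collections import defaultdict


def selection_rates(predictions, groups):
    """Fraction of favorable (1) predictions for each group."""
    totals = defaultdict(int)
    favorable = defaultdict(int)
    for pred, group in zip(predictions, groups):
        totals[group] += 1
        favorable[group] += pred
    return {g: favorable[g] / totals[g] for g in totals}


def demographic_parity_difference(predictions, groups):
    """Largest gap in selection rate between any two groups (0 means parity)."""
    rates = selection_rates(predictions, groups)
    return max(rates.values()) - min(rates.values())


def disparate_impact_ratio(predictions, groups):
    """Lowest selection rate divided by the highest (1 means parity)."""
    rates = selection_rates(predictions, groups)
    return min(rates.values()) / max(rates.values())


# Hypothetical hiring-model outputs: 1 = "advance to interview".
preds = [1, 1, 1, 0, 1, 0, 0, 0, 1, 0]
groups = ["A", "A", "A", "A", "A", "B", "B", "B", "B", "B"]

print(selection_rates(preds, groups))                          # {'A': 0.8, 'B': 0.2}
print(round(demographic_parity_difference(preds, groups), 2))  # 0.6
print(disparate_impact_ratio(preds, groups))                   # 0.25
```

In US employment contexts, a ratio below 0.8 is often flagged under the "four-fifths" rule of thumb. Equal selection rates are only one definition of fairness, however. Other metrics compare error rates across groups, and the right choice depends on the application.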

Mitigating data bias requires proactive measures. Examples include data augmentation or resampling to improve the representation of underrepresented groups, and algorithmic fairness techniques that aim for equitable outcomes across groups. Robust methods for detecting and mitigating bias are essential for building responsible and trustworthy AI systems.
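
Reweighing, described by Kamiran and Calders, is one example of a mitigation technique. It assigns each training example a weight so that group membership and outcome label become statistically independent in the weighted data. The sketch below assumes a single group attribute and binary labels. The data is hypothetical, and the resulting weights would be passed to any learner that supports per-example weights.

```python
from collections import Counter


def reweighing_weights(groups, labels):
    """Per-example weights w = P(group) * P(label) / P(group, label).

    Combinations that are rarer than independence would predict get weights
    above 1; over-represented combinations get weights below 1.
    """
    n = len(labels)
    group_counts = Counter(groups)
    label_counts = Counter(labels)
    joint_counts = Counter(zip(groups, labels))
    weights = []
    for g, y in zip(groups, labels):
        expected = (group_counts[g] / n) * (label_counts[y] / n)
        observed = joint_counts[(g, y)] / n
        weights.append(expected / observed)
    return weights


def weighted_positive_rate(groups, labels, weights, group):
    """Share of (weighted) positive labels within one group."""
    num = sum(w for g, y, w in zip(groups, labels, weights) if g == group and y == 1)
    den = sum(w for g, w in zip(groups, weights) if g == group)
    return num / den


# Hypothetical historical labels: group A was approved far more often than group B.
groups = ["A", "A", "A", "A", "B", "B", "B", "B"]
labels = [1, 1, 1, 0, 1, 0, 0, 0]
weights = reweighing_weights(groups, labels)

print([round(w, 3) for w in weights])  # [0.667, 0.667, 0.667, 2.0, 2.0, 0.667, 0.667, 0.667]
print(round(weighted_positive_rate(groups, labels, weights, "A"), 3))  # 0.5
print(round(weighted_positive_rate(groups, labels, weights, "B"), 3))  # 0.5
```

In the raw labels, group A's positive rate is 0.75 and group B's is 0.25. After reweighing, both are 0.5. Reweighing changes only how much each example counts during training, not the examples themselves. It therefore cannot fix groups that are missing from the data entirely.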

The Problem of Explainability in AI ("Black Box" Problem)

Many advanced AI models, particularly deep learning systems, function as "black boxes." Their decision-making processes are opaque and difficult to understand, making it challenging to determine how they arrive at their conclusions. This lack of transparency poses significant challenges for accountability and trust.

The inability to understand an AI's reasoning process has several implications:

- Difficulty in debugging and improving AI systems: Without understanding why an AI made a particular decision, it's difficult to identify and correct errors or biases.

- Concerns about algorithmic accountability and legal liability: If an AI system makes a harmful decision, determining responsibility becomes challenging when the decision-making process is unclear.

- The need for explainable AI (XAI) techniques: Developing methods to make AI's decision-making processes more transparent is crucial for building trust and accountability.

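Researchers are actively developing methods to increase AI explainability. LIME (Local Interpretable Model-agnostic Explanations) approximates a model's behavior around a single prediction with a simple, interpretable surrogate model. SHAP (SHapley Additive exPlanations) attributes a prediction to individual input features using Shapley values from cooperative game theory. Both indicate which factors influenced a given prediction, and they are important steps toward addressing the "black box" problem. Their explanations are approximations of the model's behavior, however, rather than a full account of its internal reasoning.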
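
The Shapley values that SHAP is built on can be computed exactly for a very small model. To do so, average each feature's marginal contribution over every possible subset of the other features. The sketch below is a brute-force illustration, not the `shap` library's API. It assumes that a "missing" feature is replaced by a fixed baseline value (one of several possible conventions), and `toy_model` is a hypothetical model with one interaction term.

```python
from itertools import combinations
from math import factorial


def shapley_values(model, x, baseline):
    """Exact Shapley values for one prediction, found by enumerating every coalition.

    Features outside a coalition are set to their baseline value. Cost grows as
    2**n model calls, so this is only practical for a handful of features.
    """
    n = len(x)

    def value(coalition):
        # Model output when only features in `coalition` keep their real values.
        z = [x[i] if i in coalition else baseline[i] for i in range(n)]
        return model(z)

    phi = [0.0] * n
    for i in range(n):
        others = [j for j in range(n) if j != i]
        for size in range(n):
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                s = set(subset)
                # Marginal contribution of feature i when added to coalition s.
                phi[i] += weight * (value(s | {i}) - value(s))
    return phi


def toy_model(z):
    """Hypothetical model: two linear terms plus an interaction between z[0] and z[2]."""
    return 2 * z[0] + 3 * z[1] + z[0] * z[2]


x_example = [1.0, 2.0, 3.0]
baseline = [0.0, 0.0, 0.0]
phi = shapley_values(toy_model, x_example, baseline)
print([round(v, 6) for v in phi])  # [3.5, 6.0, 1.5]
```

The interaction term's contribution of 3 is split evenly between features 0 and 2. The attributions also sum to the difference between the prediction and the baseline prediction (11 here), a property SHAP preserves. Exact enumeration is exponential in the number of features, which is why SHAP relies on model-specific algorithms and sampling-based approximations.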

Security Risks and the Vulnerability of AI Systems

AI systems, despite their sophisticated capabilities, are vulnerable to various security threats. Adversarial attacks and data poisoning are particularly concerning. Adversarial attacks involve manipulating input data in subtle ways to cause the AI system to produce incorrect or misleading outputs. Data poisoning involves corrupting the training data to compromise the AI's performance.
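
The Fast Gradient Sign Method (FGSM) is a well-known adversarial attack. It moves every input feature a small step, epsilon, in whichever direction increases the model's loss. The sketch below applies it to a hypothetical logistic-regression model, where the input gradient has a closed form. The weights, input, and epsilon are illustrative assumptions.

```python
import math


def sigmoid(t):
    return 1.0 / (1.0 + math.exp(-t))


def predict_proba(weights, bias, x):
    """Probability of class 1 under a logistic-regression model."""
    return sigmoid(sum(w * xi for w, xi in zip(weights, x)) + bias)


def sign(v):
    return (v > 0) - (v < 0)


def fgsm(weights, bias, x, y_true, epsilon):
    """Fast Gradient Sign Method: shift every feature by epsilon in the direction
    that increases the cross-entropy loss for the true label.

    For logistic regression the gradient of that loss with respect to the input
    is (p - y_true) * weights, so no autodiff library is needed.
    """
    p = predict_proba(weights, bias, x)
    grad = [(p - y_true) * w for w in weights]
    return [xi + epsilon * sign(g) for xi, g in zip(x, grad)]


# Hypothetical model and input, correctly classified as class 1.
weights = [1.0, -2.0, 0.5, 1.5]
bias = 0.0
x = [0.5, 0.25, 1.0, 0.0]
x_adv = fgsm(weights, bias, x, y_true=1, epsilon=0.2)

print(round(predict_proba(weights, bias, x), 3))      # 0.622
print(round(predict_proba(weights, bias, x_adv), 3))  # 0.378
```

No feature moves by more than 0.2, yet the prediction flips. For a linear model, each step shifts the logit by epsilon times the sum of the absolute weights (0.2 × 5 = 1.0). With many input features, such as the pixels of an image, an imperceptibly small per-feature change can therefore add up to a large change in output.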

Securing AI systems requires attention to several areas:

- Adversarial examples that fool AI models: Small, almost imperceptible changes to input data can drastically alter an AI's output, potentially with serious consequences.

- Data breaches and unauthorized access to AI systems: AI systems often contain sensitive data, making them attractive targets for malicious actors.

- The need for security audits and robust defenses: Regular security audits and the implementation of robust security protocols are essential to protect AI systems from attacks.

Secure AI development practices must be prioritized, including secure data handling, robust model training, and rigorous testing to identify and mitigate vulnerabilities.
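
Rigorous testing can include checking that small changes to an input do not flip a model's decision. The sketch below is a simple randomized check under stated assumptions. `threshold_model` is a hypothetical two-feature classifier, and "small" is taken to mean that every feature moves by at most `epsilon`.

```python
import random


def is_locally_stable(predict, x, epsilon, trials=1000, seed=0):
    """Randomized robustness check around input x.

    Samples points whose features each differ from x by at most epsilon and
    returns (True, None) if the predicted label never changes, or
    (False, counterexample) for the first sampled point that changes it.
    """
    rng = random.Random(seed)
    original = predict(x)
    for _ in range(trials):
        noisy = [xi + rng.uniform(-epsilon, epsilon) for xi in x]
        if predict(noisy) != original:
            return False, noisy
    return True, None


def threshold_model(z):
    """Hypothetical classifier: label 1 when the two features sum above 1.0."""
    return int(z[0] + z[1] > 1.0)


print(is_locally_stable(threshold_model, [0.9, 0.9], epsilon=0.1)[0])   # True
print(is_locally_stable(threshold_model, [0.5, 0.52], epsilon=0.1)[0])  # False
```

A passing result only means no counterexample was found among the sampled points. Gradient-based attacks such as FGSM search far more effectively than random noise. A randomized check like this therefore complements adversarial testing rather than replacing it.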

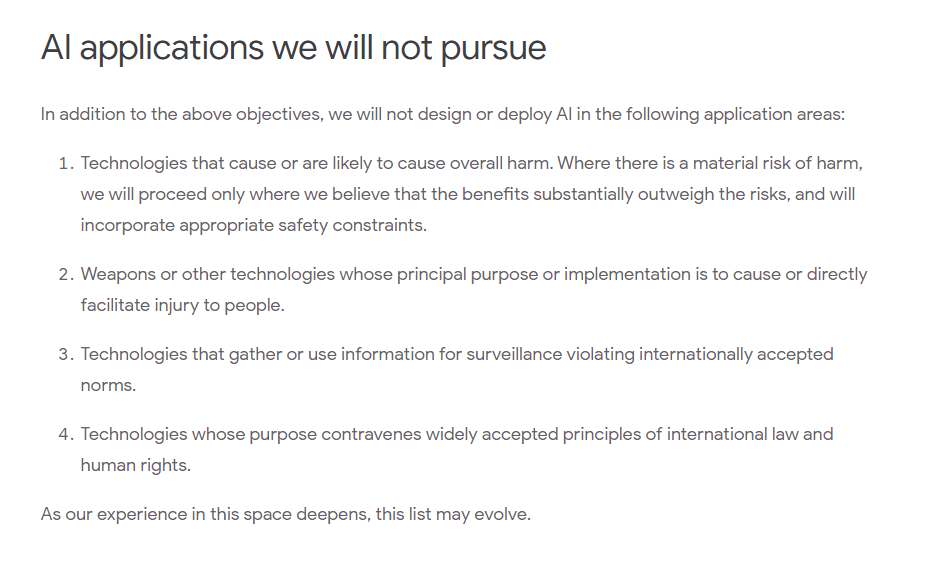

Ethical Considerations and Responsible AI Development

The deployment of AI raises significant ethical questions, including concerns about privacy, job displacement, and the potential for misuse. Responsible AI development requires weighing these implications carefully.

Several ethical concerns demand attention:

- Privacy concerns related to data collection and use: AI systems often rely on vast amounts of data, raising concerns about individual privacy and data security.

- The potential for AI to exacerbate existing inequalities: Biased AI systems can perpetuate and amplify existing societal inequalities, leading to unfair or discriminatory outcomes.

- The importance of human oversight in AI systems: Human oversight is crucial to ensure that AI systems are used responsibly and ethically.
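
One common way to build human oversight into an automated pipeline is to let the model act only on confident predictions and defer the rest to a person. The sketch below is a hypothetical illustration: the `Prediction` record, the `triage` function, and the 0.9 threshold are all assumptions. The approach is only meaningful if the model's confidence scores are reasonably well calibrated.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class Prediction:
    case_id: str
    label: str
    confidence: float  # model's probability for `label`, in [0, 1]


def triage(
    predictions: List[Prediction], threshold: float = 0.9
) -> Tuple[List[Prediction], List[Prediction]]:
    """Split predictions into those applied automatically and those queued for a
    human reviewer. The default threshold is a hypothetical value; a real system
    would set it per application, typically stricter for high-stakes decisions."""
    automated = [p for p in predictions if p.confidence >= threshold]
    for_review = [p for p in predictions if p.confidence < threshold]
    return automated, for_review


batch = [
    Prediction("case-1", "approve", 0.97),
    Prediction("case-2", "deny", 0.62),
    Prediction("case-3", "approve", 0.91),
]
automated, for_review = triage(batch)
print([p.case_id for p in automated])   # ['case-1', 'case-3']
print([p.case_id for p in for_review])  # ['case-2']
```

Deferral covers only the cases where the model signals doubt. Confident but wrong predictions still pass through, so periodic human audits of automated decisions remain necessary.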

Ethical guidelines and regulations are essential to guide the development and deployment of AI. Promoting responsible AI development principles, such as fairness, transparency, accountability, and privacy, is crucial for building a future where AI benefits all of humanity.

Conclusion

Understanding the limits of AI learning, including data bias, the lack of explainability, security risks, and ethical concerns, is crucial for developing responsible AI practices. By prioritizing data diversity, explainability, security, and ethical considerations, we can harness the power of AI while mitigating its risks. Building a safer future with AI requires a sustained commitment to responsible development and deployment, so that AI technologies are used ethically and for the betterment of society. Let's work together to address the limits of AI learning and make AI a force for good.
