The Role Of Algorithms In Radicalization: Are Tech Firms Liable For Mass Shootings?

How Algorithms Contribute to Radicalization

Algorithms designed to maximize user engagement can inadvertently contribute to the radicalization process through several key mechanisms.

Echo Chambers and Filter Bubbles

Algorithmic personalization creates echo chambers and filter bubbles, reinforcing extremist views and limiting exposure to counter-narratives. These systems prioritize content that confirms pre-existing biases, leading users down a rabbit hole of increasingly extreme viewpoints.

- Confirmation Bias Amplification: Algorithms show users more of what they already agree with, strengthening their convictions and making them less receptive to alternative perspectives.

- Limited Exposure to Diverse Opinions: The curated nature of personalized feeds restricts exposure to dissenting voices and balanced information, fostering a sense of isolation and validating extremist beliefs.

- Algorithmic Bias: Underlying biases in the algorithms themselves can further amplify the problem, disproportionately promoting certain types of content over others.
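
The feedback loop described in the points above can be illustrated with a toy simulation. This is only a minimal sketch under stated assumptions, not how any real platform works: the catalog, the `recommend` function, and the "user always clicks the top suggestion" behavior are all hypothetical and chosen for illustration.

```python
from collections import Counter

# Hypothetical catalog: (item_id, topic) pairs, three items for each of four topics.
CATALOG = [(f"{topic}{i}", topic) for topic in "ABCD" for i in range(3)]

def recommend(history, catalog, k=3):
    """Return the k items whose topic the user has clicked most often."""
    topic_counts = Counter(topic for _, topic in history)
    # Most-clicked topics first; sorted() is stable, so ties keep catalog order.
    return sorted(catalog, key=lambda item: -topic_counts[item[1]])[:k]

def topic_diversity(items):
    """Count the distinct topics present in a list of items."""
    return len({topic for _, topic in items})

def simulate(first_click, rounds=5):
    """Assumed user behavior: always click the top suggestion."""
    history = [first_click]
    feed = []
    for _ in range(rounds):
        feed = recommend(history, CATALOG)
        history.append(feed[0])
    return feed

feed = simulate(("B1", "B"))
print(topic_diversity(CATALOG), topic_diversity(feed))  # prints "4 1"
```

In this sketch a single initial click is enough for the feed to collapse from four topics to one, because each recommendation reinforces the history that produced it.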

Recommendation Systems and Extremist Content

Recommendation systems, designed to suggest relevant content, can end up promoting extremist content through personalized feeds and suggestions, often without users being aware of it. When engagement metrics are prioritized over safety, harmful content is amplified.

- Engagement-Driven Design: Algorithms prioritize content that elicits strong emotional responses, even if that content is harmful or extremist. This incentivizes the creation and spread of inflammatory material.

- Lack of Transparency: The opaque nature of many algorithms makes it difficult for users to understand how recommendations are generated, hindering their ability to critically evaluate the information presented.

- Content Amplification: Once extremist content gains traction, algorithms further amplify its reach, exposing it to a wider audience and potentially radicalizing more individuals.
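
To make the engagement-versus-safety trade-off concrete, the sketch below compares two ranking rules. Everything here is an assumption for illustration: the `Post` fields, the scores, and the `penalty` parameter are hypothetical, and real ranking systems are far more complex.

```python
from dataclasses import dataclass

@dataclass
class Post:
    post_id: str
    predicted_engagement: float  # hypothetical model output in [0, 1]
    harm_score: float            # hypothetical classifier output in [0, 1]

def rank_by_engagement(posts):
    """Engagement-only ranking: highest predicted engagement first."""
    return sorted(posts, key=lambda p: p.predicted_engagement, reverse=True)

def rank_with_harm_penalty(posts, penalty=1.0):
    """Subtract a weighted harm score before ranking (assumed design)."""
    return sorted(
        posts,
        key=lambda p: p.predicted_engagement - penalty * p.harm_score,
        reverse=True,
    )

posts = [
    Post("calm_news", 0.40, 0.05),
    Post("outrage_bait", 0.90, 0.80),
    Post("hobby_video", 0.55, 0.00),
]

print([p.post_id for p in rank_by_engagement(posts)])
# prints ['outrage_bait', 'hobby_video', 'calm_news']
print([p.post_id for p in rank_with_harm_penalty(posts)])
# prints ['hobby_video', 'calm_news', 'outrage_bait']
```

Under the engagement-only rule the inflammatory post is ranked first; with the assumed harm penalty it drops to last. The choice of `penalty` is exactly the kind of design decision that the free speech versus safety debate is about.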

The Spread of Misinformation and Disinformation

Algorithms facilitate the rapid dissemination of misinformation and disinformation, often fueling conspiracy theories and extremist ideologies. The speed and reach of online platforms make it challenging to detect and remove this content before it reaches a large audience.

- Viral Spread of Falsehoods: Algorithms can accelerate the spread of false narratives and conspiracy theories, lending them an appearance of legitimacy and reinforcing existing biases.

- Difficulty in Content Moderation: Identifying and removing harmful content at scale is a significant challenge for tech companies, often leading to a lag between the appearance of harmful content and its removal.

- Sophisticated Manipulation Tactics: Extremist groups deliberately game ranking and recommendation systems to spread their message more effectively.

Legal and Ethical Implications for Tech Companies

The role of algorithms in radicalization raises complex legal and ethical questions for tech companies.

Section 230 and its Limitations

In the US, Section 230 of the Communications Decency Act generally shields online platforms from liability for content posted by their users; some other countries have their own rules on platform liability. However, the scope of Section 230 is increasingly debated, particularly in the context of online extremism.

- Balancing Free Speech and Liability: The ongoing debate centers on balancing the need to protect free speech with the responsibility to prevent the spread of harmful content.

- Calls for Reform: Many argue that Section 230 needs reform to hold tech companies more accountable for the content hosted on their platforms.

- International Variations: Different countries have varying legal frameworks regarding platform liability, leading to a complex and fragmented regulatory landscape.

Ethical Responsibilities of Tech Companies

Beyond legal obligations, tech companies have ethical responsibilities to protect their users from harmful content. This involves navigating the tension between protecting free speech and preventing violence.

- Prioritizing User Safety: Companies have a moral obligation to design their platforms in a way that minimizes the risk of harm, even if it means limiting some forms of expression.

- Content Moderation Ethics: Developing effective and ethical content moderation policies is crucial, requiring careful consideration of human rights, freedom of expression, and the potential for algorithmic bias.

- Corporate Social Responsibility: Tech companies should actively engage in initiatives that counter online extremism and promote tolerance and understanding.

The Role of Transparency and Accountability

Greater transparency in algorithmic processes and increased accountability from tech companies are crucial for addressing the spread of extremist content.

- Algorithmic Audits: Independent audits of algorithms could help identify biases and vulnerabilities that contribute to radicalization.

- Enhanced Content Moderation Policies: More robust content moderation policies, informed by research and ethical considerations, are essential.

- User Empowerment: Providing users with greater control over their online experiences and enabling them to report harmful content effectively is crucial.
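
As one illustration of what an algorithmic audit might measure, the sketch below computes an amplification ratio: how over-represented flagged content is in what users were shown, relative to its share of the catalog. The metric, the function name, and the sample data are assumptions made for this example, not an established audit standard.

```python
def amplification_ratio(impressions, catalog_ids, flagged_ids):
    """Share of flagged items among impressions divided by their share of the catalog.

    A value above 1 means flagged content is shown more often than its
    presence in the catalog alone would suggest.
    """
    flagged = set(flagged_ids)
    flagged_shown = sum(1 for item in impressions if item in flagged)
    flagged_in_catalog = sum(1 for item in catalog_ids if item in flagged)
    if not impressions or flagged_in_catalog == 0:
        raise ValueError("need at least one impression and one flagged catalog item")
    # Equivalent to (flagged_shown / len(impressions)) / (flagged_in_catalog / len(catalog_ids)).
    return (flagged_shown * len(catalog_ids)) / (len(impressions) * flagged_in_catalog)

# Hypothetical data: 10 catalog items, 1 flagged; 8 impressions, 4 of the flagged item.
catalog_ids = [f"item{i}" for i in range(10)]
flagged_ids = ["item3"]
impressions = ["item3", "item1", "item3", "item5", "item3", "item7", "item3", "item2"]

print(amplification_ratio(impressions, catalog_ids, flagged_ids))  # prints 5.0
```

Here the flagged item makes up 10% of the catalog but 50% of impressions, giving a ratio of 5. An independent auditor would also need access to impression logs and a defensible definition of "flagged", which is where transparency obligations come in.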

Case Studies and Examples

Real-world incidents have been cited as examples of how algorithms may facilitate radicalization and subsequent acts of violence. Specific details often remain sensitive due to ongoing investigations, and any case study must rest on carefully selected, reliably sourced material to avoid unintentional harm or misrepresentation. Analyzing such instances is nonetheless crucial for understanding the problem's scope and developing solutions.

Conclusion: Addressing the Role of Algorithms in Radicalization

Algorithms can play a significant role in facilitating radicalization, and tech companies face both legal and ethical challenges in addressing this issue. Whether tech firms are liable for mass shootings that their algorithms may have helped facilitate remains a complex question that requires further legal and ethical debate. However, the mechanisms described above point to a need for increased accountability and proactive measures.

We must demand greater transparency and responsibility from tech companies regarding the role of algorithms in radicalization. This requires advocating for stronger regulation of social media algorithms, supporting research into algorithmic bias and its impact on online extremism, and promoting media literacy to help users critically evaluate online information. Engage in further research on this critical issue and advocate for change by contacting organizations working to combat online extremism and promote digital safety. Let's work together to mitigate the harmful impact of algorithms on radicalization and prevent future tragedies.

Featured Posts

-

Justice Pour Les Etoiles De Mer Une Nouvelle Ere De Droits Pour Le Vivant

May 31, 2025

Justice Pour Les Etoiles De Mer Une Nouvelle Ere De Droits Pour Le Vivant

May 31, 2025 -

Exploring The Boundaries Of Ai Learning A Path To Responsible Ai

May 31, 2025

Exploring The Boundaries Of Ai Learning A Path To Responsible Ai

May 31, 2025 -

Adverse Vada Result Casts Shadow On Jaime Munguias Career

May 31, 2025

Adverse Vada Result Casts Shadow On Jaime Munguias Career

May 31, 2025 -

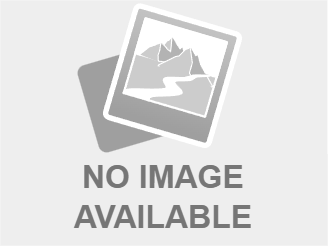

Nyt Mini Crossword Puzzle Solutions Tuesday April 8th

May 31, 2025

Nyt Mini Crossword Puzzle Solutions Tuesday April 8th

May 31, 2025 -

V Mware Costs To Soar 1 050 At And T Details Broadcoms Extreme Price Hike

May 31, 2025

V Mware Costs To Soar 1 050 At And T Details Broadcoms Extreme Price Hike

May 31, 2025