The Role Of Algorithms In Radicalization: Investigating Mass Shooter Cases


Algorithmic Amplification of Extremist Content

Algorithms, the largely invisible engines behind our online experiences, play a significant role in shaping what we see and how we interact with information. This section examines two key aspects of this influence:

Echo Chambers and Filter Bubbles

Algorithms create echo chambers and filter bubbles by prioritizing content similar to what a user has previously engaged with. This reinforces existing beliefs and biases, and because each new interaction feeds back into the next round of recommendations, the narrowing can compound, potentially exposing individuals to increasingly extreme viewpoints.

- Examples: YouTube's recommendation system, often criticized for leading users down rabbit holes of extremist content; Facebook's News Feed, which prioritizes content from friends and pages aligned with a user's existing interests.

- Psychological Mechanisms: Confirmation bias, where individuals seek out information that confirms their pre-existing beliefs, is amplified by algorithmic filtering. Cognitive dissonance, the discomfort of holding conflicting beliefs, is rarely triggered when the information a user sees is homogeneous, so there is little pressure to reconsider those beliefs.

- Research: Some studies have reported a correlation between exposure to extremist content through algorithmic recommendations and the likelihood of radicalization, although a correlation on its own does not show that the recommendations caused the radicalization.
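The feedback loop described above can be illustrated with a minimal content-based recommender sketch. Everything in it is an assumption made for illustration: the hypothetical three-topic catalogue, the cosine-similarity ranking, and the rule that the user's profile moves halfway toward whatever they click. It is not how YouTube, Facebook, or any other platform actually ranks content.

```python
import math

# Hypothetical catalogue: each item is a vector of topic weights
# (topic_a, topic_b, topic_c). Values are illustrative, not real data.
CATALOGUE = {
    "a1": (1.0, 0.0, 0.0),
    "a2": (0.9, 0.1, 0.0),
    "a3": (0.8, 0.0, 0.2),
    "b1": (0.0, 1.0, 0.0),
    "b2": (0.1, 0.9, 0.0),
    "c1": (0.0, 0.0, 1.0),
    "c2": (0.0, 0.2, 0.8),
}

def cosine(u, v):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(u, v))
    norm = math.sqrt(sum(x * x for x in u)) * math.sqrt(sum(y * y for y in v))
    return dot / norm if norm else 0.0

def recommend(profile, k=3):
    """Return the k catalogue items most similar to the user's profile."""
    ranked = sorted(CATALOGUE, key=lambda item: cosine(profile, CATALOGUE[item]), reverse=True)
    return ranked[:k]

def simulate(profile, rounds=5, learning_rate=0.5):
    """Each round the user clicks the top recommendation and the profile
    moves toward that item (hypothetical update rule), which narrows
    the next round of recommendations."""
    history = []
    for _ in range(rounds):
        recs = recommend(profile)
        history.append(recs)
        clicked = CATALOGUE[recs[0]]
        profile = tuple(p + learning_rate * (c - p) for p, c in zip(profile, clicked))
    return profile, history

final_profile, history = simulate((0.34, 0.33, 0.33))
for round_number, recs in enumerate(history, start=1):
    print(round_number, recs)
```

Starting from a nearly balanced profile, the first round of recommendations mixes topic-a and topic-c items; after a single click on a topic-a item, every later round contains only topic-a items. Nothing in the code targets a topic on purpose: the narrowing comes entirely from ranking by similarity to past engagement.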

Personalized Recommendations and Targeted Advertising

Algorithms personalize content recommendations and targeted advertising, potentially steering individuals towards extremist ideologies. This personalized approach can create a "rabbit hole" effect, gradually exposing users to increasingly extreme content without their full awareness.

- Examples: Targeted advertising campaigns by extremist groups on social media platforms using sophisticated user data analysis.

- Ethical Considerations: Personalized recommendations raise a central ethical question: should platforms be responsible for preventing their algorithms from spreading extremist content?

- Data Collection: The vast amount of data collected by online platforms provides fuel for these algorithms, allowing for highly targeted and potentially manipulative content delivery.
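A toy simulation can make the gradual "rabbit hole" drift concrete. The sketch assumes, purely for illustration, that content sits on a 0-to-1 "intensity" scale, that a recommender scores items by closeness to the user's current taste multiplied by a small bonus for more intense items (the hypothetical `predicted_engagement` function), and that the user's taste becomes whatever they last watched. None of these rules comes from a real platform; whether real systems contain such a bias is an empirical question.

```python
# Toy "rabbit hole" model. ASSUMPTIONS (hypothetical, not any platform's real
# ranking): content lies on a 0..1 intensity scale, predicted engagement is
# closeness-to-current-taste times a bonus for intensity, and the user's taste
# becomes whatever they last watched.

LEVELS = [i / 20 for i in range(21)]  # candidate intensities 0.00, 0.05, ..., 1.00

def predicted_engagement(item, position, width=0.3, boost=1.0):
    """Hypothetical score: quadratic closeness term times an intensity bonus."""
    closeness = max(0.0, 1.0 - ((item - position) / width) ** 2)
    return closeness * (1.0 + boost * item)

def rabbit_hole(start, rounds=10):
    """Follow the single top-scoring recommendation for `rounds` steps."""
    position = start
    path = [position]
    for _ in range(rounds):
        # The lambda reads the current position; it is updated only after max() returns.
        position = max(LEVELS, key=lambda item: predicted_engagement(item, position))
        path.append(position)
    return path

path = rabbit_hole(start=0.2)
print([round(p, 2) for p in path])
```

Each step moves only 0.05 up the scale, so no single recommendation looks like a jump, yet after ten steps the user's taste has moved from 0.2 to 0.7. With these parameters the score gain from stepping up by 0.05 instead of staying at taste p is (0.75 - p)/36, so in this model the drift levels off near 0.75.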

Online Communities and the Spread of Conspiracy Theories

Algorithms also play a crucial role in facilitating the formation and growth of online communities that spread conspiracy theories and extremist ideologies.

The Role of Online Forums and Social Media Groups

Online forums and social media groups provide spaces for individuals with similar extremist viewpoints to connect, share ideas, and reinforce each other's beliefs. Algorithms often prioritize engagement within these groups, making them even more insular and echo-chamber-like.

- Examples: Several individuals who later committed mass shootings had been active in particular online forums and groups; this article describes the general pattern rather than naming specific groups or individuals.

- Anonymity and Lack of Accountability: The anonymity offered by some online platforms reduces accountability and allows for the spread of hateful and violent content without fear of repercussions.

- Online Group Dynamics: Group polarization, where the opinions of individuals within a group become more extreme over time, is amplified within online communities facilitated by algorithms.
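How engagement ranking inside a group can amplify group polarization can be sketched with a toy opinion model. The assumptions are hypothetical: opinions are numbers (the sign is the side, the magnitude the strength), the feed either shows every post or only the k posts a ranking rule predicts will get the most engagement (assumed here to be the most extreme posts in the group's prevailing direction), and each member moves halfway toward the average of what their feed shows.

```python
from statistics import mean

# Toy model, illustrative only. ASSUMPTIONS: opinions are numbers (sign = side,
# magnitude = strength); a ranked feed shows the k posts with the highest
# hypothetical engagement, taken to be the most extreme in the group's lean.

def step(opinions, k=None, pull=0.5):
    """One round: each member moves `pull` of the way toward their feed's average."""
    lean = 1.0 if mean(opinions) >= 0 else -1.0
    # Most extreme posts in the prevailing direction come first.
    feed = sorted(opinions, key=lambda o: o * lean, reverse=True)
    if k is not None:
        feed = feed[:k]  # engagement-ranked: only the top k posts are seen
    target = mean(feed)
    return [o + pull * (target - o) for o in opinions]

def run(opinions, rounds=5, k=None):
    """Apply `step` repeatedly and return the final opinions."""
    for _ in range(rounds):
        opinions = step(opinions, k=k)
    return opinions

start = [-0.2, 0.0, 0.1, 0.2, 0.4]
flat = run(start)          # unranked feed: everyone sees every post
ranked = run(start, k=2)   # ranked feed: everyone sees only the top 2 posts
print(mean(flat), mean(ranked))
```

With the unranked feed the group settles on its original mean of 0.1. With the top-2 ranked feed every member is pulled toward the two most extreme voices, and the group mean approaches 0.3 (0.29375 after five rounds), with the one dissenting member crossing over to the majority side. In this model the only difference between the two runs is the ranking rule.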

The Spread of Misinformation and Disinformation

Algorithms contribute to the rapid spread of misinformation and disinformation online, creating fertile ground for the cultivation of radical beliefs. False narratives and conspiracy theories are easily disseminated and amplified through algorithmic prioritization.

- Examples: Misinformation campaigns surrounding mass shootings, often designed to promote specific agendas or incite further violence.

- Combating Misinformation: The challenge of combating misinformation is immense, requiring a multi-faceted approach involving fact-checking initiatives, media literacy education, and algorithmic adjustments.

- Bots and Automated Accounts: Bots and automated accounts are frequently used to spread misinformation and propaganda at scale, further amplifying the reach of extremist content.
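The amplification effect of automated accounts on an engagement-ranked feed can be shown in a few lines. The ranking rule (sort by raw engagement count), the post names, and the numbers are all hypothetical, and the second ranking assumes a perfect bot detector, which real platforms do not have.

```python
from dataclasses import dataclass

@dataclass
class Post:
    post_id: str
    human_engagements: int
    bot_engagements: int = 0

def rank_by_raw_engagement(posts):
    """Hypothetical naive rule: every like or share counts, whoever made it."""
    return sorted(posts, key=lambda p: p.human_engagements + p.bot_engagements, reverse=True)

def rank_by_human_engagement(posts):
    """Same rule after discarding engagement attributed to bots
    (assumes a perfect bot detector, which does not exist in practice)."""
    return sorted(posts, key=lambda p: p.human_engagements, reverse=True)

posts = [
    Post("news_report", human_engagements=120),
    Post("fact_check", human_engagements=90),
    Post("false_claim", human_engagements=15, bot_engagements=200),
]

print([p.post_id for p in rank_by_raw_engagement(posts)])
# ['false_claim', 'news_report', 'fact_check']
print([p.post_id for p in rank_by_human_engagement(posts)])
# ['news_report', 'fact_check', 'false_claim']
```

Two hundred automated engagements lift a post that only 15 people engaged with above posts with 90 and 120 human engagements. This is why detecting coordinated inauthentic activity is one of the algorithmic adjustments relevant to combating misinformation.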

Case Studies: Analyzing the Online Activity of Mass Shooters

This section does not identify specific individuals; in keeping with ethical considerations, it summarizes research on the online activities of mass shooters, which reveals concerning patterns.

Identifying Patterns in Online Behavior

Studies examining the online behavior of individuals involved in mass shootings frequently uncover a pattern of engagement with extremist content, participation in online communities espousing hateful ideologies, and exposure to conspiracy theories.

- Online Behaviors: Engagement with extremist content, participation in online communities promoting violence, and consumption of misinformation are common factors observed across numerous studies.

- Methodological Challenges: Studying online radicalization presents several challenges, including data access, privacy concerns, and the need for rigorous methodological approaches to avoid bias.

- Ethical Research Practices: Maintaining data privacy and ensuring ethical research practices are paramount when investigating sensitive topics like online radicalization.

Limitations and Further Research

Current research offers valuable insights, but limitations remain. Further investigation is crucial to fully understand the complex interplay between algorithms, online radicalization, and mass violence.

- Gaps in Understanding: The long-term effects of algorithmic exposure to extremist content, the influence of specific algorithmic designs, and the effectiveness of various intervention strategies require further research.

- Further Research Avenues: Longitudinal studies, comparative analyses across different platforms, and investigations into the effectiveness of algorithmic interventions are critical next steps.

Conclusion

This article has explored the role of algorithms in radicalization, highlighting the complex interplay between algorithmic processes, online extremism, and mass shooter cases. By amplifying extremist content, fostering insular online communities, and accelerating the spread of misinformation, algorithms create an environment conducive to radicalization. Understanding this connection is crucial for developing effective strategies to mitigate the risk. Only through responsible algorithm design, platform accountability, and a critical approach to online information can we hope to reduce algorithmic radicalization and help prevent future tragedies.

Featured Posts

-

Lw Ansf Alqwmu Nhw Astqlal Wtny Mstdam

May 30, 2025

Lw Ansf Alqwmu Nhw Astqlal Wtny Mstdam

May 30, 2025 -

The Road Not Taken Jacob Alons Departure From Dentistry

May 30, 2025

The Road Not Taken Jacob Alons Departure From Dentistry

May 30, 2025 -

Rozmowa Telefoniczna Trump Zelenski Transkrypcja I Interpretacja

May 30, 2025

Rozmowa Telefoniczna Trump Zelenski Transkrypcja I Interpretacja

May 30, 2025 -

Advancing Gene Therapy The Power Of Crispr For Whole Gene Delivery

May 30, 2025

Advancing Gene Therapy The Power Of Crispr For Whole Gene Delivery

May 30, 2025 -

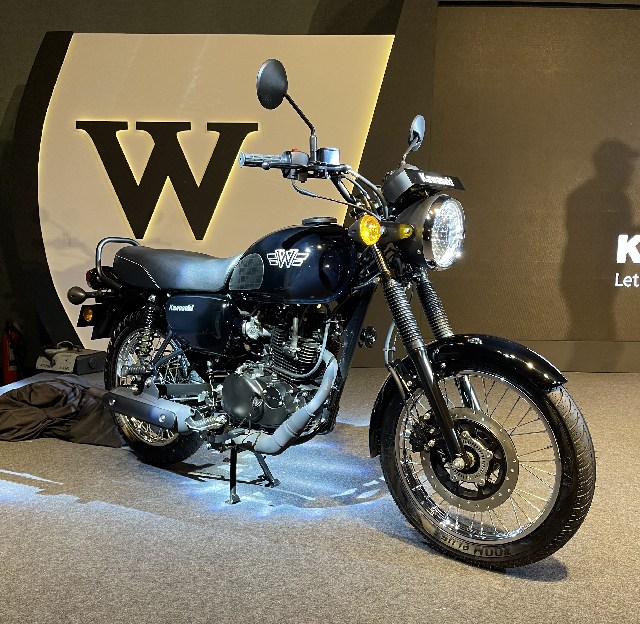

Kawasaki W175 Cafe Perpaduan Retro Klasik Dan Modern

May 30, 2025

Kawasaki W175 Cafe Perpaduan Retro Klasik Dan Modern

May 30, 2025

Latest Posts

-

The Tour Of The Alps A Look At Team Victoriouss Chances

May 31, 2025

The Tour Of The Alps A Look At Team Victoriouss Chances

May 31, 2025 -

Canadian Red Cross Response To Manitoba Wildfires Donate And Volunteer

May 31, 2025

Canadian Red Cross Response To Manitoba Wildfires Donate And Volunteer

May 31, 2025 -

Analysis Team Victorious At The Tour Of The Alps

May 31, 2025

Analysis Team Victorious At The Tour Of The Alps

May 31, 2025 -

Giro D Italia Live Info Orari E Risultati

May 31, 2025

Giro D Italia Live Info Orari E Risultati

May 31, 2025 -

Supporting Manitoba Wildfire Victims A Guide To Red Cross Aid

May 31, 2025

Supporting Manitoba Wildfire Victims A Guide To Red Cross Aid

May 31, 2025