Why AI Doesn't Truly Learn And How To Use It Responsibly

The Illusion of AI Learning

While AI systems can achieve remarkable results, it's crucial to understand that their "learning" differs fundamentally from human learning. The term "AI learning," often used interchangeably with "machine learning," can be misleading.

- Machine learning relies on pattern recognition and statistical analysis, not genuine understanding. AI algorithms identify correlations in vast datasets to make predictions or classifications, but they lack the contextual awareness and reasoning abilities of humans.

- Humans learn through context, reasoning, and emotional intelligence – aspects currently missing in AI. We learn from experiences, adapt to new situations, and make nuanced judgments based on a complex interplay of factors. AI, in its current form, lacks this holistic understanding.

- AI excels at specific tasks but lacks generalizability and adaptability. An AI trained to identify cats in images may struggle to recognize a cat in a setting or pose that was rare in its training data. This lack of adaptability is a significant limitation compared to human intelligence.
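To make the point about correlation without understanding concrete, here is a minimal sketch in Python. Everything in it is a made-up assumption for illustration: the two features (`background_brightness`, `ear_pointiness`), the toy data, and the one-rule learner `fit_stump`. Every training cat happens to be photographed indoors, so the learner latches onto the background rather than the animal.

```python
# Hypothetical toy data: (background_brightness, ear_pointiness) -> label.
# Every training cat is indoors (dark background) and every dog is outdoors
# (bright background), so the background predicts the label perfectly.
TRAIN = [
    ((0.2, 0.8), "cat"), ((0.1, 0.6), "cat"), ((0.3, 0.9), "cat"), ((0.2, 0.4), "cat"),
    ((0.9, 0.3), "dog"), ((0.8, 0.5), "dog"), ((0.7, 0.2), "dog"), ((0.9, 0.7), "dog"),
]
FEATURES = ("background_brightness", "ear_pointiness")


def fit_stump(rows):
    """Return (accuracy, feature, threshold, label_below) with the best training accuracy."""
    best = None
    for f in range(len(FEATURES)):
        values = sorted({x[f] for x, _ in rows})
        # Candidate thresholds: midpoints between neighbouring observed values.
        for lo, hi in zip(values, values[1:]):
            t = (lo + hi) / 2
            for below, above in (("cat", "dog"), ("dog", "cat")):
                hits = sum((below if x[f] < t else above) == y for x, y in rows)
                acc = hits / len(rows)
                if best is None or acc > best[0]:
                    best = (acc, f, t, below)
    return best


def predict(stump, x):
    """Apply the single learned rule to a new example."""
    _, f, t, below = stump
    above = "dog" if below == "cat" else "cat"
    return below if x[f] < t else above


stump = fit_stump(TRAIN)
print("rule uses:", FEATURES[stump[1]])

outdoor_cat = (0.85, 0.9)  # bright background, very pointy ears
print("outdoor cat classified as:", predict(stump, outdoor_cat))
```

The rule scores perfectly on the training set, yet it labels the outdoor cat a dog, because the statistical shortcut it found has nothing to do with what a cat is.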

Current AI algorithms also face several limitations:

- Data bias leads to skewed outcomes and unfair predictions. If the data used to train an AI system reflects existing societal biases (e.g., gender, race), the AI will likely perpetuate and amplify these biases in its predictions.

- AI systems are vulnerable to adversarial attacks and manipulation. Small, carefully crafted changes to input data can fool an AI system, leading to incorrect or malicious outcomes. This vulnerability is a serious concern in safety-critical applications.

- Lack of explainability makes it difficult to understand AI decision-making processes. This "black box" nature of many AI algorithms makes it challenging to identify and correct errors or biases, hindering trust and accountability.
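The adversarial-attack limitation above can be sketched with a tiny linear classifier. This is an illustrative assumption, not a real model: the weights, bias, input values, and the helper `nudge_towards_cat` are all invented. The helper shifts each feature by a small amount in the direction that raises the score, which is the same idea gradient-sign attacks apply to larger models.

```python
# Hypothetical linear "cat detector": the weights, bias, and input are made up.
WEIGHTS = [0.9, -0.6, 0.4, -0.8]
BIAS = -0.1


def score(x):
    """Weighted sum of the features plus bias."""
    return sum(w * xi for w, xi in zip(WEIGHTS, x)) + BIAS


def classify(x):
    return "cat" if score(x) >= 0 else "not cat"


def sign(v):
    return (v > 0) - (v < 0)


def nudge_towards_cat(x, epsilon):
    """Shift every feature by at most epsilon in the direction that raises the score."""
    return [xi + epsilon * sign(w) for w, xi in zip(WEIGHTS, x)]


original = [0.5, 0.6, 0.3, 0.2]
perturbed = nudge_towards_cat(original, epsilon=0.05)

print(classify(original), round(score(original), 3))
print(classify(perturbed), round(score(perturbed), 3))
```

No feature moves by more than 0.05, yet the predicted label flips, because many small changes aligned with the weights add up to a large change in the score.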

How AI "Learns": A Deep Dive into the Mechanics

AI "learns" through various methods, each with its strengths and limitations.

- Supervised learning: This approach involves training an AI on a labeled dataset, where each data point is paired with the correct answer. For example, training an image recognition system by showing it thousands of images labeled "cat" or "dog."

- Unsupervised learning: Here, the AI explores unlabeled data to discover patterns and structures. This is useful for tasks like clustering similar data points or dimensionality reduction.

- Reinforcement learning: This method involves training an AI agent through trial and error. The agent receives rewards for desirable actions and penalties for undesirable ones, learning to maximize its cumulative reward. Games like Go and chess have seen significant advancements using this technique.
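The difference between supervised and unsupervised learning can be sketched on the same toy points. This is a minimal illustration under assumptions: the data, the nearest-centroid classifier `fit_supervised`, and the two-cluster k-means `fit_unsupervised` (seeded with the first and last point so the run is repeatable) are all hypothetical stand-ins for far larger real systems.

```python
def mean_point(points):
    n = len(points)
    return tuple(sum(p[i] for p in points) / n for i in range(len(points[0])))


def dist2(a, b):
    return sum((ai - bi) ** 2 for ai, bi in zip(a, b))


def nearest(centers, p):
    """Key of the center closest to point p."""
    return min(centers, key=lambda k: dist2(centers[k], p))


def fit_supervised(points, labels):
    """Nearest-centroid classifier: one average point per provided label."""
    groups = {}
    for p, y in zip(points, labels):
        groups.setdefault(y, []).append(p)
    return {y: mean_point(ps) for y, ps in groups.items()}


def fit_unsupervised(points, steps=10):
    """Two-cluster k-means, seeded deterministically with the first and last point."""
    centers = {0: points[0], 1: points[-1]}
    for _ in range(steps):
        groups = {0: [], 1: []}
        for p in points:
            groups[nearest(centers, p)].append(p)
        # Keep the old center if a cluster ends up empty.
        centers = {k: mean_point(ps) if ps else centers[k] for k, ps in groups.items()}
    return centers


POINTS = [(1.0, 1.2), (0.8, 1.0), (1.2, 0.9), (4.0, 4.2), (4.1, 3.9), (3.8, 4.0)]
LABELS = ["cat", "cat", "cat", "dog", "dog", "dog"]

classifier = fit_supervised(POINTS, LABELS)       # uses the labels
print(nearest(classifier, (3.9, 4.1)))

clusters = fit_unsupervised(POINTS)               # never sees the labels
assignments = [nearest(clusters, p) for p in POINTS]
print(assignments)
```

The supervised model can name its prediction because it was given names. The unsupervised one finds the same two groups but can only number them; what the groups mean is left to a human.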

Let's illustrate these methods with examples:

- Image recognition (supervised learning): Large datasets of labeled images are used to train convolutional neural networks, allowing AI to identify objects, faces, and scenes with remarkable accuracy.

- Natural language processing (supervised and unsupervised learning): AI models are trained on massive text corpora to perform tasks like translation, sentiment analysis, and text summarization. Unsupervised learning techniques help uncover underlying relationships between words and phrases.

- Game playing (reinforcement learning): AI agents learn to play games like chess and Go by playing millions of games against themselves or other agents, optimizing their strategies based on rewards and penalties.
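Learning from rewards and penalties can be sketched with tabular Q-learning on a deliberately tiny problem. The five-cell corridor, the reward values, and the hyperparameters below are assumptions chosen for illustration; systems that play chess or Go use far larger models, but the update loop follows the same trial-and-error idea.

```python
import random

N_STATES = 5                 # corridor cells 0..4; cell 4 is the goal
ACTIONS = ("left", "right")


def step(state, action):
    """Hypothetical environment: +1 for reaching the goal, a small penalty otherwise."""
    nxt = max(0, state - 1) if action == "left" else state + 1
    if nxt == N_STATES - 1:
        return nxt, 1.0, True
    return nxt, -0.01, False


def train(episodes=500, alpha=0.5, gamma=0.9, epsilon=0.2, seed=0):
    rng = random.Random(seed)
    q = {(s, a): 0.0 for s in range(N_STATES) for a in ACTIONS}
    for _ in range(episodes):
        state = 0
        for _ in range(200):  # cap the episode length
            # Epsilon-greedy: mostly exploit the current estimate, sometimes explore.
            if rng.random() < epsilon:
                action = rng.choice(ACTIONS)
            else:
                action = max(ACTIONS, key=lambda a: q[(state, a)])
            nxt, reward, done = step(state, action)
            best_next = 0.0 if done else max(q[(nxt, a)] for a in ACTIONS)
            # Move the estimate toward reward plus discounted future value.
            q[(state, action)] += alpha * (reward + gamma * best_next - q[(state, action)])
            state = nxt
            if done:
                break
    return q


q = train()
policy = [max(ACTIONS, key=lambda a: q[(s, a)]) for s in range(N_STATES - 1)]
print(policy)
```

With these settings the greedy policy should settle on "right" in every cell. The agent arrives there purely by adjusting numbers in a table; it has no concept of a corridor or a goal.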

The Ethical Implications of AI's Limitations

The limitations of AI, particularly its susceptibility to bias and lack of transparency, have significant ethical implications across various applications.

- Algorithmic bias in hiring, loan applications, and criminal justice can perpetuate and amplify existing societal inequalities. AI systems trained on biased data can unfairly discriminate against certain groups, reinforcing existing prejudices.

- Lack of transparency can lead to unfair or discriminatory outcomes without a clear explanation of why. This lack of accountability makes it difficult to address injustices caused by AI systems.

The potential for misuse of AI technologies is also alarming:

- Deepfakes and misinformation campaigns: AI can be used to create realistic but fake videos and audio recordings, potentially damaging reputations or influencing elections.

- Autonomous weapons systems: The development of lethal autonomous weapons raises serious ethical concerns about accountability and the potential for unintended consequences.

Accountability and regulation are crucial to mitigate these risks. We need robust mechanisms to ensure that AI systems are developed and deployed responsibly.

Responsible AI Development and Deployment

Mitigating the risks associated with AI requires a multi-pronged approach:

- Strategies for mitigating bias in AI systems include using diverse datasets, auditing algorithms, and applying data augmentation to rebalance imbalanced datasets. In practice, this means curating training data to represent diverse populations and regularly auditing deployed AI systems for bias.

- Transparency and explainability are crucial for building trust and accountability. Developing interpretable AI models, where the decision-making process is transparent, is vital for identifying and correcting errors. Clear communication about AI's limitations to users is equally important.

- Human oversight and collaboration in AI development are essential to ensure ethical considerations are prioritized throughout the process. AI should be a tool to augment human capabilities, not replace human judgment entirely.
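One simple form the auditing step above could take is comparing how often a model selects people from each group. The sketch below is an assumption-laden illustration: the decision log, the group names, and the `min_ratio=0.8` threshold are hypothetical, and a real audit would look at several fairness measures rather than a single ratio.

```python
from collections import defaultdict

# Hypothetical audit log: (group, model_decision), where 1 means "selected".
DECISIONS = [
    ("group_a", 1), ("group_a", 1), ("group_a", 0), ("group_a", 1),
    ("group_b", 0), ("group_b", 1), ("group_b", 0), ("group_b", 0),
]


def selection_rates(decisions):
    """Fraction of positive decisions per group."""
    totals, selected = defaultdict(int), defaultdict(int)
    for group, decision in decisions:
        totals[group] += 1
        selected[group] += decision
    return {g: selected[g] / totals[g] for g in totals}


def audit(decisions, min_ratio=0.8):
    """Flag the model if the lowest selection rate falls too far below the highest."""
    rates = selection_rates(decisions)
    lowest, highest = min(rates.values()), max(rates.values())
    ratio = lowest / highest if highest else 1.0
    return {
        "rates": rates,
        "gap": highest - lowest,
        "ratio": ratio,
        "flagged": ratio < min_ratio,
    }


report = audit(DECISIONS)
print(report)
```

A flag from a check like this does not prove discrimination. It marks a disparity that a human reviewer should investigate, which is where the oversight described above comes in.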

Conclusion: Responsible Use of AI and the Future of AI Learning

While AI is a powerful tool with immense potential, it's crucial to remember that it doesn't truly learn like humans. Its limitations – susceptibility to bias, lack of transparency, and vulnerability to manipulation – demand responsible development and deployment, with ethical considerations at the forefront of AI research. Ongoing research into more robust, transparent, and explainable AI systems is vital, and understanding the limitations of AI learning lets us contribute to a future where AI is used responsibly and benefits all of humanity. Let's continue the conversation on responsible AI. Learn more about responsible AI development at [link to relevant resource 1] and [link to relevant resource 2].

Featured Posts

-

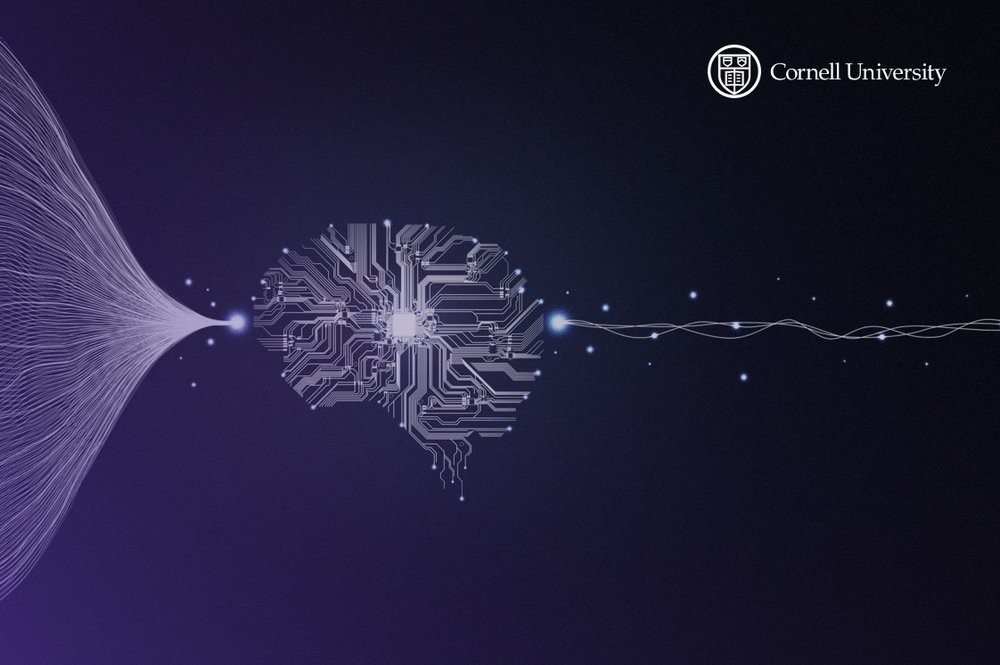

Tuesday March 18 Nyt Mini Crossword Solutions

May 31, 2025

Tuesday March 18 Nyt Mini Crossword Solutions

May 31, 2025 -

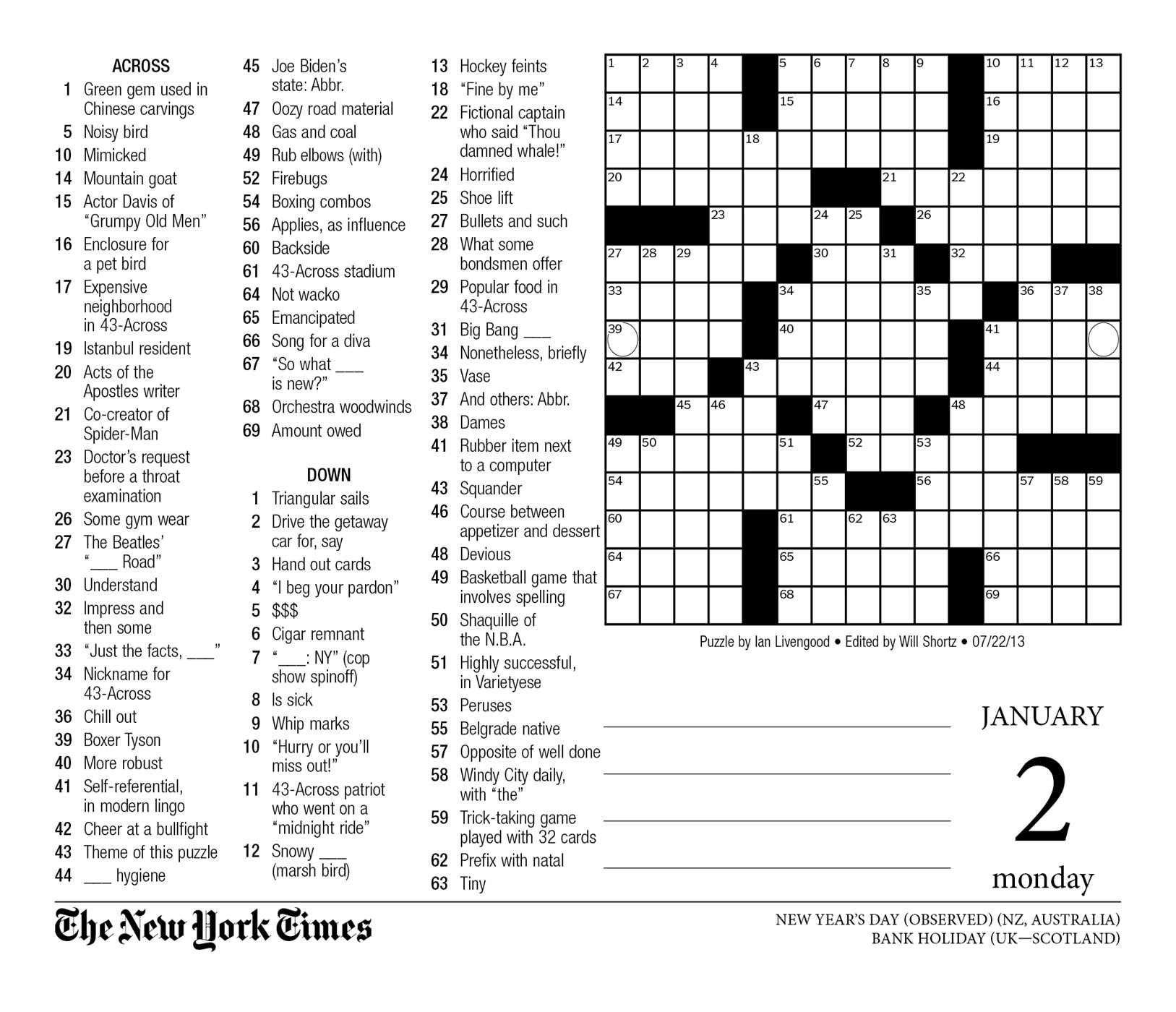

The Health Benefits Of Rosemary And Thyme

May 31, 2025

The Health Benefits Of Rosemary And Thyme

May 31, 2025 -

Premiera Singla Flowers Miley Cyrus Co Wiemy O Nowej Plycie

May 31, 2025

Premiera Singla Flowers Miley Cyrus Co Wiemy O Nowej Plycie

May 31, 2025 -

Banksys Broken Heart Mural Headed To Auction

May 31, 2025

Banksys Broken Heart Mural Headed To Auction

May 31, 2025 -

Gratis Wohnungen Diese Deutsche Stadt Sucht Neue Bewohner

May 31, 2025

Gratis Wohnungen Diese Deutsche Stadt Sucht Neue Bewohner

May 31, 2025