Character AI Chatbots And Free Speech: A Legal Gray Area

H2: The First Amendment and AI-Generated Content

In the United States, this discussion rests on the First Amendment, which guarantees freedom of speech. However, the First Amendment restricts government action rather than private companies, and applying it to AI-generated content raises questions that existing doctrine does not clearly answer.

H3: Defining "Speech" in the Context of AI

Defining "speech" is difficult for the legal system when the words are generated by AI. Does the output of a Character AI chatbot constitute protected speech? Courts are only beginning to grapple with this question.

- AI-generated art: Cases involving AI-generated images and artwork raise questions about copyright and authorship. Who owns the copyright: the user, the developer, or the AI itself?

- AI-generated literature: The emergence of AI-authored books and poems challenges traditional notions of authorship and creative expression.

- AI-generated code: AI is increasingly used to create computer code. Legal questions arise about ownership and liability if that code is used for malicious purposes.

- The crucial element remains the degree of human involvement. Is the AI simply a tool, or does it possess a level of independent creative agency that warrants different legal treatment? This question of "human authorship" is central to the debate surrounding AI-generated speech.

H3: Limitations on Free Speech and Their Applicability to AI

Even under the First Amendment, certain categories of expression are not protected. These exceptions, such as incitement to violence, defamation, and obscenity, are difficult to apply to AI-generated content.

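- Incitement to violence: An AI chatbot could theoretically generate content encouraging violence, potentially leading to real-world harm. Under US law, advocacy loses protection as incitement only when it is directed to and likely to produce imminent lawless action. That test was built around a human speaker's intent, so applying it to AI output, and deciding who is liable, is complex.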

- Defamation: AI-generated statements could be defamatory, damaging an individual's reputation. Deciding who is responsible, the user or the developer, would be a key legal battleground.

- Obscenity: AI chatbots may generate sexually explicit or offensive content. Only a narrow subset of such material meets the legal definition of obscenity, but explicit output still raises compliance concerns and calls for robust content moderation policies.

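- The issue of "platform liability" is crucial here. Is Character AI responsible for what its chatbots produce in response to user prompts, even though the platform does not directly control each output? In the US, Section 230 of the Communications Decency Act generally shields platforms from liability for content provided by third parties. Whether that protection extends to output generated by a platform's own AI model is unsettled, and platform responsibility remains a highly debated topic in online content moderation.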

H2: Character AI's Specific Policies and Practices

Understanding Character AI's approach to content moderation is key to assessing its role in this complex legal landscape.

H3: Character AI's Terms of Service and Content Moderation

Like other AI platforms, Character AI has terms of service that define acceptable use. The open question is how effectively it enforces them.

- Content moderation system effectiveness: Character AI's algorithms aim to detect and remove harmful content. Users keep developing new ways to circumvent these restrictions, however, so the systems must constantly adapt.

- Handling controversial or offensive content: The platform faces the difficult task of balancing free speech with the need to protect users from harmful content. Consistency in applying its policies is crucial.

- Transparency: The lack of transparency around Character AI's content moderation processes raises concerns about fairness and accountability. Greater transparency would build user trust.

- The use of "AI safety" techniques, including bias detection and mitigation strategies, is essential to ensure responsible AI development and deployment.

H3: Liability and Responsibility for AI-Generated Harm

Determining liability for harm caused by AI-generated content is legally challenging.

- Legal precedents: Existing legal frameworks may not adequately address the unique challenges posed by AI. New legal precedents will likely emerge from future court cases.

- Challenges in assigning responsibility: Is the user who prompts the AI responsible? Or is the developer of the AI accountable for its output? Establishing clear lines of responsibility is crucial.

- Potential for future legal battles: The rapidly evolving nature of AI technology means that legal battles over liability are almost inevitable. The concept of "product liability" applied to AI is a complex and largely unexplored field.

H2: Global Legal Perspectives on AI and Free Speech

The legal landscape surrounding AI and free speech varies significantly across countries.

H3: International Differences in Free Speech Laws

Different jurisdictions have varying levels of tolerance for online content.

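- Stricter regulations: Some jurisdictions regulate online content, including AI-generated content, far more strictly than others. For example, the European Union governs online platforms through the Digital Services Act and has adopted the AI Act, while China requires providers of generative AI services to follow specific content rules.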

- Comparative law: Comparing and contrasting approaches to AI regulation across various legal systems can help identify best practices and potential pitfalls.

- "Data privacy" laws also significantly impact the development and deployment of AI, influencing what data can be collected and how it can be used to train and operate these systems.

H3: The Future of AI Regulation and Free Speech

The future of AI regulation will significantly impact free speech online.

- Balancing free speech and harm mitigation: Finding a balance between protecting free speech and preventing harm caused by AI-generated content will be a critical challenge.

- Self-regulation vs. government oversight: The role of self-regulation by AI companies versus government intervention needs careful consideration. A collaborative approach may be most effective.

- The emerging field of "AI ethics" will play a significant role in shaping the future regulatory landscape, emphasizing responsible development and deployment of AI technologies.

H2: Conclusion

The interplay between Character AI chatbots and free speech presents a multifaceted legal and ethical dilemma. Content moderation, platform responsibility, and the evolving definition of speech in the digital age all add to its complexity. Because countries take sharply different approaches, the challenge also has an international dimension. Moving forward, a nuanced and collaborative approach is essential, one that protects free speech while mitigating the potential for harm from AI-generated content. Follow the evolving legal landscape of Character AI chatbots and free speech, and join the conversation about responsible AI development.

Featured Posts

-

Zimmermann Showcases Amira Al Zuhair At Paris Fashion Week

May 24, 2025

Zimmermann Showcases Amira Al Zuhair At Paris Fashion Week

May 24, 2025 -

Rybakina O Svoey Forme Poka Ne Na Pike

May 24, 2025

Rybakina O Svoey Forme Poka Ne Na Pike

May 24, 2025 -

Discover The Best New R And B Music Leon Thomas Flo And More

May 24, 2025

Discover The Best New R And B Music Leon Thomas Flo And More

May 24, 2025 -

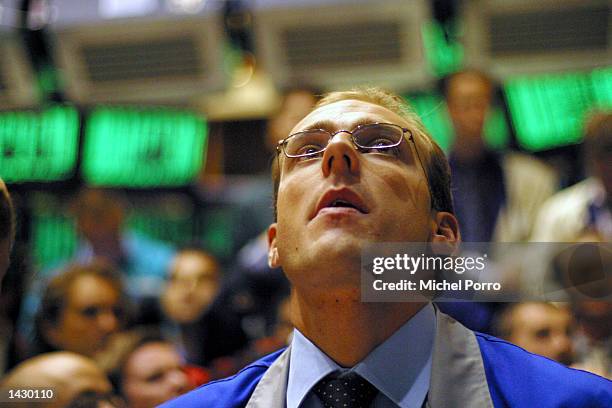

Amsterdam Aex Index Sharpest Decline In Over A Year

May 24, 2025

Amsterdam Aex Index Sharpest Decline In Over A Year

May 24, 2025 -

Southwest Airlines Carry On Restrictions New Rules For Portable Chargers

May 24, 2025

Southwest Airlines Carry On Restrictions New Rules For Portable Chargers

May 24, 2025

Latest Posts

-

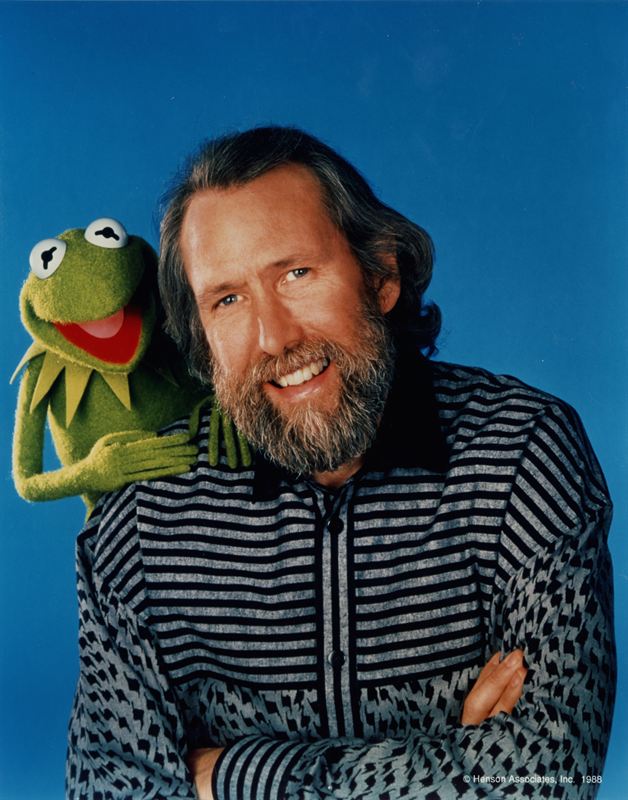

Hi Ho Kermit University Of Marylands 2025 Commencement Speaker

May 24, 2025

Hi Ho Kermit University Of Marylands 2025 Commencement Speaker

May 24, 2025 -

Kermit The Frog 2025 University Of Maryland Commencement Speaker

May 24, 2025

Kermit The Frog 2025 University Of Maryland Commencement Speaker

May 24, 2025 -

Kermit The Frog To Deliver 2025 Commencement Address At University Of Maryland

May 24, 2025

Kermit The Frog To Deliver 2025 Commencement Address At University Of Maryland

May 24, 2025 -

Kazakhstans Billie Jean King Cup Win A Detailed Match Report

May 24, 2025

Kazakhstans Billie Jean King Cup Win A Detailed Match Report

May 24, 2025 -

Billie Jean King Cup Qualifier Kazakhstan Beats Australia

May 24, 2025

Billie Jean King Cup Qualifier Kazakhstan Beats Australia

May 24, 2025