Decoding I/O and io: A Comparative Analysis of Google and OpenAI Approaches

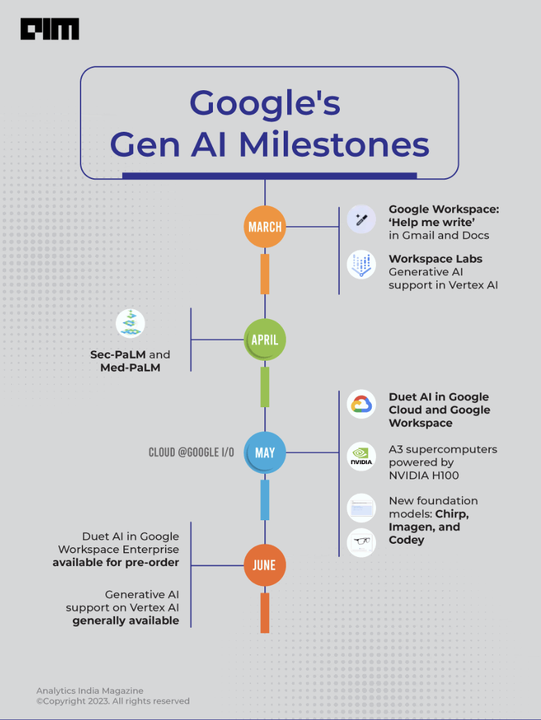

Google's Approach to I/O and io in AI

Google's approach to I/O is deeply intertwined with its massive infrastructure and its commitment to scalability. Its systems are built to handle enormous datasets and complex queries efficiently.

Google's Infrastructure and Scalability

Google's infrastructure is a cornerstone of its I/O strategy. It leverages distributed systems, massive data centers, and cutting-edge hardware to manage the immense data flow inherent in its AI operations.

- Google Cloud Platform (GCP): GCP provides the underlying infrastructure for Google's AI services, offering scalable compute resources, storage, and networking capabilities crucial for efficient I/O.

- TensorFlow: This open-source machine learning framework supports distributed training, and its tf.data API builds input pipelines that read, transform, and prefetch data in parallel, reducing I/O bottlenecks during model training and inference.

- TPUs (Tensor Processing Units): Google's custom-designed hardware accelerators significantly improve the performance of machine learning workloads by speeding up the underlying computations. Keeping them fully utilized depends on an I/O pipeline that can feed them data fast enough.

Google's infrastructure supports parallel processing by splitting data across many machines, so the I/O load is shared rather than concentrated on a single system. This reduces bottlenecks and makes it possible to handle datasets that would overwhelm a traditional single-server setup. Tight integration between these components keeps the whole I/O pipeline efficient.
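
The idea of spreading I/O across many machines can be illustrated with a small, hypothetical sketch. It assigns data shards to workers round-robin and then issues each worker's reads concurrently. A thread pool on one machine stands in for a cluster here, and the shard names, `FAKE_STORAGE`, and `read_shard` are illustrative assumptions, not Google APIs.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, Dict, List


def assign_shards(shard_ids: List[str], num_workers: int) -> Dict[int, List[str]]:
    """Round-robin assignment so each worker owns roughly 1/num_workers of the shards."""
    assignment: Dict[int, List[str]] = {w: [] for w in range(num_workers)}
    for i, shard_id in enumerate(shard_ids):
        assignment[i % num_workers].append(shard_id)
    return assignment


def read_shards_in_parallel(
    shard_ids: List[str],
    read_shard: Callable[[str], List[int]],
    max_workers: int = 4,
) -> List[List[int]]:
    """Issue all shard reads concurrently; results come back in shard_ids order."""
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        return list(pool.map(read_shard, shard_ids))


# Hypothetical in-memory "storage" standing in for files on remote disks.
FAKE_STORAGE = {f"shard-{i}": list(range(i * 3, i * 3 + 3)) for i in range(4)}


def read_shard(shard_id: str) -> List[int]:
    """Hypothetical reader; a real system would fetch from disk or the network."""
    return FAKE_STORAGE[shard_id]


if __name__ == "__main__":
    plan = assign_shards(sorted(FAKE_STORAGE), num_workers=2)
    print(plan)  # {0: ['shard-0', 'shard-2'], 1: ['shard-1', 'shard-3']}
    for worker, shards in plan.items():
        print(worker, read_shards_in_parallel(shards, read_shard))
```

Round-robin is the simplest balancing policy; production systems often use hashing or size-aware placement instead, so that adding workers or uneven shard sizes do not skew the load.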

Google's Focus on Data Efficiency and I/O Optimization

Google prioritizes minimizing I/O operations through several techniques, each of which contributes to the overall efficiency of its AI systems.

- Data Compression: Compressing data reduces the number of bytes that must be stored and transferred, trading some CPU time for lower I/O volume.

- Caching: Strategic caching mechanisms store frequently accessed data in faster memory tiers, reducing the need to repeatedly fetch data from slower storage, thus improving response times.

- Optimized Database Design: Google utilizes highly optimized database systems designed for efficient data retrieval and management, reducing the load on I/O resources.

These techniques, combined with query optimization and efficient data structures, improve the speed of Google's AI systems by reducing how much I/O they must perform. For instance, indexing and data partitioning limit the amount of data scanned during a query, because only the relevant index entries or partitions need to be read.
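
Compression and caching can be combined in a few lines. The sketch below is a generic illustration, not Google's implementation: it stores a record compressed with the standard-library `zlib` module and wraps the read path in `functools.lru_cache`, so only the first read pays the simulated storage and decompression cost. `SLOW_STORE` and the record contents are hypothetical.

```python
import zlib
from functools import lru_cache

# Hypothetical slow store holding compressed blobs (stands in for disk or network).
RAW_RECORD = b"query=weather;region=us;lang=en;" * 200
SLOW_STORE = {"record-1": zlib.compress(RAW_RECORD, 6)}


@lru_cache(maxsize=1024)
def fetch(key: str) -> bytes:
    """Read and decompress a blob; repeat calls for the same key are served from memory."""
    return zlib.decompress(SLOW_STORE[key])


if __name__ == "__main__":
    stored = SLOW_STORE["record-1"]
    print(f"raw {len(RAW_RECORD)} bytes -> stored {len(stored)} bytes")
    for _ in range(3):
        fetch("record-1")
    print(fetch.cache_info())  # hits=2, misses=1: only the first call touched the store
```

Highly repetitive data like this compresses extremely well; real-world ratios depend on the data and the codec, and the cache only helps when the same keys are requested repeatedly.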

Google's I/O in the Context of Specific AI Applications

Google's I/O strategies are tailored to the specific needs of its diverse AI applications.

- Google Search: Handling billions of search queries daily demands extremely efficient I/O. Google's indexing and retrieval systems are highly optimized to deliver fast search results, minimizing latency and maximizing throughput.

- Google Assistant: Real-time responses necessitate low-latency I/O. Google employs caching and other optimization techniques to keep response times short.

- Other Google Services: From Google Maps to Google Translate, various services rely on highly efficient I/O to deliver seamless user experiences. Specific challenges and solutions differ across services, but the overarching principle is optimization for speed and reliability.
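
Fast retrieval in search systems generally rests on an inverted index, which maps each term to the documents containing it so that a query reads only a few posting lists instead of scanning every document. The following is a minimal textbook sketch of that data structure, not a description of Google's actual index; the sample documents are made up.

```python
from collections import defaultdict
from typing import Dict, List, Set


def build_index(docs: Dict[int, str]) -> Dict[str, Set[int]]:
    """Map each lowercase term to the set of document IDs that contain it."""
    index: Dict[str, Set[int]] = defaultdict(set)
    for doc_id, text in docs.items():
        for term in text.lower().split():
            index[term].add(doc_id)
    return dict(index)


def search(index: Dict[str, Set[int]], query: str) -> List[int]:
    """Return IDs of documents containing every query term (AND semantics)."""
    terms = query.lower().split()
    if not terms:
        return []
    postings = [index.get(term, set()) for term in terms]
    return sorted(set.intersection(*postings))


if __name__ == "__main__":
    docs = {
        1: "TPU performance tuning",
        2: "TensorFlow input pipeline performance",
        3: "caching strategies for search",
    }
    index = build_index(docs)
    print(search(index, "performance"))        # [1, 2]
    print(search(index, "input performance"))  # [2]
```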

OpenAI's Approach to I/O and io in AI

OpenAI's strategy focuses heavily on building powerful large language models (LLMs), and I/O plays a crucial role in their training and deployment.

OpenAI's Model-Centric Approach and I/O

OpenAI's primary focus is on developing advanced generative models such as GPT-3 (a large language model) and DALL-E (an image-generation model). Training these models requires processing and transferring enormous datasets, making I/O a significant factor.

- GPT-3, DALL-E, etc.: The sheer size of these models and the datasets used to train them presents significant I/O challenges. Efficient data loading, preprocessing, and model parameter storage are crucial for effective training.

- Computational Demands: Training these models necessitates significant computational resources and efficient data pipelines to manage the massive data flows involved in both training and inference.
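
One common way to keep data loading from stalling training is prefetching: a background worker reads the next batches while the current one is being processed. The sketch below shows the general technique with a thread and a bounded queue; it is not OpenAI's pipeline, and `load_batches` with its sleep-based delay is a hypothetical stand-in for real storage reads.

```python
import queue
import threading
import time
from typing import Iterable, Iterator, TypeVar

T = TypeVar("T")
_DONE = object()  # sentinel marking the end of the source


class _Failure:
    """Wraps an exception raised by the source so the consumer can re-raise it."""

    def __init__(self, exc: BaseException) -> None:
        self.exc = exc


def prefetch(source: Iterable[T], buffer_size: int = 2) -> Iterator[T]:
    """Yield items from `source` while a background thread reads up to `buffer_size` ahead."""
    buf: "queue.Queue[object]" = queue.Queue(maxsize=buffer_size)

    def producer() -> None:
        try:
            for item in source:
                buf.put(item)  # blocks while the buffer is full
        except BaseException as exc:
            buf.put(_Failure(exc))
            return
        buf.put(_DONE)

    # Daemon thread: if the consumer stops early, it will not keep the process alive.
    threading.Thread(target=producer, daemon=True).start()
    while True:
        item = buf.get()
        if item is _DONE:
            return
        if isinstance(item, _Failure):
            raise item.exc
        yield item


def load_batches(n: int, delay: float = 0.05) -> Iterator[list]:
    """Hypothetical loader: each batch costs `delay` seconds of simulated I/O."""
    for i in range(n):
        time.sleep(delay)
        yield [i] * 4


if __name__ == "__main__":
    start = time.perf_counter()
    for batch in prefetch(load_batches(5)):
        time.sleep(0.05)  # simulated compute on the current batch
    print(f"elapsed {time.perf_counter() - start:.2f}s")
```

Because loading and compute overlap, the loop should finish noticeably faster than the roughly 0.5 s that strictly alternating load and compute steps would take; exact timings vary by machine.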

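OpenAI faces trade-offs between model size, computational resources, and I/O performance. Larger models typically need more training data and more compute, and their parameters alone take up more storage that must be loaded, checkpointed, and moved between devices, all of which raises I/O requirements.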
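
A back-of-the-envelope calculation shows how model size turns into I/O. GPT-3's largest configuration has 175 billion parameters, so its weights alone take 175 × 10⁹ × 2 bytes = 350 GB in 16-bit floating point. The 2 GB/s read bandwidth below is an illustrative assumption, not a measured figure, and the estimate ignores optimizer state, activations, and checkpoint overhead.

```python
GPT3_PARAMS = 175_000_000_000  # parameter count of the largest GPT-3 model
ASSUMED_BANDWIDTH = 2e9        # assumption: 2 GB/s sustained read throughput

BYTES_PER_PARAM = {"fp32": 4, "fp16": 2, "int8": 1}


def weight_bytes(num_params: int, bytes_per_param: int) -> int:
    """Storage for the raw weights alone (no optimizer state, no activations)."""
    return num_params * bytes_per_param


def load_seconds(num_bytes: float, bandwidth: float) -> float:
    """Lower bound on the time to read num_bytes at a sustained bandwidth in bytes/s."""
    return num_bytes / bandwidth


if __name__ == "__main__":
    for fmt, width in BYTES_PER_PARAM.items():
        size = weight_bytes(GPT3_PARAMS, width)
        secs = load_seconds(size, ASSUMED_BANDWIDTH)
        print(f"{fmt}: {size / 1e9:,.0f} GB, about {secs:,.0f} s to read")
```

Under these assumptions, simply reading the fp16 weights once takes about 175 seconds, which is why storage bandwidth and numeric precision matter as much as raw compute.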

OpenAI's API and I/O Interaction

OpenAI provides an API that allows developers to interact with its models. The efficiency of this API significantly impacts the user experience.

- API Requests and Responses: Developers interact with OpenAI's models through API calls, requiring efficient encoding and decoding of data for requests and responses.

- I/O Considerations and Limitations: API limitations may include rate limits, data size restrictions, and response time constraints, all of which are I/O-related factors.

OpenAI focuses on optimizing the API to minimize latency and ensure smooth interaction for developers, balancing performance with the resources available. Efficient data serialization and deserialization are vital for minimizing overhead.
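
On the client side, rate limits are usually handled by retrying with exponential backoff. The sketch below serializes the request to JSON once, decodes the JSON response, and retries rate-limited calls with randomized ("full jitter") delays. It does not use OpenAI's client library: `send`, `RateLimited`, and the `example-model` payload are hypothetical placeholders shaped loosely like a chat-style request.

```python
import json
import random
import time
from typing import Callable


class RateLimited(Exception):
    """Hypothetical error a client raises when the server replies HTTP 429."""


def call_with_backoff(
    send: Callable[[str], str],
    payload: dict,
    max_retries: int = 5,
    base_delay: float = 0.5,
    max_delay: float = 8.0,
    sleep: Callable[[float], None] = time.sleep,
) -> dict:
    """Send a JSON payload, retrying rate-limited calls with capped exponential backoff."""
    if max_retries < 0:
        raise ValueError("max_retries must be >= 0")
    body = json.dumps(payload)  # serialize once; reused on every retry
    for attempt in range(max_retries + 1):
        try:
            return json.loads(send(body))  # deserialize the JSON response
        except RateLimited:
            if attempt == max_retries:
                raise  # out of retries: surface the error to the caller
            cap = min(max_delay, base_delay * 2 ** attempt)
            sleep(random.uniform(0, cap))  # jitter spreads retries from many clients
    raise RuntimeError("unreachable")


if __name__ == "__main__":
    calls = []

    def flaky_send(body: str) -> str:
        """Hypothetical transport that is rate-limited twice, then succeeds."""
        calls.append(body)
        if len(calls) < 3:
            raise RateLimited()
        return json.dumps({"status": "ok", "echo": json.loads(body)["messages"][0]["content"]})

    request = {"model": "example-model", "messages": [{"role": "user", "content": "Hello"}]}
    print(call_with_backoff(flaky_send, request, sleep=lambda s: None))
    # {'status': 'ok', 'echo': 'Hello'}
```

Injecting `sleep` keeps the retry logic testable without real waiting; in production the default `time.sleep` applies.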

OpenAI's I/O and Research Focus

OpenAI researches ways to make LLMs more efficient, work that can also ease their I/O demands. This involves exploring new architectures, algorithms, and hardware solutions.

- Research Papers and Publications: OpenAI publishes research papers and technical reports, some of which address the efficiency of training and serving large models.

- Future Trends: Future advancements might include specialized hardware for LLMs, improved data compression techniques, and more efficient model architectures that reduce I/O demands.
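
One compression technique already widely used to cut the I/O cost of model weights is quantization: storing each weight in fewer bits. The sketch below shows simple symmetric int8 quantization with one scale per tensor. It is a generic illustration, not a method attributed to OpenAI, and the sample weights are made up.

```python
from array import array
from typing import List, Tuple


def quantize_int8(weights: List[float]) -> Tuple[List[int], float]:
    """Symmetric per-tensor quantization: each weight w is stored as q with w ≈ scale * q."""
    max_abs = max((abs(w) for w in weights), default=0.0)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    # Clamping guards against rounding pushing a value just outside the int8 range.
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q: List[int], scale: float) -> List[float]:
    """Recover approximate float weights from int8 codes and the shared scale."""
    return [scale * v for v in q]


if __name__ == "__main__":
    weights = [0.5, -1.0, 0.25, 0.0, 0.75]
    q, scale = quantize_int8(weights)
    fp32_bytes = len(array("f", weights).tobytes())  # 4 bytes per value
    int8_bytes = len(array("b", q).tobytes())        # 1 byte per value
    print(q, f"scale={scale:.6f}", f"{fp32_bytes} B -> {int8_bytes} B")
    errors = [abs(a - b) for a, b in zip(weights, dequantize(q, scale))]
    print(f"max error {max(errors):.6f}")
```

Each weight shrinks from 4 bytes to 1, at the cost of a rounding error of at most half the scale per weight; the single stored scale adds negligible overhead for large tensors.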

A Direct Comparison of Google and OpenAI's I/O Strategies

Comparing Google and OpenAI reveals distinct approaches shaped by their respective priorities.

Scalability and Infrastructure

Google's own global network of data centers gives it enormous scalability for handling large data volumes and complex I/O tasks. OpenAI, which has historically relied largely on Microsoft Azure for compute rather than operating infrastructure at Google's scale, focuses more on optimizing model performance within the resources available to it.

Data Efficiency and Optimization Techniques

Both companies employ advanced data efficiency and optimization techniques. The difference lies in where they scale: Google can spread work across its own vast infrastructure, while OpenAI concentrates on making its models more efficient.

Focus and Application

Google's I/O priorities are shaped by the diverse range of its services, requiring extremely high scalability and reliability. OpenAI's focus on LLMs prioritizes model performance and efficient API interaction for developers.

Conclusion

This comparative analysis of Google's and OpenAI's approaches to I/O and io highlights distinct strategies tailored to each company's goals and infrastructure. Google's emphasis on massive scalability and data efficiency is evident in its infrastructure and optimization techniques, while OpenAI prioritizes model performance and developer access through its API. Understanding these differences can help anyone building AI systems decide where I/O optimization will pay off most: in the infrastructure that moves data, or in the models and interfaces that consume it.

Featured Posts

-

Escape To The Country Choosing The Right Rural Property For You

May 25, 2025

Escape To The Country Choosing The Right Rural Property For You

May 25, 2025 -

The Nvidia Rtx 5060 Launch Lessons For Gamers And Reviewers

May 25, 2025

The Nvidia Rtx 5060 Launch Lessons For Gamers And Reviewers

May 25, 2025 -

Demnas Gucci Designs Kering Announces Sales Drop

May 25, 2025

Demnas Gucci Designs Kering Announces Sales Drop

May 25, 2025 -

Can Wall Streets Recovery Undermine The German Daxs Gains

May 25, 2025

Can Wall Streets Recovery Undermine The German Daxs Gains

May 25, 2025 -

Building Inspiration Lego Master Manny Garcia At Veterans Memorial Elementary

May 25, 2025

Building Inspiration Lego Master Manny Garcia At Veterans Memorial Elementary

May 25, 2025

Latest Posts

-

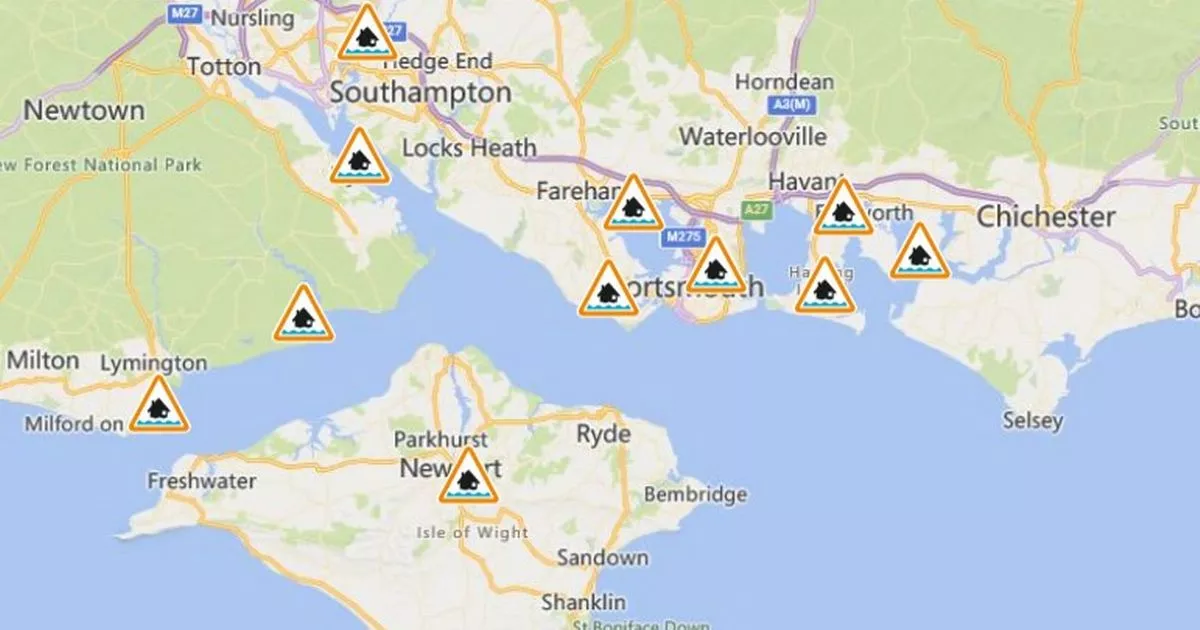

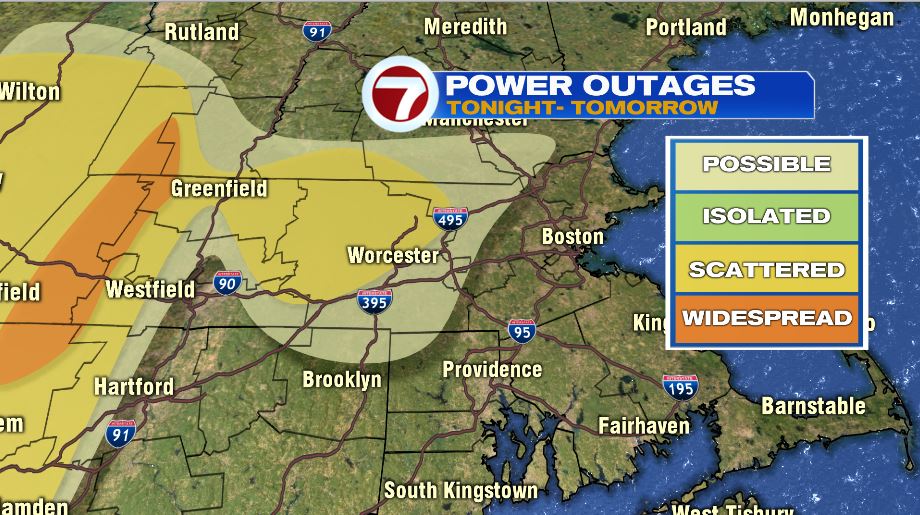

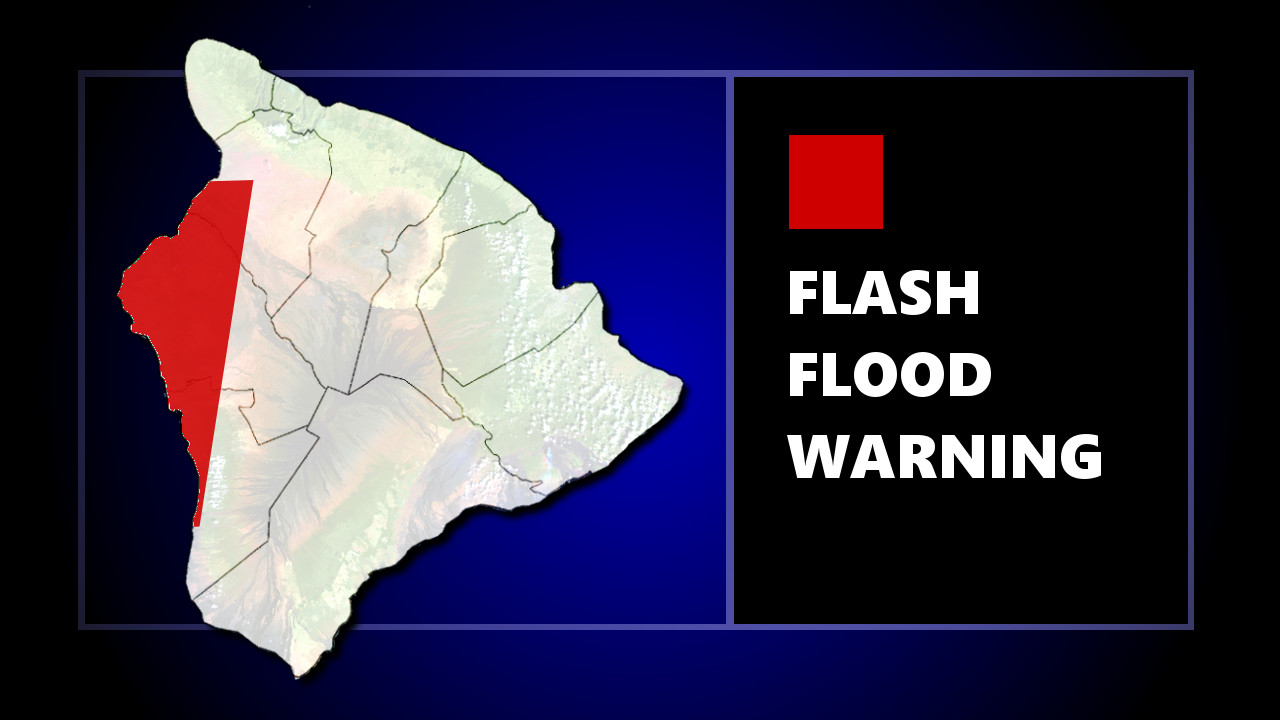

Thursday Night Flash Flood Warning Issued For Hampshire And Worcester

May 25, 2025

Thursday Night Flash Flood Warning Issued For Hampshire And Worcester

May 25, 2025 -

Flash Flood Threat Urgent Warning For Hampshire And Worcester Counties

May 25, 2025

Flash Flood Threat Urgent Warning For Hampshire And Worcester Counties

May 25, 2025 -

Severe Thunderstorms Bring Flash Flood Warning To Hampshire And Worcester

May 25, 2025

Severe Thunderstorms Bring Flash Flood Warning To Hampshire And Worcester

May 25, 2025 -

Hampshire And Worcester Counties Under Flash Flood Warning Thursday

May 25, 2025

Hampshire And Worcester Counties Under Flash Flood Warning Thursday

May 25, 2025 -

Urgent Flash Flood Warning Issued For Parts Of Pennsylvania

May 25, 2025

Urgent Flash Flood Warning Issued For Parts Of Pennsylvania

May 25, 2025