From Waste To Words: How AI Creates A Meaningful Podcast From Repetitive Scatological Documents


The Challenge of Processing Scatological Data

Working with scatological data presents significant hurdles not found in more conventional datasets. Its unstructured format, often riddled with inconsistencies and informal language, defeats traditional analysis methods, and its potentially offensive language demands robust filtering and careful ethical handling.

- Data cleaning and pre-processing challenges: Removing irrelevant information, handling inconsistencies in spelling and grammar, and dealing with the sheer volume of data are all significant initial steps.

- Identifying patterns and meaningful information within the noise: Sifting through vast quantities of potentially repetitive and irrelevant text to extract meaningful insights requires sophisticated algorithms.

- Ethical considerations regarding the use and representation of such data: Privacy concerns, the potential for misinterpretation, and the risk of perpetuating harmful stereotypes all demand responsible data handling.
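The cleaning steps above can be sketched in plain Python. This is a minimal illustration, assuming documents arrive as plain strings: it applies Unicode normalization and lowercasing, strips punctuation, applies a small hypothetical spelling-correction table (`SPELLING_FIXES`), and drops documents that become identical after normalization, which is one simple way to thin out a repetitive corpus. The function names are illustrative, not part of any library.

```python
import re
import unicodedata

# Hypothetical spelling-normalization table; a real project would derive one
# from the corpus itself or use a dedicated spell-checking tool.
SPELLING_FIXES = {"teh": "the", "recieve": "receive", "u": "you"}


def normalize(text: str) -> str:
    """NFKC-normalize, lowercase, drop punctuation, and fix known misspellings."""
    text = unicodedata.normalize("NFKC", text).lower()
    # Replace anything other than ASCII letters, digits, apostrophes, or whitespace.
    text = re.sub(r"[^a-z0-9'\s]", " ", text)
    return " ".join(SPELLING_FIXES.get(w, w) for w in text.split())


def clean_corpus(documents: list[str]) -> list[str]:
    """Normalize every document, skipping empty ones and repeats."""
    seen: set[str] = set()
    cleaned: list[str] = []
    for doc in documents:
        norm = normalize(doc)
        if norm and norm not in seen:
            seen.add(norm)
            cleaned.append(norm)
    return cleaned


docs = [
    "Teh toilet is BROKEN again!!",
    "teh toilet is broken again",
    "   ",
    "Please recieve this complaint.",
]
print(clean_corpus(docs))
# ['the toilet is broken again', 'please receive this complaint']
```

Exact-match deduplication only removes verbatim repeats; near-duplicates would need a similarity measure on top of this.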

AI's Role in Data Analysis and Synthesis

Fortunately, artificial intelligence (AI), and natural language processing (NLP) in particular, offers powerful tools for overcoming these challenges. NLP algorithms can analyze, synthesize, and transform this seemingly unusable data into structured information suitable for podcast creation.

- Sentiment analysis: NLP techniques can gauge the emotional tone within the documents, identifying prevalent sentiments like frustration, humor, or anger, enriching the podcast's narrative depth.

- Topic modeling: Algorithms like Latent Dirichlet Allocation (LDA) can identify recurring themes and narratives, providing the structure for a coherent podcast storyline.

- Text summarization: AI can condense large amounts of data into concise and manageable summaries, providing key talking points for the podcast script.

- AI-driven script generation: Advanced models can even generate initial scripts based on the extracted information, significantly accelerating the podcast production process.
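Text summarization can be illustrated with a simple extractive approach: score each sentence by the average corpus frequency of its non-stop-words and keep the top few, in their original order. This is a deliberately basic stand-in for transformer-based summarizers, not LDA and not an abstractive model; the stop-word list and the `summarize` helper are hypothetical.

```python
import re
from collections import Counter

# Small hypothetical stop-word list; NLTK and spaCy ship much fuller ones.
STOP_WORDS = {"the", "a", "an", "is", "are", "was", "and", "or",
              "of", "to", "in", "it", "this", "that", "on", "for"}


def split_sentences(text: str) -> list[str]:
    """Naive splitter: break after '.', '!' or '?' followed by whitespace."""
    return [s.strip() for s in re.split(r"(?<=[.!?])\s+", text) if s.strip()]


def content_words(text: str) -> list[str]:
    """Lowercase word tokens with stop words removed."""
    return [w for w in re.findall(r"[a-z']+", text.lower()) if w not in STOP_WORDS]


def summarize(text: str, max_sentences: int = 2) -> list[str]:
    """Return the top-scoring sentences, kept in their original order."""
    sentences = split_sentences(text)
    freq = Counter(content_words(text))

    def score(sentence: str) -> float:
        tokens = content_words(sentence)
        # Average rather than sum, so long sentences are not automatically favored.
        return sum(freq[t] for t in tokens) / len(tokens) if tokens else 0.0

    ranked = sorted(range(len(sentences)), key=lambda i: score(sentences[i]), reverse=True)
    return [sentences[i] for i in sorted(ranked[:max_sentences])]


report = ("The sewer backed up on Monday. Residents complained about the sewer smell. "
          "A cat sat nearby. The city promised to fix the sewer by Friday.")
print(summarize(report))
# ['The sewer backed up on Monday.', 'Residents complained about the sewer smell.']
```

Because repeated words raise sentence scores, this heuristic naturally surfaces the themes a repetitive corpus keeps returning to, which is the raw material for talking points.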

Specific AI Techniques and Tools

Several specific AI tools and techniques are central to this process. NLP libraries such as spaCy and NLTK provide the fundamentals: text preprocessing, tokenization, and part-of-speech tagging. More advanced transformer-based models, such as those available through Hugging Face, excel at sentiment analysis, topic modeling, and text summarization. Because these deep learning models take the surrounding context of each word into account, they capture nuance that simple keyword- or frequency-based methods miss, which generally makes their analyses more accurate.
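As a transparent baseline for sentiment analysis, a lexicon-based scorer sums per-word polarity values and flips the sign of any word directly preceded by a negator. The lexicon here is a tiny hypothetical example; in practice you might swap in a transformer model, for example through the Hugging Face `transformers` library's `pipeline("sentiment-analysis")`. Note that this sketch yields only polarity (positive, negative, neutral), not finer emotions such as frustration or humor.

```python
import re

# Tiny hypothetical lexicon; real lexicons and transformer models cover far more.
LEXICON = {
    "funny": 1.0, "hilarious": 2.0, "relief": 1.0, "great": 1.0,
    "gross": -1.0, "disgusting": -2.0, "broken": -1.0, "angry": -1.5, "awful": -2.0,
}
NEGATORS = {"not", "never", "no"}


def sentiment(text: str) -> float:
    """Sum lexicon scores, flipping a word's sign if the previous token is a negator."""
    tokens = re.findall(r"[a-z']+", text.lower())
    total = 0.0
    for i, tok in enumerate(tokens):
        value = LEXICON.get(tok, 0.0)
        if i > 0 and tokens[i - 1] in NEGATORS:
            value = -value
        total += value
    return total


def label(score: float, threshold: float = 0.5) -> str:
    """Map a numeric score to a coarse polarity label."""
    if score >= threshold:
        return "positive"
    if score <= -threshold:
        return "negative"
    return "neutral"


for line in ["The smell was disgusting and the pipe is broken",
             "Honestly hilarious, not gross at all",
             "The report arrived on Tuesday"]:
    print(label(sentiment(line)), sentiment(line))
# negative -3.0
# positive 3.0
# neutral 0.0
```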

Transforming Data into a Meaningful Podcast Narrative

Once the data has been analyzed and synthesized, the next step is to transform it into a compelling and engaging podcast narrative. This involves careful consideration of story structure, scriptwriting, and audio production techniques.

- Structuring the narrative arc: Creating a clear beginning, middle, and end, building suspense, and ensuring a satisfying conclusion are vital for a captivating listener experience.

- Incorporating relevant sound effects and music: Audio elements can enhance the emotional impact and thematic coherence of the podcast.

- Choosing a suitable voice-over artist: The voice needs to complement the tone and content of the podcast, effectively conveying the extracted narratives.

- Podcast editing and mastering techniques: Professional editing and mastering are crucial to ensure high-quality audio production.

Ethical Considerations and Responsible AI

The ethical implications of using scatological data cannot be overlooked. Responsible AI development is paramount to avoid any potential harm or misrepresentation.

- Anonymization and data privacy: Protecting the identities of individuals mentioned in the documents is crucial, so names, contact details, and other identifiers should be removed or replaced with pseudonyms before analysis.

- Avoiding the perpetuation of harmful stereotypes or biases: AI models must be carefully trained and monitored to prevent the amplification of existing societal biases.

- Transparency and accountability in the AI process: The methods used and the decisions made should be transparent and auditable.
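A minimal anonymization pass might mask identifiers with predictable shapes (email addresses, phone numbers) and replace known names with stable pseudonyms. This sketch assumes the list of names is already available; the regex patterns and the `anonymize` helper are hypothetical and deliberately loose, and the phone pattern targets North American-style numbers only.

```python
import re

EMAIL_RE = re.compile(r"\b[\w.+-]+@[\w-]+\.[\w.-]+\b")
# Loose pattern for numbers such as 555-123-4567 or (555) 123-4567.
PHONE_RE = re.compile(r"\(?\b\d{3}\)?[-.\s]?\d{3}[-.\s]\d{4}\b")


def anonymize(text: str, known_names: list[str]) -> str:
    """Mask emails and phone numbers, then map each known name to 'Person N'."""
    text = EMAIL_RE.sub("[EMAIL]", text)
    text = PHONE_RE.sub("[PHONE]", text)
    mapping = {name: f"Person {i}" for i, name in enumerate(known_names, start=1)}
    if mapping:
        # One combined pattern, longest names first, so "Ann Lee" wins over "Ann"
        # and a pseudonym is never re-substituted by a later name.
        alternatives = "|".join(re.escape(n) for n in sorted(mapping, key=len, reverse=True))
        pattern = re.compile(rf"\b({alternatives})\b")
        text = pattern.sub(lambda m: mapping[m.group(1)], text)
    return text


note = "Jane (jane.doe@example.com, 555-123-4567) said Bob fixed it. Jane thanked Bob."
print(anonymize(note, ["Jane", "Bob"]))
# Person 1 ([EMAIL], [PHONE]) said Person 2 fixed it. Person 1 thanked Person 2.
```

Pattern matching only catches what it is told to look for. In practice the name list would come from a named-entity recognizer (spaCy includes one) followed by manual review, since a single missed name is a privacy failure.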

Conclusion

Creating a meaningful podcast from scatological documents using AI involves navigating the challenges of data processing, leveraging the power of NLP techniques, and carefully considering the ethical implications. By employing AI-powered tools for data analysis, synthesis, and script generation, it's possible to transform seemingly useless data into a compelling and insightful audio narrative. This demonstrates the versatility and transformative potential of AI in podcast creation.

Ready to transform your own 'waste' into 'words'? Let AI unlock the stories hidden within your data, even from the most unconventional sources, and turn them into unique, engaging podcast content.

Featured Posts

-

The Next Pope How Franciss Papacy Will Shape The Conclave

Apr 22, 2025

The Next Pope How Franciss Papacy Will Shape The Conclave

Apr 22, 2025 -

500 Million Bread Price Fixing Settlement Key Hearing Scheduled For May

Apr 22, 2025

500 Million Bread Price Fixing Settlement Key Hearing Scheduled For May

Apr 22, 2025 -

Swedens Tanks Finlands Troops A Pan Nordic Defense Force

Apr 22, 2025

Swedens Tanks Finlands Troops A Pan Nordic Defense Force

Apr 22, 2025 -

Trump Supporter Ray Epps Defamation Lawsuit Against Fox News Details Of The Jan 6th Case

Apr 22, 2025

Trump Supporter Ray Epps Defamation Lawsuit Against Fox News Details Of The Jan 6th Case

Apr 22, 2025 -

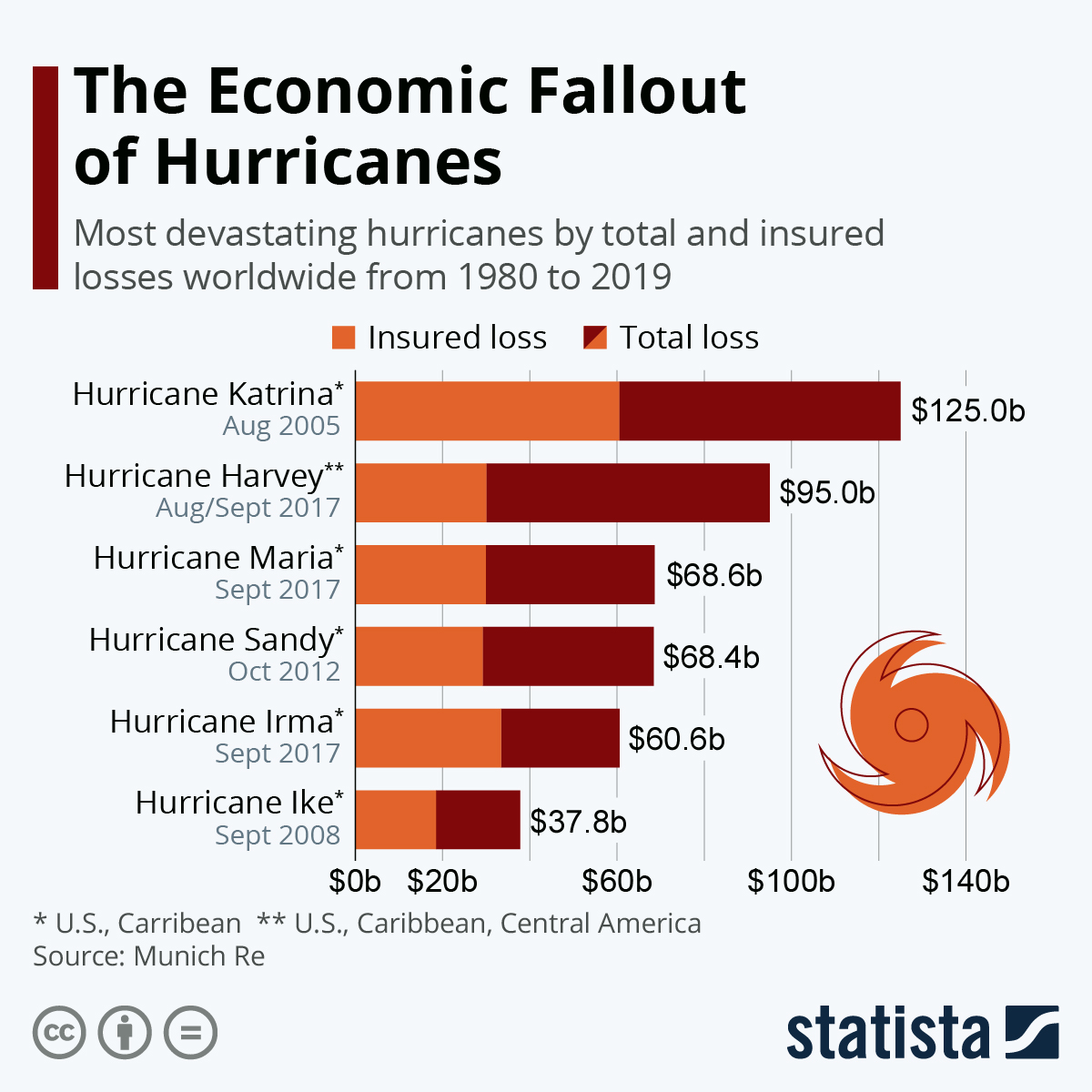

The Economic Fallout How Trumps Trade Actions Affected Us Financial Power

Apr 22, 2025

The Economic Fallout How Trumps Trade Actions Affected Us Financial Power

Apr 22, 2025

Latest Posts

-

Indian Stock Market Rally Sensex Nifty Hit New Highs Key Gainers And Losers

May 10, 2025

Indian Stock Market Rally Sensex Nifty Hit New Highs Key Gainers And Losers

May 10, 2025 -

Sensex 600 Nifty

May 10, 2025

Sensex 600 Nifty

May 10, 2025 -

Market Rally Sensex And Nifty Climb Ultra Tech Experiences A Decline

May 10, 2025

Market Rally Sensex And Nifty Climb Ultra Tech Experiences A Decline

May 10, 2025 -

Indian Stock Market Update Sensex Nifty Rise Ultra Tech Cement Falls

May 10, 2025

Indian Stock Market Update Sensex Nifty Rise Ultra Tech Cement Falls

May 10, 2025 -

Stock Market Today Sensex Up 200 Nifty Above 18 600 Ultra Tech Dips

May 10, 2025

Stock Market Today Sensex Up 200 Nifty Above 18 600 Ultra Tech Dips

May 10, 2025