Google Vs. OpenAI: A Deep Dive Into I/O And Io Differences

Google's Approach to I/O and its Implications

Google's Infrastructure and Scalability

Google's approach to I/O is deeply intertwined with its massive infrastructure. Google runs on the same global data-center infrastructure that powers Google Cloud Platform (GCP), and that infrastructure provides the scalability and efficiency needed to handle I/O operations at very large scale. It is what lets Google store, move, and process the immense datasets required to train and deploy its AI models.

- Distributed Systems: Google uses distributed systems to handle massive I/O workloads. Reads, writes, and computation are spread across many machines so that no single machine's disk or network link becomes a bottleneck.

- TensorFlow's Role: TensorFlow, Google's open-source machine learning framework, helps manage I/O efficiently. Its `tf.data` API provides tools for building input pipelines that read, transform, shuffle, and batch large datasets.

- Speed and Scalability: Google's infrastructure is built for high-throughput, low-latency I/O, which supports fast processing of data streams and rapid model training. The same scalability lets Google serve very large request volumes for services such as Google Search and Google Assistant while keeping response times stable.
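TensorFlow's `tf.data` API can prefetch data so that reading the next batch overlaps with training on the current one. The following is a minimal plain-Python sketch of that idea, not TensorFlow's implementation: a background thread fills a bounded queue with upcoming batches while the main loop processes the current one. `read_batch` and `train_step` are hypothetical placeholders for a real storage read and a real training step. In an actual TensorFlow pipeline, the equivalent step is calling `.prefetch(tf.data.AUTOTUNE)` on a `tf.data.Dataset`.

```python
import queue
import threading
from typing import Iterator, List

_SENTINEL = object()  # marks the end of the stream


def read_batch(index: int, batch_size: int) -> List[int]:
    """Hypothetical stand-in for a slow read from disk or network storage."""
    start = index * batch_size
    return list(range(start, start + batch_size))


def prefetching_reader(num_batches: int, batch_size: int, buffer_size: int = 2) -> Iterator[List[int]]:
    """Yield batches while a background thread reads ahead into a bounded buffer."""
    buffer: "queue.Queue[object]" = queue.Queue(maxsize=buffer_size)

    def producer() -> None:
        for i in range(num_batches):
            buffer.put(read_batch(i, batch_size))  # blocks when the buffer is full
        buffer.put(_SENTINEL)

    threading.Thread(target=producer, daemon=True).start()
    while True:
        item = buffer.get()
        if item is _SENTINEL:
            return
        yield item  # the consumer works on this batch while the producer reads ahead


def train_step(batch: List[int]) -> int:
    """Hypothetical compute step; here it just sums the batch."""
    return sum(batch)


if __name__ == "__main__":
    results = [train_step(b) for b in prefetching_reader(num_batches=4, batch_size=3)]
    print(results)
```

Running the script prints the per-batch sums `[3, 12, 21, 30]`. The bounded queue (`buffer_size`) caps memory use: the reader can get at most that many batches ahead of the consumer.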

Example: Google Search returns relevant results within milliseconds largely because of its efficient I/O infrastructure. The system processes billions of queries daily, and each one requires low-latency access to, and processing of, vast amounts of indexed data.
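Distributing a workload like search across machines is commonly done by sharding the index, fanning each query out to every shard in parallel, and merging the partial results (a scatter-gather pattern). The toy, single-process sketch below illustrates that general pattern under simple assumptions: threads stand in for machines, documents are assigned to shards by a CRC32 hash, and relevance is just a count of matching terms. `NUM_SHARDS` and all function names are hypothetical; this is not a description of how Google Search is built.

```python
import zlib
from collections import defaultdict
from concurrent.futures import ThreadPoolExecutor
from typing import Dict, List, Tuple

NUM_SHARDS = 3  # hypothetical number of index servers


def shard_for(doc_id: str) -> int:
    """Assign a document to a shard with a stable hash (crc32 is deterministic across runs)."""
    return zlib.crc32(doc_id.encode("utf-8")) % NUM_SHARDS


def build_shards(docs: Dict[str, str]) -> List[Dict[str, Dict[str, int]]]:
    """Build one tiny inverted index (term -> {doc_id: count}) per shard."""
    shards: List[Dict[str, Dict[str, int]]] = [defaultdict(dict) for _ in range(NUM_SHARDS)]
    for doc_id, text in docs.items():
        index = shards[shard_for(doc_id)]
        for term in text.lower().split():
            index[term][doc_id] = index[term].get(doc_id, 0) + 1
    return shards


def search_shard(index: Dict[str, Dict[str, int]], terms: List[str]) -> List[Tuple[str, int]]:
    """Score documents on one shard by their total count of matching terms."""
    scores: Dict[str, int] = defaultdict(int)
    for term in terms:
        for doc_id, count in index.get(term, {}).items():
            scores[doc_id] += count
    return list(scores.items())


def search(shards: List[Dict[str, Dict[str, int]]], query: str, top_k: int = 2) -> List[Tuple[str, int]]:
    """Scatter the query to every shard in parallel, then gather and merge the hits."""
    terms = query.lower().split()
    with ThreadPoolExecutor(max_workers=len(shards)) as pool:
        partials = pool.map(lambda idx: search_shard(idx, terms), shards)
        merged = [hit for part in partials for hit in part]
    # Highest score first; ties broken by document id for a stable order.
    return sorted(merged, key=lambda hit: (-hit[1], hit[0]))[:top_k]
```

For example, with documents `{"a": "fast io at scale", "b": "io io pipelines", "c": "model training"}`, `search(build_shards(docs), "io")` returns `[("b", 2), ("a", 1)]` no matter which shard each document lands on, because the merge step re-sorts all partial hits.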

I/O in Google's AI Models

I/O is deeply integrated into the design and operation of Google's AI models, such as BERT (Bidirectional Encoder Representations from Transformers) and LaMDA (Language Model for Dialogue Applications). Efficient I/O is crucial in both the training and inference phases.

- Data Preprocessing: Google preprocesses vast datasets ahead of training so that the training loop reads data in a form it can consume efficiently. This includes data cleaning, transformation, and feature engineering.

- Model Deployment: For efficient inference, Google optimizes model deployment to minimize latency and maximize throughput, ensuring quick response times for applications using its AI models.

- Streaming Data Handling: Google's infrastructure supports the efficient handling of streaming data, enabling real-time processing and analysis in applications requiring immediate feedback.
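The preprocessing and streaming ideas above can be sketched as a chain of Python generators: each stage handles one record at a time and passes it on, so the full dataset never has to fit in memory and downstream stages can start before the stream ends. This is a minimal illustration with assumed, hypothetical cleaning and feature rules (whitespace normalization, lowercasing, word splitting), not Google's actual preprocessing.

```python
import re
from typing import Iterable, Iterator, List


def clean(records: Iterable[str]) -> Iterator[str]:
    """Drop empty records and collapse runs of whitespace."""
    for record in records:
        text = re.sub(r"\s+", " ", record).strip()
        if text:
            yield text


def transform(records: Iterable[str]) -> Iterator[List[str]]:
    """Lowercase and split each record into word tokens (a toy feature step)."""
    for record in records:
        yield record.lower().split()


def batch(items: Iterable[List[str]], size: int) -> Iterator[List[List[str]]]:
    """Group items into fixed-size batches; the last batch may be smaller."""
    current: List[List[str]] = []
    for item in items:
        current.append(item)
        if len(current) == size:
            yield current
            current = []
    if current:
        yield current


if __name__ == "__main__":
    raw_stream = iter(["Hello   World", "   ", "Streaming DATA\tpipeline", "one more"])
    for b in batch(transform(clean(raw_stream)), size=2):
        print(b)
```

The script prints two batches, `[['hello', 'world'], ['streaming', 'data', 'pipeline']]` and then `[['one', 'more']]`; the blank record is dropped by `clean`.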

Example: In LaMDA, efficient I/O is crucial for handling the continuous flow of user input and generating coherent, contextually relevant responses, so the system is designed to minimize the latency between user input and model output.

OpenAI's Approach to I/O and its Implications

OpenAI's Model-Centric Approach

OpenAI's strategy differs significantly from Google's. Its primary focus is on developing and deploying powerful language models, like GPT-3 and GPT-4. This model-centric approach shapes its I/O strategies, prioritizing ease of access through APIs rather than building expansive, self-contained infrastructure.

- API Reliance: OpenAI primarily provides access to its models through APIs, simplifying integration for developers but limiting direct control over I/O processes.

- Customization Limitations: OpenAI's models are powerful, but its API-driven approach offers less flexibility for customizing I/O pipelines than Google's infrastructure-centric approach.

- Infrastructure Abstraction: Developers interacting with OpenAI models don't directly manage the underlying infrastructure, relying instead on OpenAI's managed services.

Example: While Google offers more control over data pipelines within its infrastructure, OpenAI's ease of integration through APIs simplifies model deployment for developers who prioritize rapid prototyping and application development.
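From the developer's side, API access means sending an HTTPS request rather than managing any servers. The sketch below only builds (and does not send) a request for OpenAI's Chat Completions endpoint using the Python standard library. The model name is a placeholder assumption, the API key is assumed to live in an `OPENAI_API_KEY` environment variable, and in practice many developers would use OpenAI's official SDK instead; check the current API reference for the exact fields your chosen model accepts.

```python
import json
import os
import urllib.request

API_URL = "https://api.openai.com/v1/chat/completions"


def build_chat_request(prompt: str, model: str = "gpt-4") -> urllib.request.Request:
    """Build (but do not send) a Chat Completions HTTP request."""
    body = {
        "model": model,  # placeholder model name; substitute one your account can use
        "messages": [{"role": "user", "content": prompt}],
    }
    headers = {
        "Content-Type": "application/json",
        # Assumption: the key is stored in the OPENAI_API_KEY environment variable.
        "Authorization": f"Bearer {os.environ.get('OPENAI_API_KEY', '')}",
    }
    return urllib.request.Request(
        API_URL, data=json.dumps(body).encode("utf-8"), headers=headers, method="POST"
    )


if __name__ == "__main__":
    req = build_chat_request("Summarize the main idea of I/O in one sentence.")
    print(req.get_method(), req.full_url)
    # Sending it would be urllib.request.urlopen(req), which requires a valid key.
```

Everything behind that URL (storage, batching, hardware) is managed by OpenAI, which is the trade-off described above: little to operate, but little to tune.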

I/O Considerations in OpenAI's APIs

Using OpenAI APIs, like the GPT-3 API, introduces specific I/O considerations that developers must address for optimal performance.

- Prompt Engineering: The design and structure of the input prompt significantly impact the I/O efficiency and the quality of the model's response. Carefully crafted prompts can minimize the number of tokens required, reducing costs and improving response times.

- Token Limits: OpenAI's APIs impose token limits: each model has a maximum context length that the input prompt and the generated output share. Developers need to manage these limits to avoid truncated responses or requests that fail because they exceed the limit.

- Efficient Data Formatting: The way data is formatted for API calls influences I/O efficiency. Optimizing data structures and minimizing unnecessary data transfer can improve performance.
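Managing a token limit in practice often means trimming older conversation turns so the prompt, plus room for the reply, fits within the model's context length. The sketch below assumes a rough rule of thumb of about four characters per token for English text; exact counts require the model's own tokenizer (for example, OpenAI's `tiktoken` library). The `context_limit` and `reserved_for_output` values are hypothetical parameters to set from the documentation of the model you use.

```python
import math
from typing import List

CHARS_PER_TOKEN = 4  # rough rule of thumb for English text; real counts need the model's tokenizer


def estimate_tokens(text: str) -> int:
    """Very rough token estimate; use the model's actual tokenizer for exact counts."""
    return math.ceil(len(text) / CHARS_PER_TOKEN)


def fit_to_budget(messages: List[str], context_limit: int, reserved_for_output: int) -> List[str]:
    """Keep the most recent messages whose estimated tokens fit in the input budget."""
    budget = context_limit - reserved_for_output
    kept: List[str] = []
    used = 0
    for message in reversed(messages):  # walk from newest to oldest
        cost = estimate_tokens(message)
        if used + cost > budget:
            break  # this message and everything older are dropped
        kept.append(message)
        used += cost
    return list(reversed(kept))  # restore chronological order
```

For instance, with three 40-character messages (about 10 tokens each), `fit_to_budget(history, context_limit=30, reserved_for_output=10)` keeps only the two most recent ones. Dropping the oldest messages first is only one possible policy.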

Example: A poorly structured prompt might require more tokens, increasing the cost and processing time. Conversely, a concise and well-structured prompt can significantly improve the efficiency of the API interaction.
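Formatting choices also affect how much text each request carries. The sketch below compares three encodings of the same hypothetical records: pretty-printed JSON, compact JSON produced with `json.dumps(..., separators=(",", ":"))`, and a CSV-like layout that states each key only once. Character count is only a proxy here; actual token savings depend on the model's tokenizer, and a terser format helps only if the prompt makes that format clear to the model.

```python
import json

# Hypothetical records a developer might include in a prompt.
records = [
    {"id": 1, "name": "sensor-a", "reading": 21.5},
    {"id": 2, "name": "sensor-b", "reading": 19.0},
]

# Pretty-printed JSON: readable, but indentation and newlines add characters.
pretty = json.dumps(records, indent=4)

# Compact JSON: no spaces after separators and no indentation.
compact = json.dumps(records, separators=(",", ":"))

# CSV-like layout: a header row names each key once instead of repeating it per record.
rows = "id,name,reading\n" + "\n".join(
    f'{r["id"]},{r["name"]},{r["reading"]}' for r in records
)

for label, text in [("pretty", pretty), ("compact", compact), ("csv-like", rows)]:
    print(f"{label:>8}: {len(text)} characters")
```

Both the compact and CSV-like versions carry the same values as the pretty-printed one in fewer characters.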

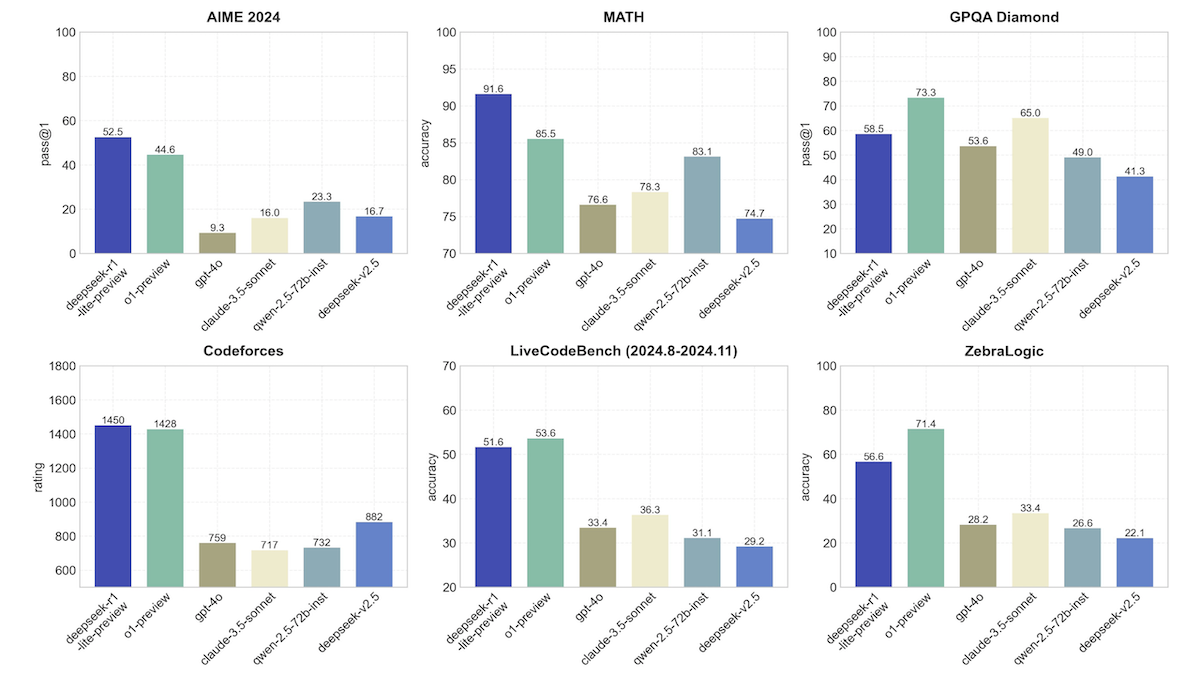

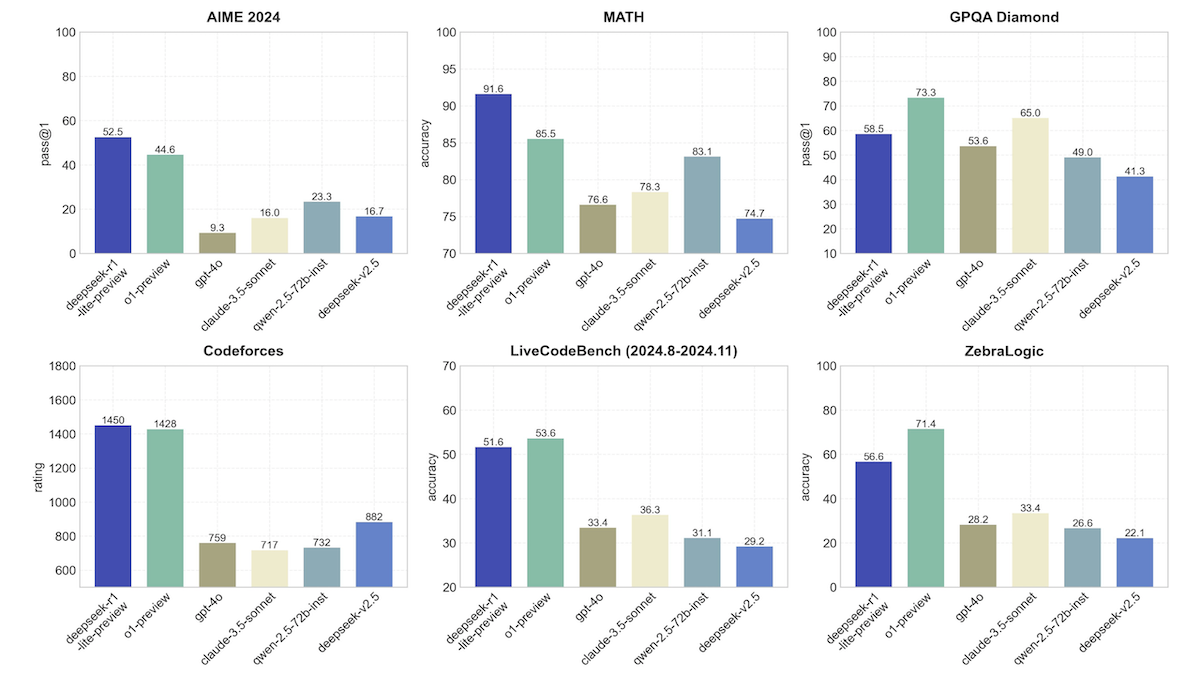

Key Differences between Google and OpenAI's I/O Handling

| Feature | Google | OpenAI |
|---|---|---|
| Scalability | Extremely high, massive infrastructure | High, but relies on managed services |
| I/O Control | High, direct control over data pipelines | Low, limited control through APIs |
| Data Handling | Highly efficient, optimized for large datasets | Efficient for API interactions, token limits apply |
| Ease of Integration | Requires more technical expertise | Easier integration through APIs |

These differences significantly impact developers. Google's approach offers greater control and scalability but requires more technical expertise. OpenAI's approach offers easier integration but sacrifices control and customization.

Conclusion

The core distinction between Google's and OpenAI's handling of I/O lies in their differing priorities: Google prioritizes scalable infrastructure and fine-grained control, while OpenAI prioritizes easy access to powerful pre-trained models through APIs. Understanding these differences is vital for developers selecting the best tools for their AI projects. The choice depends on the project's specific requirements for scalability, control over I/O processes, and the level of customization needed.

Call to Action: Deepen your understanding of the nuances of Google's and OpenAI's I/O capabilities. Explore the documentation each company publishes, such as the TensorFlow and Google Cloud documentation and the OpenAI API reference, so you can make informed decisions in your AI projects.

Featured Posts

-

Le Parcours Fascinant De Melanie Thierry

May 25, 2025

Le Parcours Fascinant De Melanie Thierry

May 25, 2025 -

The Kyle And Teddi Dog Walker Incident A Full Account

May 25, 2025

The Kyle And Teddi Dog Walker Incident A Full Account

May 25, 2025 -

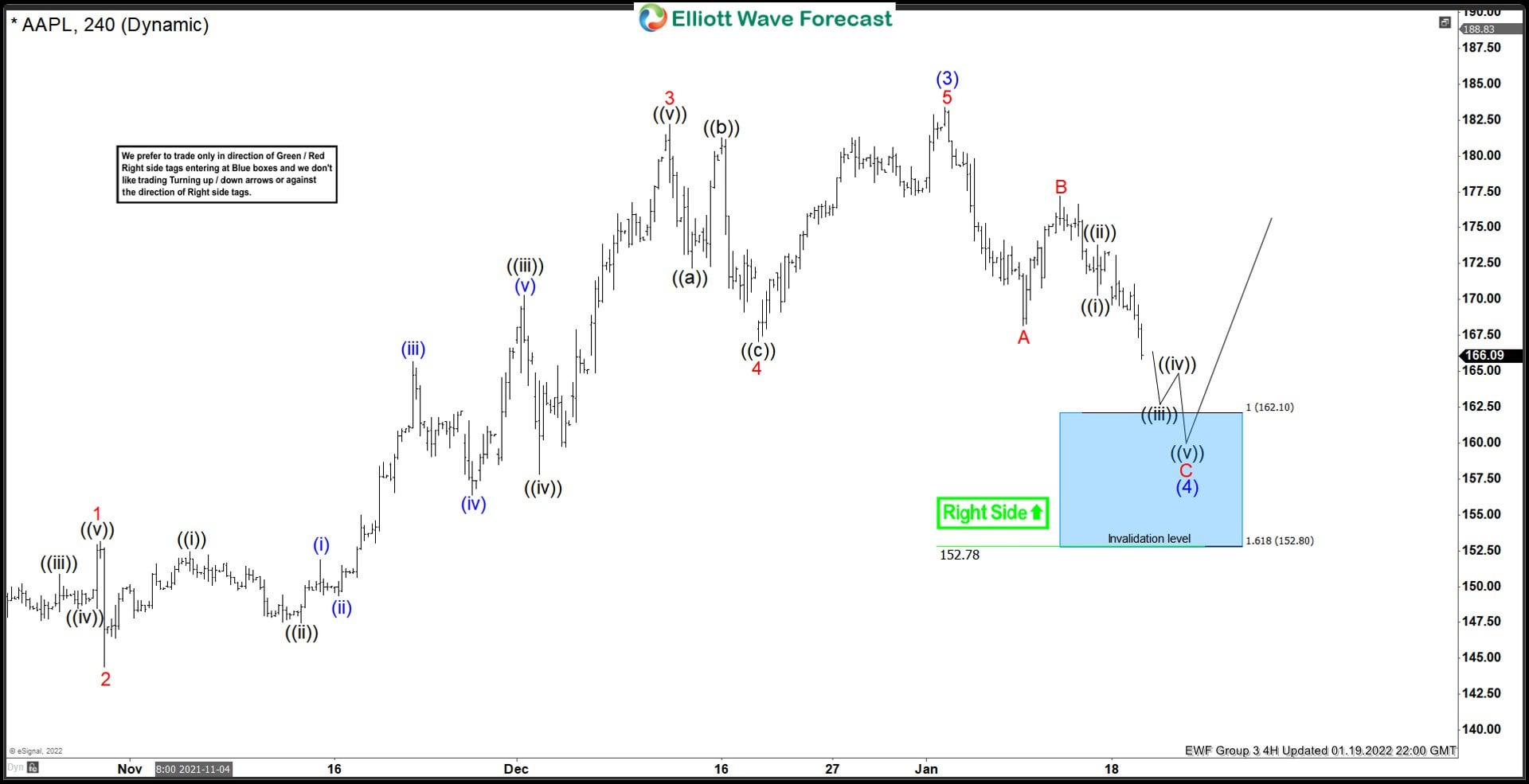

Apple Stock Dips Below Key Levels Before Q2 Earnings

May 25, 2025

Apple Stock Dips Below Key Levels Before Q2 Earnings

May 25, 2025 -

Armando Iannucci Has His Satirical Edge Dulled

May 25, 2025

Armando Iannucci Has His Satirical Edge Dulled

May 25, 2025 -

Yevrobachennya 2025 Chi Spravdyatsya Peredbachennya Konchiti Vurst

May 25, 2025

Yevrobachennya 2025 Chi Spravdyatsya Peredbachennya Konchiti Vurst

May 25, 2025