OpenAI's ChatGPT: FTC Probe Into Data Privacy And AI Practices

FTC's Investigation into ChatGPT: Scope and Concerns

The Federal Trade Commission (FTC), the U.S. agency responsible for protecting consumers and preventing anti-competitive business practices, opened its investigation into OpenAI's ChatGPT over concerns about the company's data handling practices and potential violations of consumer protection law. The FTC's authority covers unfair or deceptive acts or practices in commerce, which makes data privacy a key area of its oversight. The investigation aims to determine whether OpenAI engaged in such practices in collecting, using, and protecting user data.

Specific concerns raised by the FTC and other stakeholders include:

- Data collection practices and the potential for misuse of personal information: ChatGPT's training data includes vast amounts of text and code from the internet, raising concerns about the inclusion and potential misuse of personal information.

- Lack of transparency regarding data usage and algorithmic decision-making: The opacity surrounding how ChatGPT processes and uses user data raises concerns about accountability and the potential for biased or discriminatory outcomes. Users are often unaware of the extent to which their data is being collected and utilized.

- Potential for biased outputs and discriminatory outcomes: The AI model learns from its training data, which may contain biases reflecting societal prejudices. This can result in ChatGPT generating outputs that perpetuate or amplify these biases.

- Concerns about the security of user data and the risk of breaches: The sheer volume of data processed by ChatGPT makes it a potentially attractive target for cyberattacks. Robust security measures are crucial to prevent data breaches and protect user privacy.

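- Violation of consumer protection laws related to data privacy: The investigation seeks to determine whether OpenAI's data practices violate Section 5 of the FTC Act, which prohibits unfair or deceptive acts or practices. State privacy laws such as the California Consumer Privacy Act (CCPA) are enforced separately by state authorities, but they illustrate the kinds of obligations that may apply to the same practices.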

Data Privacy Issues Surrounding ChatGPT and Similar AI Models

ChatGPT and similar large language models (LLMs) can process highly sensitive data, including personal conversations, health information, financial details, and other private communications shared by users. This data may also be used to train and improve the model, which raises significant data privacy concerns.

The challenge lies in anonymizing and securing this data. While OpenAI may employ techniques to de-identify user data, the risk of re-identification remains, particularly given the sophistication of modern data analysis tools. Even seemingly anonymized data can be vulnerable to re-identification through various methods, potentially exposing users' private information.
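The re-identification risk can be made concrete with a small k-anonymity check. The sketch below is illustrative only: the records, the field names, and the choice of quasi-identifiers are hypothetical and do not describe OpenAI's data or methods. It shows that removing names does not help if a combination of remaining attributes is unique to one person.

```python
from collections import Counter

# Hypothetical "de-identified" records: names removed, quasi-identifiers kept.
records = [
    {"zip": "70601", "birth_year": 1984, "gender": "F"},
    {"zip": "70601", "birth_year": 1984, "gender": "F"},
    {"zip": "70601", "birth_year": 1990, "gender": "M"},
    {"zip": "70605", "birth_year": 1975, "gender": "F"},
]

# Assumed quasi-identifiers; a real assessment would choose these per dataset.
QUASI_IDENTIFIERS = ("zip", "birth_year", "gender")


def group_sizes(rows, keys=QUASI_IDENTIFIERS):
    """Count how many rows share each combination of quasi-identifier values."""
    return Counter(tuple(row[k] for k in keys) for row in rows)


def k_anonymity(rows, keys=QUASI_IDENTIFIERS):
    """Return the size of the smallest group; k == 1 means someone is unique."""
    return min(group_sizes(rows, keys).values())


def unique_rows(rows, keys=QUASI_IDENTIFIERS):
    """Return the rows whose quasi-identifier combination appears only once."""
    sizes = group_sizes(rows, keys)
    return [row for row in rows if sizes[tuple(row[k] for k in keys)] == 1]


if __name__ == "__main__":
    print("k =", k_anonymity(records))
    print("uniquely identifiable rows:", unique_rows(records))
```

A dataset with k equal to 1 contains at least one person who can be singled out by linking these attributes to an outside source, such as a public register.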

Specific data privacy risks associated with ChatGPT include:

- Data breaches and unauthorized access to user information: A data breach could expose millions of users' private conversations and personal information.

- Use of user data for purposes beyond what was disclosed: Users may not be fully aware of how their data is used beyond improving the model's performance.

- Potential for profiling and discrimination based on user data: Conversation histories can reveal interests, health conditions, or finances, which could be used to profile users. Separately, biases in the training data could lead the model to produce discriminatory outcomes.

Implications for AI Development and Ethical Considerations

The FTC's investigation into ChatGPT has significant implications for the broader AI landscape. It highlights the urgent need for responsible AI development, emphasizing the importance of prioritizing ethical considerations throughout the entire AI lifecycle – from design and development to deployment and maintenance.

This investigation signifies a potential shift towards increased regulatory scrutiny of AI companies and their data practices. It could pave the way for stricter data privacy regulations and standards specifically tailored to AI technologies. Furthermore, the probe underscores the importance of fostering transparency and accountability in AI development processes.

Key implications of the FTC's investigation include:

- Increased regulatory scrutiny of AI companies and their data practices: We can expect greater oversight and stricter enforcement of existing data privacy regulations.

- Development of stricter data privacy regulations and standards for AI: New legislation may be enacted to address the unique challenges posed by AI technologies.

- Shift towards more transparent and accountable AI development processes: Companies will be pressured to be more transparent about their data practices and algorithms.

The Future of ChatGPT and Responsible AI Innovation

The FTC's investigation is likely to influence future iterations of ChatGPT and similar AI models. OpenAI may need to implement significant changes in its data handling practices, prioritizing user privacy and data security. This might include improved data anonymization techniques, enhanced security measures, and greater transparency about data usage.
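As one illustration of what a basic anonymization step might look like, the sketch below redacts email addresses and US-style phone numbers from text before it is stored. This is an assumption-laden example, not a description of OpenAI's pipeline: the patterns and the `redact` helper are hypothetical, and pattern matching of this kind misses many forms of personal information.

```python
import re

# Simplified patterns for illustration; real PII detection needs far more care.
EMAIL = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
US_PHONE = re.compile(r"\b\d{3}[-.\s]\d{3}[-.\s]\d{4}\b")


def redact(text):
    """Replace matched email addresses and phone numbers with placeholders."""
    text = EMAIL.sub("[EMAIL]", text)
    return US_PHONE.sub("[PHONE]", text)


if __name__ == "__main__":
    print(redact("Mail jane.doe@example.com or call 555-123-4567."))
```

Redaction like this reduces direct identifiers but does not remove the re-identification risk from context, which is why it is usually combined with access controls and retention limits.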

Responsible AI innovation depends on a collaborative effort among AI developers, policymakers, and the broader public. AI developers must prioritize user privacy and ethical considerations from the outset, designing systems with built-in privacy protections and accountability mechanisms. That requires robust data governance frameworks together with privacy-preserving AI techniques. Stronger regulatory frameworks, coupled with industry self-regulation and ethical guidelines, will help shape a future in which AI innovation is both impactful and responsible.
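Differential privacy is one widely studied example of a privacy-preserving technique. The sketch below applies the Laplace mechanism to a simple counting query; the function names, the sample data, and the epsilon value are assumptions chosen for illustration, and nothing here reflects how any particular company handles its data.

```python
import random


def laplace_noise(scale, rng):
    """Sample Laplace(0, scale) noise.

    The difference of two independent exponential variables with mean
    `scale` follows a Laplace distribution with that scale.
    """
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)


def private_count(values, predicate, epsilon, rng=None):
    """Return a noisy count of the values that satisfy `predicate`.

    Adding or removing one record changes a count by at most 1
    (sensitivity 1), so the noise scale is 1 / epsilon.
    """
    rng = rng or random.Random()
    true_count = sum(1 for v in values if predicate(v))
    return true_count + laplace_noise(1.0 / epsilon, rng)


if __name__ == "__main__":
    ages = [23, 35, 41, 29, 52, 38, 61, 27]  # hypothetical user attribute
    print(private_count(ages, lambda age: age >= 40, epsilon=1.0))
```

A smaller epsilon adds more noise and gives stronger privacy at the cost of accuracy, so the parameter is a policy choice as much as a technical one.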

Conclusion

The FTC's investigation into OpenAI's ChatGPT underscores the need for responsible AI development and rigorous data protection practices. The probe highlights the risks associated with large language models and the growing importance of addressing data privacy as AI technology advances. Its outcome is likely to shape AI regulation and development, influencing how companies handle user data and put ethical AI practices into effect.

Stay informed about developments in the FTC's investigation of OpenAI's ChatGPT and the evolving landscape of AI data privacy. Understanding how ChatGPT and similar AI models handle data helps you protect your own information and take part in shaping responsible AI innovation.

Featured Posts

-

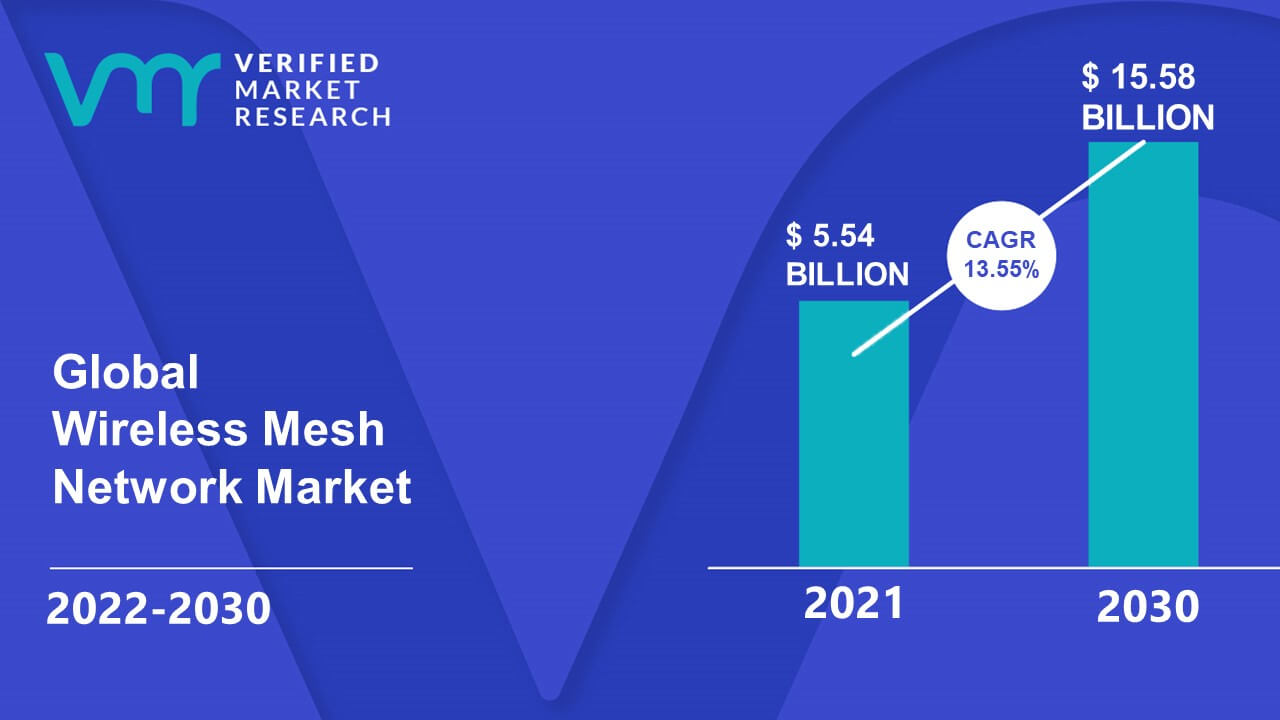

8 Cagr Projected For Wireless Mesh Networks Market Size

May 09, 2025

8 Cagr Projected For Wireless Mesh Networks Market Size

May 09, 2025 -

Dangotes Influence On Nnpc Petrol Prices A Thisdaylive Analysis

May 09, 2025

Dangotes Influence On Nnpc Petrol Prices A Thisdaylive Analysis

May 09, 2025 -

Find Live Music And Events In Lake Charles This Easter Weekend

May 09, 2025

Find Live Music And Events In Lake Charles This Easter Weekend

May 09, 2025 -

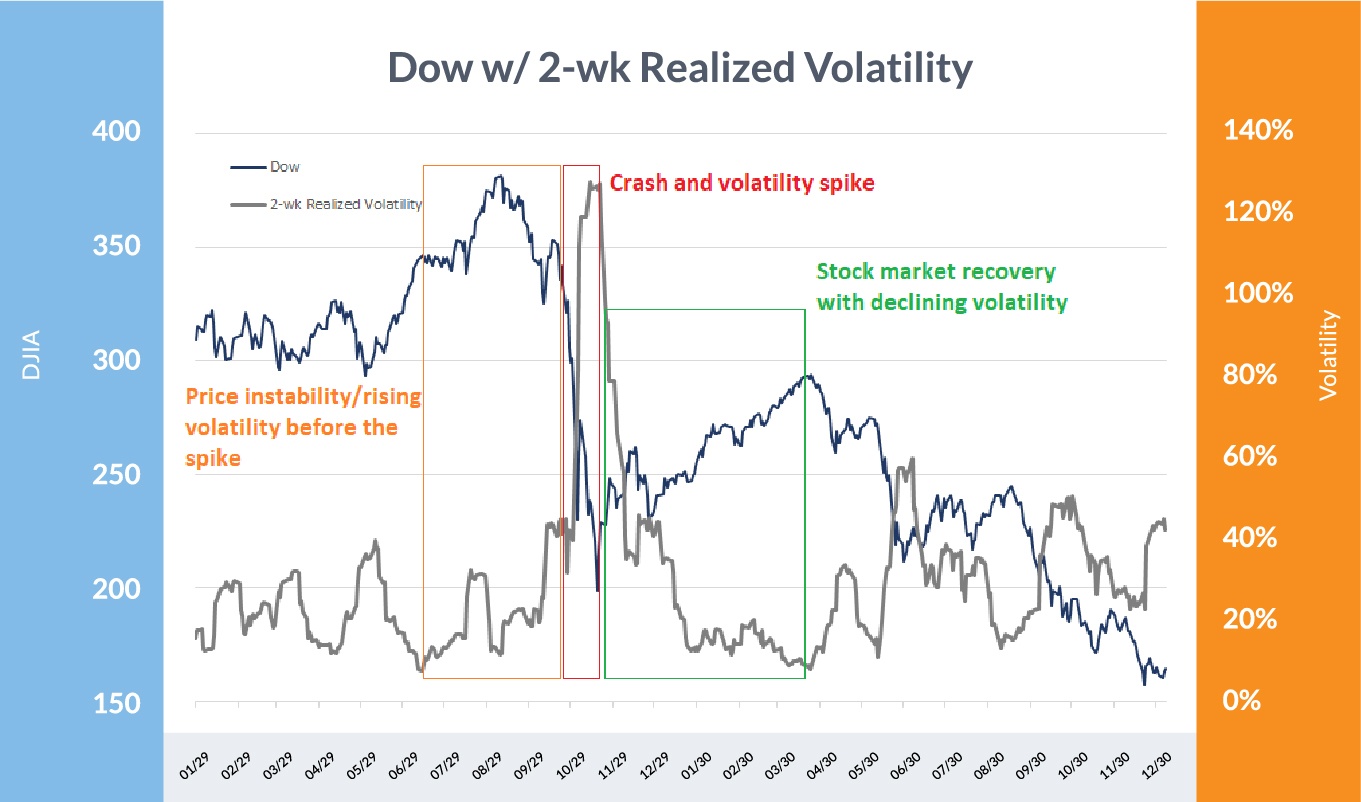

Elon Musks Billions In Decline Analysis Of Recent Market Volatility And Its Impact

May 09, 2025

Elon Musks Billions In Decline Analysis Of Recent Market Volatility And Its Impact

May 09, 2025 -

Daycares Impact On Children A Psychologists Viral Podcast And Expert Responses

May 09, 2025

Daycares Impact On Children A Psychologists Viral Podcast And Expert Responses

May 09, 2025

Latest Posts

-

Stock Market Valuation Concerns Bof A Offers Reassurance To Investors

May 10, 2025

Stock Market Valuation Concerns Bof A Offers Reassurance To Investors

May 10, 2025 -

Relaxed Regulations Urged Indian Insurers And Bond Forward Contracts

May 10, 2025

Relaxed Regulations Urged Indian Insurers And Bond Forward Contracts

May 10, 2025 -

Understanding High Stock Market Valuations Bof As Viewpoint

May 10, 2025

Understanding High Stock Market Valuations Bof As Viewpoint

May 10, 2025 -

Bond Forward Market Indian Insurers Advocate For Simplified Rules

May 10, 2025

Bond Forward Market Indian Insurers Advocate For Simplified Rules

May 10, 2025 -

Whats App Spyware Litigation Metas 168 Million Loss And The Path Forward

May 10, 2025

Whats App Spyware Litigation Metas 168 Million Loss And The Path Forward

May 10, 2025