OpenAI Facing FTC Investigation: Examining The Regulatory Landscape Of AI

The FTC Investigation into OpenAI

The Federal Trade Commission (FTC) launched an investigation into OpenAI, focusing on potential violations of Section 5 of the FTC Act, which prohibits unfair or deceptive acts or practices. While the specifics of the investigation remain confidential, the inquiry likely centers on the potential harms caused by OpenAI's large language models, such as GPT-4.

Potential concerns:

- Unfair or deceptive trade practices related to data handling: Concerns exist regarding the collection, use, and protection of personal data used to train OpenAI's models. Questions of informed consent and data minimization are likely to be central to the investigation.

- Lack of transparency regarding AI model training data and algorithms: The "black box" nature of many AI systems makes it difficult to understand how they arrive at their outputs. This lack of transparency raises concerns about accountability and the potential for bias.

- Potential for biased outputs and discriminatory outcomes: AI models trained on biased data can perpetuate and amplify existing societal biases, leading to discriminatory outcomes in areas like loan applications, hiring processes, and criminal justice.

- Insufficient safeguards against misuse of the technology: The potential for malicious actors to utilize AI for harmful purposes, such as generating deepfakes or spreading misinformation, necessitates robust safeguards.

This investigation is an important signal. It indicates that the FTC intends to actively scrutinize the ethical and societal implications of AI development and deployment, which may pave the way for stricter AI regulation in the future.

The Current State of AI Regulation Globally

The regulatory landscape for AI is still developing globally, with various approaches adopted across different jurisdictions.

US Regulatory Landscape

The US currently lacks comprehensive, specific legislation for AI. However, several existing laws indirectly address AI-related issues:

- Data privacy laws: The California Consumer Privacy Act (CCPA) and other state-level laws provide some protection for personal data, although their applicability to AI training data is still being debated.

- Consumer protection laws: The FTC Act can be used to address unfair or deceptive practices involving AI, as seen in the OpenAI investigation.

- Antitrust laws: Concerns exist about the potential for AI companies to monopolize markets, prompting antitrust scrutiny.

Several AI-specific bills are under consideration in Congress, but reaching consensus on a federal framework remains challenging. Applying existing legislation to novel AI technologies also presents significant hurdles, because those laws must be interpreted and adapted to cases they were not written for.

EU's AI Act

The European Union's AI Act represents a more proactive and comprehensive approach to AI regulation. It employs a risk-based approach, categorizing AI systems into different levels of risk:

- Unacceptable risk: AI systems deemed to pose an unacceptable risk, such as those used for social scoring, will be banned.

- High-risk: AI systems used in critical infrastructure, law enforcement, and healthcare will be subject to stringent requirements, including conformity assessments.

- Limited risk: Systems like chatbots will face lighter requirements, chiefly transparency obligations such as telling users that they are interacting with an AI.

- Minimal risk: Systems with minimal risk, such as spam filters and AI-powered video games, will face minimal regulatory oversight.

The EU's AI Act is significant because it establishes a precedent for a risk-based regulatory framework, potentially influencing AI regulation globally. Its impact on global AI development is still to be determined, but it's likely to drive greater accountability and transparency within the AI industry.
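
To make the tiered structure concrete, here is a minimal Python sketch of the risk categories listed above. It is an illustration only, not legal guidance: the names `RiskTier`, `OBLIGATIONS`, `EXAMPLE_USE_CASES`, and `obligations_for` are hypothetical, the obligation summaries paraphrase this article rather than the text of the Act, and a real classification would require legal analysis of the specific system.

```python
from enum import Enum


class RiskTier(Enum):
    """The four risk levels described in the article."""
    UNACCEPTABLE = "unacceptable"
    HIGH = "high"
    LIMITED = "limited"
    MINIMAL = "minimal"


# Paraphrased summaries of what each tier implies (assumption: wording is
# taken from this article, not from the legal text of the AI Act).
OBLIGATIONS = {
    RiskTier.UNACCEPTABLE: "banned",
    RiskTier.HIGH: "stringent requirements, including conformity assessments",
    RiskTier.LIMITED: "lighter requirements",
    RiskTier.MINIMAL: "minimal regulatory oversight",
}

# Hypothetical lookup table with a few of the example use cases named above.
EXAMPLE_USE_CASES = {
    "social scoring": RiskTier.UNACCEPTABLE,
    "critical infrastructure": RiskTier.HIGH,
    "law enforcement": RiskTier.HIGH,
    "healthcare": RiskTier.HIGH,
    "ai-powered video game": RiskTier.MINIMAL,
}


def obligations_for(use_case: str) -> str:
    """Return a one-line summary for a known example use case."""
    tier = EXAMPLE_USE_CASES.get(use_case.strip().lower())
    if tier is None:
        # Anything outside the toy table is deliberately left undecided.
        return "unclassified: needs legal review"
    return f"{tier.value} risk: {OBLIGATIONS[tier]}"


if __name__ == "__main__":
    for case in ("Social scoring", "Healthcare", "Recommendation engine"):
        print(f"{case} -> {obligations_for(case)}")
```

The point of the sketch is the shape of the framework: obligations attach to the tier rather than to the individual product, so the hard regulatory question is which tier a given system falls into.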

Other International Initiatives

Many other countries, including Canada, China, and Japan, and international organizations such as the OECD are developing their own approaches to AI regulation. These initiatives reflect a growing global consensus on the need for responsible AI development and deployment.

Key Challenges in Regulating AI

Developing effective AI regulation presents several significant challenges:

Defining and Classifying AI

Establishing clear and consistent definitions of AI for regulatory purposes is difficult. The rapid evolution of AI technology makes it challenging to create definitions that remain relevant over time.

Balancing Innovation and Safety

Regulators face a delicate balance between fostering innovation and mitigating the potential risks of AI. Overly restrictive regulations could stifle innovation, while insufficient regulation could lead to harmful consequences.

Enforcement and Compliance

Enforcing AI regulations in a rapidly evolving technological landscape is challenging. Regulators need to adapt their approaches to stay ahead of technological developments.

International Cooperation

International collaboration is crucial for creating effective AI regulation. The global nature of AI development requires international cooperation to ensure consistent standards and avoid regulatory fragmentation.

The Future of AI Regulation

The future of AI regulation likely involves increased government oversight, stricter accountability mechanisms, and more robust enforcement. We can expect:

- Increased emphasis on transparency and explainability in AI systems.

- More stringent data privacy protections related to AI training data.

- Development of specific AI safety standards and certification processes.

- Greater focus on addressing AI bias and ensuring fairness.

These regulations will have a significant impact on AI companies, shaping their business models and prompting greater investment in ethical AI development. The role of self-regulation and industry best practices will also be critical in ensuring responsible AI innovation.

Conclusion

The FTC investigation into OpenAI underscores the need for a comprehensive and effective regulatory framework for AI. The complexities of AI regulation call for a balanced approach that fosters innovation while addressing ethical concerns and mitigating potential risks. The future of AI development hinges on the ability of governments, industry, and researchers to collaborate in creating robust AI regulation that ensures responsible technological advancement. Staying informed about developments in AI regulation is the best way to keep up with this rapidly evolving landscape.

Featured Posts

-

Norfolk Mps Supreme Court Challenge Nhs Gender Identity Dispute

May 02, 2025

Norfolk Mps Supreme Court Challenge Nhs Gender Identity Dispute

May 02, 2025 -

The Importance Of Mental Health Literacy Education In Schools And Communities

May 02, 2025

The Importance Of Mental Health Literacy Education In Schools And Communities

May 02, 2025 -

Exclusive Which A Lister Wants To Visit Melissa Gorgas Nj Beach House

May 02, 2025

Exclusive Which A Lister Wants To Visit Melissa Gorgas Nj Beach House

May 02, 2025 -

Lotto Results Wednesday April 16 2025

May 02, 2025

Lotto Results Wednesday April 16 2025

May 02, 2025 -

Bhth Wzyr Altjart Sbl Tezyz Alteawn Alaqtsady Me Jmhwryt Adhrbyjan

May 02, 2025

Bhth Wzyr Altjart Sbl Tezyz Alteawn Alaqtsady Me Jmhwryt Adhrbyjan

May 02, 2025

Latest Posts

-

4 5

May 10, 2025

4 5

May 10, 2025 -

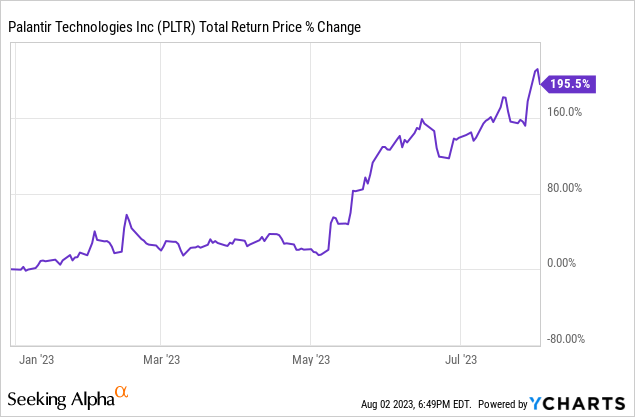

Analyzing Palantirs Potential A 40 Stock Increase By 2025 Is It Achievable

May 10, 2025

Analyzing Palantirs Potential A 40 Stock Increase By 2025 Is It Achievable

May 10, 2025 -

Late To The Game Evaluating Palantir Stock Investment Potential In 2024 For 2025 Gains

May 10, 2025

Late To The Game Evaluating Palantir Stock Investment Potential In 2024 For 2025 Gains

May 10, 2025 -

40 Palantir Stock Growth By 2025 A Realistic Investment Opportunity

May 10, 2025

40 Palantir Stock Growth By 2025 A Realistic Investment Opportunity

May 10, 2025 -

Should You Buy Palantir Stock Before Its Predicted 40 Rise In 2025

May 10, 2025

Should You Buy Palantir Stock Before Its Predicted 40 Rise In 2025

May 10, 2025