OpenAI's ChatGPT Under FTC Scrutiny: A Deep Dive

The FTC's Concerns Regarding ChatGPT and Data Privacy

The FTC's investigation into ChatGPT centers heavily on data privacy concerns. The sheer volume of data processed by the chatbot and the potential for misuse raise significant questions about OpenAI's compliance with existing regulations.

Data Collection and Usage Practices

ChatGPT collects data from several sources. These include:

- User inputs: Conversations, including the prompts users submit and the responses they receive, may be logged.

- User profiles: While not explicitly stated, inferred data about user preferences and behaviors are likely collected.

- Third-party data: OpenAI may integrate data from other sources to enhance the chatbot's functionality.

Transparency about how this data is used is a critical concern. Many users are unaware of how much data is collected or how their information is used. Depending on the user's location, this lack of clarity could amount to a violation of privacy laws such as the California Consumer Privacy Act (CCPA) or the General Data Protection Regulation (GDPR). Experts and consumer advocates have also raised concerns about unauthorized data sharing and the lack of robust consent mechanisms.

Potential for Data Breaches and Misuse

Large language models (LLMs) like ChatGPT are complex systems, and the services built around them are vulnerable to data breaches. A successful attack could expose sensitive user data, including personally identifiable information (PII), intellectual property, and confidential communications. Exposed data could be misused in many ways, from identity theft and targeted advertising to blackmail. OpenAI employs security measures, but their effectiveness is part of what the FTC is scrutinizing. The FTC likely wants to assess how well these measures hold up against sophisticated cyberattacks.
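One common way to limit what a breach can expose is to redact obvious PII before a conversation is stored. The sketch below is only an illustration of that idea and does not describe OpenAI's actual pipeline. The `redact` function and both patterns are hypothetical, and the patterns cover only simple email addresses and US-style phone numbers.

```python
import re

# Hypothetical patterns for illustration; real PII detection needs far broader coverage.
EMAIL = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
PHONE = re.compile(r"\b\d{3}[-.\s]\d{3}[-.\s]\d{4}\b")


def redact(text: str) -> str:
    """Replace email addresses and US-style phone numbers with placeholders."""
    text = EMAIL.sub("[EMAIL]", text)
    return PHONE.sub("[PHONE]", text)


if __name__ == "__main__":
    print(redact("Mail jane.doe@example.com or call 555-123-4567."))
```

Redacting before storage means a leaked log holds placeholders rather than the original values, although it cannot catch PII that does not match a known pattern.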

Algorithmic Bias and Fairness Issues in ChatGPT

Another key aspect of the FTC investigation is algorithmic bias. Large language models are trained on massive datasets that may reflect societal biases, which can lead to unfair or discriminatory outputs.

Identifying and Addressing Bias in AI Models

LLMs like ChatGPT inherit biases present in their training data. This can manifest in various ways, such as:

- Gender bias: ChatGPT might generate responses that perpetuate stereotypes about gender roles.

- Racial bias: The model may produce outputs that reflect harmful racial stereotypes.

- Cultural bias: ChatGPT's responses may not be equally sensitive or accurate across different cultures.

While OpenAI is actively researching techniques to mitigate bias, such as data augmentation and adversarial training, completely eliminating bias remains a challenge. The FTC is likely examining OpenAI's efforts and whether they are adequate to prevent harm.
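To make the idea of data augmentation concrete, here is a minimal sketch of one form of it, counterfactual augmentation, in which each training sentence that contains a gendered term is paired with a copy that uses the opposite term. This is an illustration under stated assumptions, not OpenAI's method. The `SWAPS` table, `counterfactual`, and `augment` are hypothetical names, and the word list is deliberately tiny.

```python
import re

# Hypothetical, deliberately small swap table; each pair is unambiguous in both directions.
SWAPS = {
    "he": "she", "she": "he",
    "man": "woman", "woman": "man",
    "father": "mother", "mother": "father",
}

# Match any listed term as a whole word, ignoring case.
_PATTERN = re.compile(r"\b(" + "|".join(SWAPS) + r")\b", re.IGNORECASE)


def counterfactual(sentence: str) -> str:
    """Return the sentence with every listed gendered term swapped."""
    def swap(match: re.Match) -> str:
        word = match.group(0)
        replacement = SWAPS[word.lower()]
        # Keep a leading capital letter if the original word had one.
        return replacement.capitalize() if word[0].isupper() else replacement

    return _PATTERN.sub(swap, sentence)


def augment(corpus: list[str]) -> list[str]:
    """Return the corpus followed by a counterfactual copy of each sentence that changed."""
    extra = [c for s in corpus if (c := counterfactual(s)) != s]
    return corpus + extra


if __name__ == "__main__":
    for line in augment(["He is a doctor.", "The sky is blue."]):
        print(line)
```

Training on both versions is meant to weaken the association between a role and a gender. A word swap like this cannot handle ambiguous terms or context, which is one reason eliminating bias completely remains difficult.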

The Impact of Bias on Vulnerable Groups

Algorithmic bias disproportionately affects vulnerable groups, such as minorities, women, and individuals with disabilities. Biased AI systems can perpetuate societal inequalities and reinforce harmful stereotypes. The ethical implications are profound, and they may lead to legal challenges over algorithmic discrimination and unfair treatment. The FTC investigation could help establish guidelines that keep such biases from harming vulnerable communities.

The Broader Implications of the FTC Investigation for the AI Industry

The FTC's investigation into ChatGPT has far-reaching implications for the entire AI industry.

Setting Precedents for AI Regulation

This investigation could set a crucial precedent for future AI regulation, influencing how other AI companies develop and deploy their products. The outcome could lead to stricter guidelines on data privacy, algorithmic transparency, and bias mitigation. Such guidelines would significantly reshape AI ethics and governance.

The Future of AI Development and Innovation

Balancing innovation with responsible AI development is paramount. Increased regulation may slow innovation, but it is crucial to ensure that AI technologies are deployed ethically and safely. The FTC investigation encourages the AI industry to adopt best practices for ethical AI development and deployment, prioritizing user privacy, fairness, and accountability. These practices include proactive bias detection, robust data security, and transparent data handling policies.

Conclusion

The FTC's investigation into OpenAI's ChatGPT highlights the critical need for responsible AI development and robust regulatory frameworks. Concerns surrounding data privacy, algorithmic bias, and potential misuse demand careful consideration. The outcome of the investigation will likely influence the future of AI regulation and the way companies develop and deploy powerful AI technologies like ChatGPT. Understanding the issues it raises is crucial for navigating the complex ethical and legal landscape of artificial intelligence. Stay informed about developments in this area and continue to advocate for responsible AI practices.

Featured Posts

-

The Complexities Of Robotic Nike Sneaker Manufacturing

Apr 22, 2025

The Complexities Of Robotic Nike Sneaker Manufacturing

Apr 22, 2025 -

Hollywood Shut Down The Impact Of The Dual Writers And Actors Strike

Apr 22, 2025

Hollywood Shut Down The Impact Of The Dual Writers And Actors Strike

Apr 22, 2025 -

Pope Francis Dead At 88 Following Pneumonia Battle

Apr 22, 2025

Pope Francis Dead At 88 Following Pneumonia Battle

Apr 22, 2025 -

Fsus Post Shooting Class Resumption Plan A Controversial Decision

Apr 22, 2025

Fsus Post Shooting Class Resumption Plan A Controversial Decision

Apr 22, 2025 -

Land Your Dream Private Credit Role 5 Crucial Dos And Don Ts

Apr 22, 2025

Land Your Dream Private Credit Role 5 Crucial Dos And Don Ts

Apr 22, 2025

Latest Posts

-

4 5

May 10, 2025

4 5

May 10, 2025 -

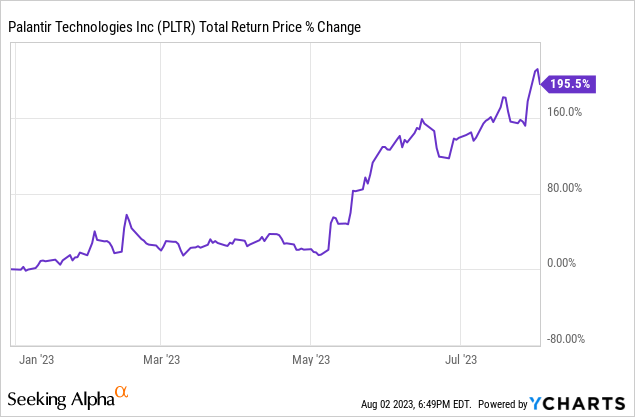

Analyzing Palantirs Potential A 40 Stock Increase By 2025 Is It Achievable

May 10, 2025

Analyzing Palantirs Potential A 40 Stock Increase By 2025 Is It Achievable

May 10, 2025 -

Late To The Game Evaluating Palantir Stock Investment Potential In 2024 For 2025 Gains

May 10, 2025

Late To The Game Evaluating Palantir Stock Investment Potential In 2024 For 2025 Gains

May 10, 2025 -

40 Palantir Stock Growth By 2025 A Realistic Investment Opportunity

May 10, 2025

40 Palantir Stock Growth By 2025 A Realistic Investment Opportunity

May 10, 2025 -

Should You Buy Palantir Stock Before Its Predicted 40 Rise In 2025

May 10, 2025

Should You Buy Palantir Stock Before Its Predicted 40 Rise In 2025

May 10, 2025